- MainFrame Tutorial - What is MainFrame

Of all the computing technologies still in use today, mainframes have the longest history, and it is an extensive one. Only a small percentage of the software engineers who worked on the earliest mainframes still work with them today. That legacy sets mainframes apart from practically every other computing technology in use.


What are MainFrames?

Mainframes are high-performance computers with lots of memory and processors that can handle a huge number of quick calculations and real-time transactions. Commercial databases, transaction servers, and applications that need high resilience, security, and agility depend on the mainframe.

If you are a mainframe programmer, you need to know how to write and verify the code that mainframe programs and applications need in order to run correctly. Mainframe programmers also convert the code written by mainframe engineers and developers into instructions that a computer can understand.

How are MainFrames Operated?

IBM mainframes are designed specifically to:

- Deliver the highest levels of security with cutting-edge software and built-in cryptographic cards. For instance, the most recent IBM zSystems® mainframes can manage privacy by policy while processing up to 1 trillion secure web transactions daily.

- Provide resilience through testing for extreme weather conditions and multiple layers of redundancy for each component (power supplies, cooling, backup batteries, CPUs, I/O components, and cryptographic modules).


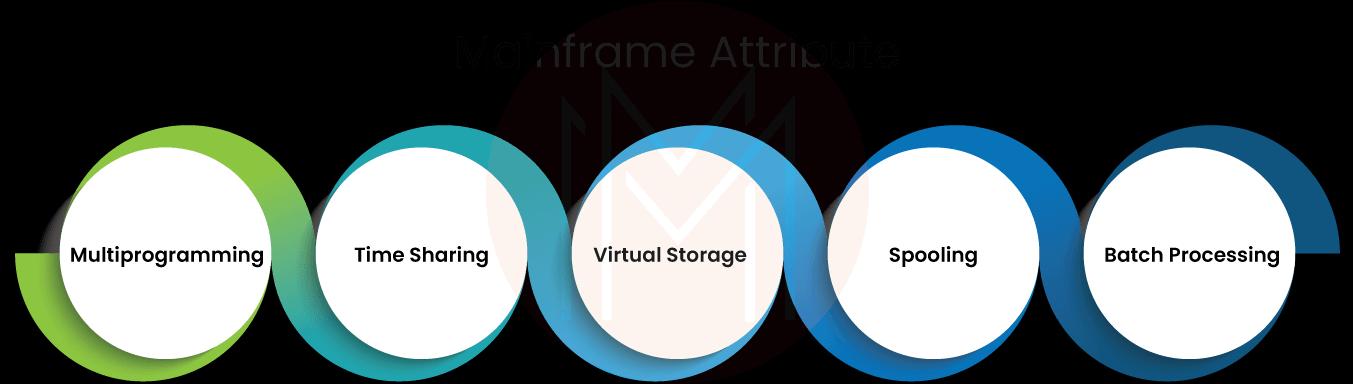

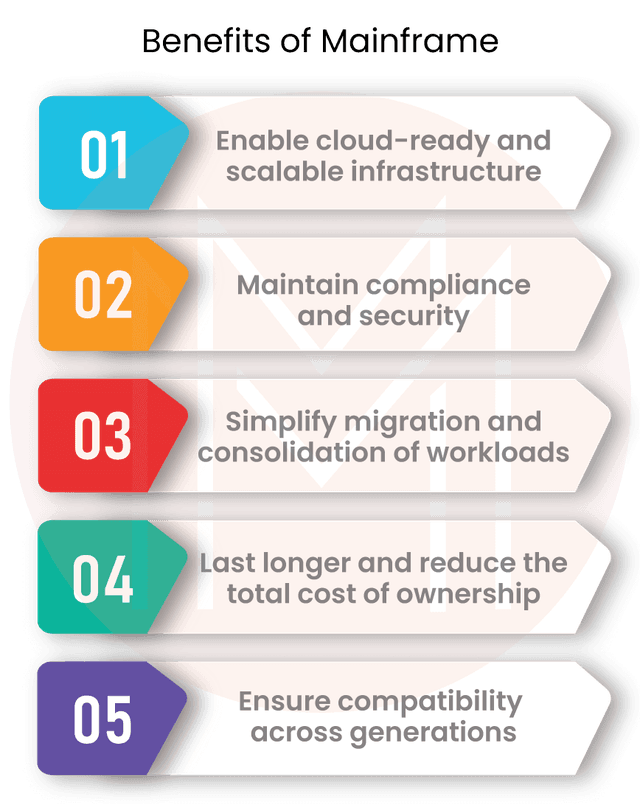

Benefits of the MainFrame

There are many benefits to using a mainframe for cloud computing. A few benefits are listed below that you should think about:

- Adaptable infrastructure: System z supports a range of highly secure virtualized environments for cloud implementations, including the z/VM operating system running virtual servers, hypervisors, blade servers, and logical partitions (LPARs), which are evaluated at Common Criteria EAL 5+.

- Scalability: Mainframes offer an ideal platform for big data analytics, data warehousing, production processing, and web applications, supporting millions of users with exceptional performance. It is estimated that up to 70% of corporate production data still resides or originates on the mainframe. As a result, private clouds running on System z have secure access to critical data, which can be shared when needed under the proper access controls, encryption, data security, masking, and integrity safeguards.

- Support for compliance laws, guidelines, and best practices that call for data encryption, job separation, privileged user monitoring, secure communication protocols, audit reporting, and other things.

- Availability, dependability, recoverability, security, integrity, and performance are at their highest levels on the current mainframe, making it a very resilient platform.

- Security: Implementing a mainframe private cloud enables better management with a high level of security transparency that gives an enterprise-wide view. Additionally, mainframe clouds can lessen the security risks associated with public clouds that use open networks.

- Migration: The number of distributed systems that need to be controlled is decreased by simply migrating distributed workloads to the mainframe virtualized environment.

- Workload Consolidation: After the virtual environment is optimized, combining different workloads on the mainframe is simple while ensuring any necessary separation between virtual systems. Additionally, this lowers licensing costs compared to distributed systems.

- Total Cost of Ownership (TCO): According to a study on the simplicity of security management, a private cloud built on IBM enterprise systems had a 3-year TCO that was 76% lower than a public cloud from a different service provider.


MainFrame Architecture

An architecture is a set of defined concepts and guidelines that serve as building blocks for products. Mainframe architecture has improved with each generation, yet mainframes have remained the most reliable, secure, and interoperable of all computing systems.

Architecture in computer science specifies how a system is organized. An architecture can be broken down recursively into constituent pieces, interactions between constituent parts, and constraints for assembling constituent parts. Classes, components, and subsystems are examples of parts that communicate with one another using interfaces.

Each new generation of mainframe computers has seen advancements in at least one of the following architectural components, beginning with the first massive machines, which appeared on the scene in the 1960s and gained the moniker "Big Iron" (in contrast to smaller departmental systems):

- Larger and faster processors

- More physical memory and better memory addressing

- The ability to upgrade both hardware and software dynamically

- Increased automation of hardware error detection and repair

- Improved input/output (I/O) devices, plus more and faster channels connecting I/O devices to processors

- More sophisticated I/O attachments, such as LAN adapters with extensive inboard processing

- A greater ability to divide the resources of a single machine into multiple, logically separate, and isolated systems, each running its own operating system

- Advanced clustering technologies, such as Parallel Sysplex®, and data sharing among multiple systems

Despite this ongoing change, mainframe computers remain the most reliable, secure, and interoperable of all computing systems. The most recent models can still run applications written in the 1970s or earlier while handling the most complex and demanding client workloads.

How is it that Technology can evolve so quickly and still be so reliable?

It can adapt to meet new challenges. The client/server computing model, which relies on distributed nodes of less powerful computers, emerged in the early 1990s to challenge the dominance of mainframe computers. Industry pundits called the mainframe a "dinosaur" and predicted its swift demise. In response, designers built new mainframe computers to meet demand, as they have always done when faced with changing times and a growing list of customer requirements. As a nod to the critics who called it a dinosaur, IBM®, the industry leader in mainframe computers, gave its then-current system the code name T-Rex.

The mainframe computer is ready to ride the next wave of growth in the IT sector thanks to its enhanced features and new tiers of data processing capabilities, including Web-serving, autonomics, disaster recovery, and grid computing. Manufacturers of mainframes like IBM are once more reporting double-digit annual sales growth.

The evolution keeps going. Although the mainframe computer still plays a key function in the IT organization, it is now considered the main hub in the biggest distributed networks. Several networked mainframe computers acting as key hubs and routers make up a considerable portion of the Internet.

As the mainframe computer's image continues to change, you might wonder whether it is a self-contained computing environment or one piece of the distributed computing puzzle. The answer is both: the New Mainframe can serve as the primary server in a company's distributed server farm or as a self-contained processing center, powerful enough to handle the largest and most diverse workloads in one secure "footprint." In the client/server computing model, the mainframe effectively serves as the definitive server.

MainFrame Tools

#1. CICS

CICS® stands for "Customer Information Control System." It is the general-purpose transaction processing subsystem for the z/OS® operating system. CICS provides services for running an application online, on request, while many other users are submitting requests to run the same applications using the same files and programs.

CICS manages resource sharing, data integrity, and execution priority while responding quickly. It authorizes users, allocates resources (such as real storage and processing cycles), and passes database requests from the program to the appropriate database manager (such as DB2®). In this sense, CICS behaves much like the z/OS operating system and performs many of the same functions.

A CICS application is a collection of related programs that together perform a business operation, such as processing a travel request or preparing a company payroll. CICS applications run under CICS control and use CICS services and interfaces to access programs and files.

Traditionally, CICS applications are started by submitting a transaction request. Executing the transaction consists of running one or more application programs that implement the required function. CICS documentation sometimes refers to CICS application programs simply as "programs," and sometimes uses the term "transaction" to mean the processing carried out by the application programs.

Enterprise JavaBeans™ can also be used as CICS applications.

Functions of CICS

The following are the primary tasks carried out by CICS in an application

- CICS handles requests from several program users simultaneously.

- Even when many users are working on the CICS system, each user gets the impression of being the only one.

- CICS provides access to data files so that they can be read or updated in an application.

Features of CICS

The following are CICS's Features

- CICS is an operating system in itself: it manages its own processor storage, has a task manager that supervises the execution of many programs, and provides its own file management functions.

- CICS provides an online environment within a batch operating system. Jobs submitted are executed immediately.

- CICS is a standardized interface for transaction processing.

- Because CICS runs as a batch job in the operating system at the back end, it is possible to have two or more CICS regions active at the same time.

- CICS functions as an operating system in its own right. Its job is to provide an environment for the online execution of application programs. CICS runs in a single address space, region, or partition, and it handles scheduling for the programs that run under it. Because CICS runs as a batch job, we can view it in the spool by issuing the command PREFIX CICS*.

CICS Environment

CICS provides five main services that work together to complete a task. We will go over each of them step by step:

- System Services

- Services for Data Communication

- Services for Handling Data

- Services for Application Programming

- Monitoring Services

CICS maintains the following control functions to manage the allocation and de-allocation of resources within the system:

- Task Control provides task scheduling and multitasking. It tracks the status of every CICS task and divides processor time among the tasks that are running concurrently; this is called multitasking. CICS tries to give the best response time to the most important tasks.

- Program Control manages the loading and release of application programs. As soon as a task begins, it must be associated with the appropriate application program. Although many tasks may need the same application program, CICS loads only one copy of the code into memory. Each task threads its way through this code independently, so many users can run transactions concurrently using the same physical copy of an application program.

- Storage Control manages the acquisition and release of main storage. It acquires, controls, and frees dynamic storage, which is used for input/output areas, programs, and so on.

- Interval Control provides timer services.
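The Task Control and Program Control behavior described above can be sketched as a rough Python model. This is not CICS code: the class names, the priority convention (a higher number runs first), and the run-to-completion dispatch are all assumptions made for illustration, and real CICS interleaves tasks rather than running each one to the end.

```python
import heapq
from itertools import count


class ProgramControl:
    """Hypothetical model: keeps a single loaded copy of each program."""

    def __init__(self):
        self.loaded = {}
        self.load_count = 0

    def load(self, name):
        # Load the program only the first time any task asks for it.
        if name not in self.loaded:
            self.loaded[name] = object()  # stands in for the program's code
            self.load_count += 1
        return self.loaded[name]


class TaskControl:
    """Hypothetical model: dispatches waiting tasks in priority order."""

    def __init__(self, programs):
        self.programs = programs
        self.queue = []
        self.seq = count()  # tie-breaker: equal priorities run in arrival order

    def start(self, user, program, priority):
        # heapq is a min-heap, so the priority is negated to pop the highest first.
        heapq.heappush(self.queue, (-priority, next(self.seq), user, program))

    def run_all(self):
        order = []
        while self.queue:
            _, _, user, program = heapq.heappop(self.queue)
            self.programs.load(program)  # shared copy, loaded at most once
            order.append((user, program))
        return order


programs = ProgramControl()
tasks = TaskControl(programs)
tasks.start("USER1", "PAYROLL", priority=1)
tasks.start("USER2", "PAYROLL", priority=5)
tasks.start("USER3", "TRAVEL", priority=5)
dispatched = tasks.run_all()
```

Here two users run the hypothetical PAYROLL program, yet `programs.load_count` ends at 2 because only PAYROLL and TRAVEL are ever loaded, once each.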

Services for Data Communication

- To handle data communication requests from application programs, Data Communication Services interface with telecommunication access methods like BTAM, VTAM, and TCAM.

- With Basic Mapping Support (BMS), CICS frees application programs from the burden of dealing with terminal hardware details.

- Multi-Region Operation (MRO) lets multiple CICS regions within the same system communicate with one another.

- Inter-System Communication (ISC) lets a CICS region on one system communicate with a CICS region on another system.

Services for Handling Data

- Data Handling Services interface with data access methods such as BDAM and VSAM.

- CICS services data handling requests from application programs. It gives application programmers a set of commands for managing data set and database access and related operations.

- Data Handling Services also interface with database access methods such as IMS/DB and DB2 to service database requests from application programs.

- By controlling simultaneous record updates, protecting data when tasks ABEND, and protecting data during system failures, CICS makes it easier to manage data integrity.

Services for Application Programming

Application Programming Services interface with application programs. They include features such as command-level translation, CEDF (the debug facility), and CECI (the command interpreter facility). These features are covered in more detail in later modules.

Monitoring Services

Monitoring Services track various events within the CICS address space. They provide a range of statistical information that can be used to tune the system.

#2. COBOL

COBOL stands for Common Business-Oriented Language. It is imperative and procedural and, in its more recent standards, object-oriented. Like other high-level languages, COBOL source code is translated by a compiler, a computer program that converts programs written in a high-level (source) language into machine code that the computer can execute. A typical COBOL program reads data from a file or database, processes it, and writes the output. In a nutshell, COBOL receives data, computes on it, and then produces the results.

COBOL was created for business computing applications in areas such as finance and human resources. Unlike many other high-level programming languages, COBOL uses English words and phrases so that ordinary business users can understand it more easily. The language was based on Rear Admiral Grace Hopper's work on FLOW-MATIC, a largely text-based programming language from the 1950s. Hopper, often referred to as the "grandmother of COBOL," served as a technical consultant on the COBOL project.

Before COBOL, each operating system had its own associated programming languages. This was a problem for organizations that used several brands of computers, as was the case with the US Department of Defense, which backed the COBOL project. Thanks to its portability and ease of use, COBOL quickly became one of the most widely used programming languages in the world. Even though COBOL is often considered obsolete, it is frequently cited as having more lines of code in active use than any other programming language.

Characteristics of COBOL

- Simplicity and standardization. COBOL is a standard language that is easy to learn and can be compiled and run on a variety of machines. It has a clean coding style and a large syntactic vocabulary.

- Business-oriented capabilities. COBOL's advanced file-handling capabilities let it process large volumes of data. By some estimates, more than 70% of all business transactions are still handled by COBOL programs. COBOL suits everything from simple batch reporting to complex operations.

- Universality. COBOL has adapted through six decades of business change and works across platforms and devices. Its debugging and testing tools are available on nearly all computer platforms, and new COBOL solutions, compilers, and development tools are released every year.

- Structure and scalability. COBOL's logical control structures make it easy to read, modify, and debug. COBOL is also dependable, scalable, and portable across platforms.

COBOL Features

Standard Language

COBOL is a standard language that can be compiled and run on platforms such as the IBM AS/400, personal computers, and other machines.

Business-Oriented Language

COBOL was designed for business-oriented applications in the financial, defense, and other sectors. Thanks to its advanced file-handling capabilities, it can handle large volumes of data.

Robust Language

COBOL is a robust language because its many debugging and testing tools are available for almost all computer platforms.

Structured Language

COBOL has logical control structures that make it easier to read and modify. Because a COBOL program is organized into divisions, it is also easy to debug.

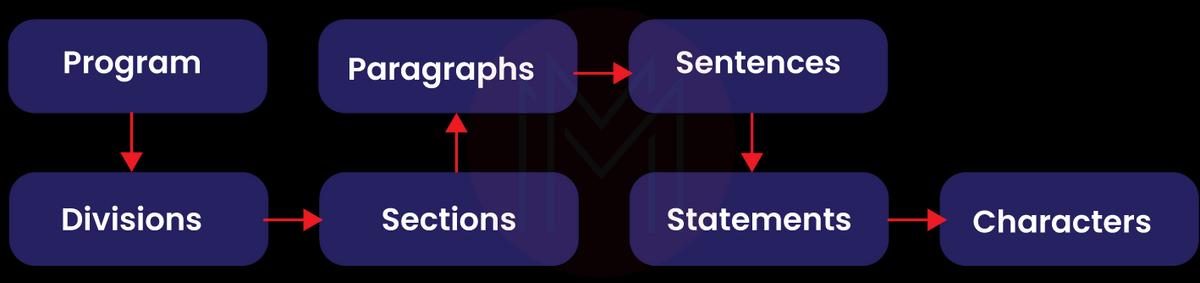

The following graphic illustrates the divisions that make up a COBOL program structure.

Below is a quick description of these divisions.

Program logic is divided into sections logically. A section consists of several paragraphs.

The subdivision of a section or division is a paragraph. It has zero or more sentences/entries and is either a user-defined or a predefined name followed by a period. The combination of one or more statements forms a sentence. Only the Procedure division has sentences. A period is required to end a sentence.

Statements are grammatically correct COBOL commands that carry out the processing.

In the hierarchy, characters are at the bottom and cannot be divided.
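To make the paragraph, sentence, and statement levels concrete, here is a minimal Python sketch that splits a small, made-up COBOL paragraph into those units. The paragraph text, the data names, and the three-verb list are assumptions for illustration only; a real COBOL parser must also handle literals, comments, and column rules, which this sketch ignores.

```python
# Hypothetical Procedure division paragraph: a name followed by two sentences.
PARAGRAPH = """
CALC-TOTAL.
    MOVE 0 TO WS-TOTAL.
    ADD WS-PRICE TO WS-TOTAL
    DISPLAY WS-TOTAL.
"""

# Tiny illustrative subset of COBOL verbs, used to spot where a statement starts.
VERBS = {"MOVE", "ADD", "DISPLAY"}


def split_paragraph(text):
    """Return (paragraph_name, sentences); each sentence is a list of statements."""
    # A period ends the paragraph name and every sentence.
    parts = [p.strip() for p in text.split(".") if p.strip()]
    name, bodies = parts[0], parts[1:]
    sentences = []
    for body in bodies:
        statements, current = [], []
        for word in body.split():
            # A verb starts a new statement once the current one has content.
            if word in VERBS and current:
                statements.append(" ".join(current))
                current = []
            current.append(word)
        statements.append(" ".join(current))
        sentences.append(statements)
    return name, sentences


name, sentences = split_paragraph(PARAGRAPH)
```

The first sentence holds a single statement, while the second holds two statements that share one terminating period.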


#3. DB2

DB2 is a database product from IBM. It is a relational database management system (RDBMS) designed to store, analyze, and retrieve data efficiently. The DB2 product has been extended with support for object-oriented features and for non-relational structures with XML.

History

IBM initially developed DB2 for its own platform. Since 1990, IBM has worked to provide a Universal Database (UDB) DB2 server that can run on any major operating system, including Linux, UNIX, and Windows.

Versions

The most recent DB2 UDB version covered here is 10.5, which features BLU Acceleration and carries the code name "Kepler." The table below lists the DB2 versions and their code names up to that release.

| Version | Code Name |
|---|---|
| 3.4 | Cobweb |
| 8.1, 8.2 | Stinger |
| 9.1 | Viper |
| 9.5 | Viper 2 |
| 9.7 | Cobra |
| 9.8 | None (added features for pureScale only) |
| 10.1 | Galileo |
| 10.5 | Kepler |

Editions and Features of Data Servers

Organizations choose the DB2 edition that best matches the features they need. The DB2 server editions and their features are listed below:

- Advanced Enterprise Server Edition and Enterprise Server Edition (AESE / ESE): Designed for mid-size to large business organizations. Platforms: Linux, UNIX, and Windows. Features: table partitioning, High Availability Disaster Recovery (HADR), Materialized Query Tables (MQTs), Multidimensional Clustering (MDC), connection concentrator, pureXML, backup compression, and homogeneous federation.

- Workgroup Server Edition (WSE): Designed for workgroups or mid-size business organizations. Features: High Availability Disaster Recovery (HADR), online reorganization, pureXML, web service federation support, DB2 homogeneous federation, homogeneous SQL replication, and backup compression.

- Express-C: Provides the core capabilities of DB2 at zero charge. It can run on any physical or virtual system with any size of configuration.

- Express Edition: Designed for entry-level and mid-size business organizations. It is a full-featured DB2 data server that offers a limited set of services. Features: web service federation, DB2 homogeneous federation, homogeneous SQL replication, and backup compression.

- Enterprise Developer Edition: Licensed for a single application developer. It is useful for designing, building, and prototyping applications for deployment on any IBM server. The software cannot be used for production systems.

#4. IMS DB

Information Management System is referred to as IMS. IMS was created in 1966 as part of the Apollo mission to send a man to the moon by IBM, Rockwell, and Caterpillar. It spearheaded the revolution in database management systems and is still developing to satisfy demands for data processing. IMS offers a user-friendly, dependable, and uniform environment for carrying out high-performance transactions. High-level programming languages like COBOL use the IMS database to store and access data organized hierarchically.

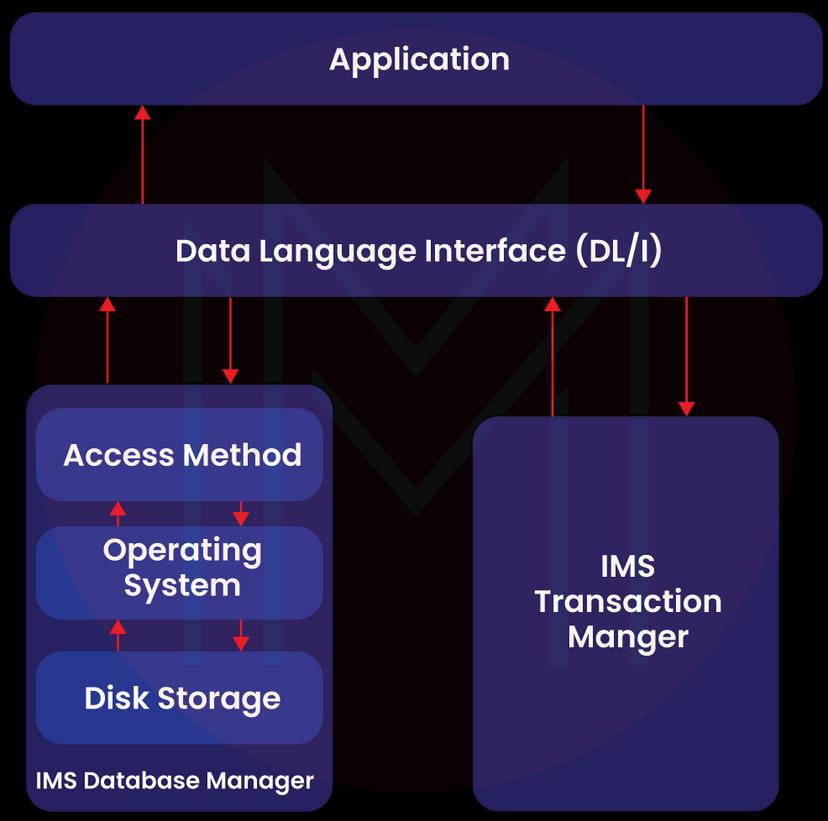

A database is a grouping of connected pieces of data. These information elements are arranged and kept in storage such that quick and simple access is possible. Data is stored in a hierarchical format in the IMS database, where each entity relies on higher-level entities. The following diagram depicts the physical components of an application system that utilizes IMS
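The hierarchical format described above, where each entity depends on a higher-level entity, can be sketched as a small tree in Python. This is only a conceptual model, not the IMS API: the `Segment` class, the `find_path` helper, and the LIBRARY/BOOK/LOAN names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    """Hypothetical node in a hierarchy: a name, some data, and child segments."""
    name: str
    data: dict
    children: list = field(default_factory=list)

    def add(self, child):
        self.children.append(child)
        return child


def find_path(root, names):
    """Follow segment names from the root downward; return the segment or None."""
    if not names or root.name != names[0]:
        return None
    node = root
    for name in names[1:]:
        # A lower-level segment is reachable only through its parent.
        node = next((c for c in node.children if c.name == name), None)
        if node is None:
            return None
    return node


library = Segment("LIBRARY", {"city": "Austin"})
book = library.add(Segment("BOOK", {"title": "JCL Basics"}))
book.add(Segment("LOAN", {"member": "M001"}))

loan = find_path(library, ["LIBRARY", "BOOK", "LOAN"])
orphan = find_path(library, ["LIBRARY", "LOAN"])  # skips BOOK, so nothing is found
```

The second lookup fails because a LOAN exists only beneath a BOOK, which illustrates both the fast top-down access and the reduced flexibility of a fixed tree structure.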

Management of Databases

A database management system is a set of application programs used for storing, accessing, and managing data in a database. The IMS database management system maintains data integrity and allows fast recovery by organizing data so that it is easy to retrieve. With its database management system, IMS manages large volumes of corporate data around the world.

Manager of Transactions

The transaction manager provides a communication platform between the database and the application programs; in this role, IMS acts as a transaction manager. A transaction manager deals with the end user to store and retrieve data from the database. IMS can use either IMS DB or DB2 as its back-end database to store the data.

Data Language Interface (DL/I)

DL/I comprises the application programs that grant access to the data stored in the database. IMS DB uses DL/I as the interface language that programmers use to access the database in an application program.

IMS characteristics to keep in mind

- IMS supports applications written in different languages, such as Java, and works with XML.

- IMS applications and data can be accessed from any platform.

- IMS DB processing is very fast compared with DB2.

IMS Limitations

- Implementing IMS DB is very complex.

- The predefined tree structure of IMS reduces flexibility.

- IMS DB is difficult to manage.

#5. JCL

Job Control Language (JCL) is the command language of Multiple Virtual Storage (MVS), the main operating system for IBM mainframe systems. Through job control statements, JCL tells the operating system which program to execute, which inputs are required, and where the input and output are located. Programs can run on a mainframe in batch mode or online mode, and JCL is used to submit a program for execution in batch mode.

When to Use JCL

In a mainframe environment, JCL acts as the communication channel between a program (for instance, one written in COBOL, Assembler, or PL/I) and the operating system. An example of a batch system is a job that processes bank transactions held in a VSAM (Virtual Storage Access Method) file and applies them to the corresponding accounts. An example of an online system is a back-office screen that bank staff use to open an account. In batch mode, programs are submitted to the operating system as a job through JCL.

Processing Jobs

- A job is a unit of work that can be made up of many job steps. Each job step is specified in Job Control Language (JCL) through a set of Job Control Statements.

- The operating system uses the Job Entry Subsystem (JES) to receive jobs, schedule them for processing, and control their output.

The steps involved in processing a job are as follows:

- Job Submission: Sending JES the JCL.

- Job Conversion: The JCL and any PROC it uses are converted into an interpreted text that JES can understand, which is stored in a dataset called the SPOOL.

- Job Queuing: JES decides the priority of the job based on the CLASS and PRTY parameters in the JOB statement. The JCL is checked for errors, and if there are none, the job is scheduled into the job queue.

- Job Execution: When the job reaches the highest priority, it is picked from the job queue for execution. The JCL is read from the SPOOL, the program is executed, and the output is directed to the output destination specified in the JCL.

- Purging: When the job is complete, the allocated resources and the JES SPOOL space are released. To keep the job log, it must be copied to another dataset before it is released from the SPOOL.
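The queuing and execution steps above can be sketched as a priority queue in Python. This is a simplified model and not how JES is implemented: the `JobQueue` class, its method names, the job names, and the single "starts with //" error check are assumptions for illustration, with a higher PRTY value taken to mean higher priority.

```python
import heapq
from itertools import count


class JobQueue:
    """Hypothetical model of submission, error checking, queuing, and selection."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # keeps arrival order among equal priorities
        self.rejected = []

    def submit(self, name, jcl_lines, job_class, prty):
        # Stand-in for the JCL error check: every statement must begin with "//".
        if not all(line.startswith("//") for line in jcl_lines):
            self.rejected.append(name)
            return False
        # heapq is a min-heap, so PRTY is negated to pop the highest first.
        heapq.heappush(self._heap, (-prty, next(self._seq), name, job_class))
        return True

    def select(self, accepted_classes):
        """Pop the highest-priority job whose class is accepted, or return None."""
        skipped, chosen = [], None
        while self._heap:
            entry = heapq.heappop(self._heap)
            if entry[3] in accepted_classes:
                chosen = entry[2]
                break
            skipped.append(entry)
        for entry in skipped:  # put back jobs of other classes
            heapq.heappush(self._heap, entry)
        return chosen


queue = JobQueue()
queue.submit("PAYROLL", ["//PAYROLL JOB 1,CLASS=A,PRTY=5"], "A", 5)
queue.submit("REPORT", ["//REPORT JOB 1,CLASS=A,PRTY=9"], "A", 9)
queue.submit("BADJOB", ["BADJOB JOB 1,CLASS=A"], "A", 15)  # fails the check
run_order = [queue.select({"A"}), queue.select({"A"}), queue.select({"A"})]
```

In this run the job with the malformed statement never reaches the queue, and the remaining jobs are selected in descending PRTY order.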

JCL Execution on MainFrames

Users can connect to a mainframe server in several ways, including a thin client, a dummy terminal, a Virtual Client System (VCS), or a Virtual Desktop System (VDS).

Every valid user is given a login ID to access the z/OS interface (TSO/E or ISPF). In the z/OS interface, the JCL can be coded and stored as a member in a Partitioned Dataset (PDS). When the JCL is submitted, it is executed and the output is received.

Structure of a JCL

The basic structure of a JCL with the common statements is given below:

//SAMPJCL JOB 1,CLASS=6,MSGCLASS=0,NOTIFY=&SYSUID (1)

//* (2)

//STEP010 EXEC PGM=SORT (3)

//SORTIN DD DSN=JCL.SAMPLE.INPUT,DISP=SHR (4)

//SORTOUT DD DSN=JCL.SAMPLE.OUTPUT, (5)

// DISP=(NEW,CATLG,CATLG),DATACLAS=DSIZE50

//SYSOUT DD SYSOUT=* (6)

//SYSUDUMP DD SYSOUT=C (6)

//SYSPRINT DD SYSOUT=* (6)

//SYSIN DD * (6)

SORT FIELDS=COPY

INCLUDE COND=(28,3,CH,EQ,C'XXX')

/* (7)

Program Summary

The following explains each of the numbered JCL statements:

- (1) JOB statement: Specifies the information needed for SPOOLing the job, such as the job ID, the priority of execution, and the user ID to be notified when the job completes.

- (2) //* statement: This is a comment statement.

- (3) EXEC statement: Specifies the PROC or program to be executed. In the example above, a SORT program is executed (that is, the input data is sorted in a particular order).

- (4) Input DD statement: Specifies the input to be passed to the program named in (3). In the example above, a Physical Sequential (PS) file is passed as input in shared mode (DISP=SHR).

- (5) Output DD statement: Specifies the output to be produced by the program. In the example above, a PS file is created. If a statement extends beyond the 70th position in a line, it is continued on the next line, which should begin with "//" followed by one or more spaces.

- (6) Other DD statements: Supply additional information to the program (in the example above, the SYSIN DD statement carries the SORT condition) and specify the destination for error and execution logs (for example, SYSUDUMP and SYSPRINT). The data for a DD statement can be held in a dataset (mainframe file) or supplied as in-stream data (information hard-coded within the JCL), as shown in the example above.

- (7) /* marks the end of the in-stream data.

Except for in-stream data, every JCL statement begins with //. There should be at least one space before and after the JOB, EXEC, and DD keywords, and no spaces within the rest of the statement.
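The layout rules above can be illustrated with a small Python sketch that classifies one line of JCL and splits a statement into its name, operation, and parameter fields. The `parse_statement` function and its dictionary keys are hypothetical, and the sketch is deliberately simplified: it treats any line with a blank right after "//" as a continuation and ignores column limits.

```python
def parse_statement(line):
    """Classify one JCL line and split a statement into its fields."""
    if line.startswith("//*"):
        return {"kind": "comment", "text": line[3:].strip()}
    if line.startswith("/*"):
        return {"kind": "delimiter"}  # end of in-stream data
    if not line.startswith("//"):
        return {"kind": "in-stream data", "text": line.strip()}
    body = line[2:]
    if body[:1] in ("", " "):
        # Simplification: no name after "//" is treated as a continuation line.
        fields = body.split()
        return {"kind": "continuation", "params": fields[0] if fields else ""}
    # Fields are separated by spaces; parameters contain no spaces, so anything
    # after the parameter field (such as "(4)") is treated as a comment.
    fields = body.split()
    return {
        "kind": "statement",
        "name": fields[0],
        "operation": fields[1] if len(fields) > 1 else "",
        "params": fields[2] if len(fields) > 2 else "",
    }


step = parse_statement("//STEP010 EXEC PGM=SORT (3)")
sortin = parse_statement("//SORTIN DD DSN=JCL.SAMPLE.INPUT,DISP=SHR (4)")
cont = parse_statement("// DISP=(NEW,CATLG,CATLG),DATACLAS=DSIZE50")
```

Because the parameter field ends at the first space, a space after a comma inside the parameters would cut the field short, which is why the statement rules forbid spaces there.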

#6. VSAM

Virtual Storage Access Method (VSAM) is both a type of data set and the access method used to manage various types of user data.

As an access method, VSAM offers much more sophisticated functions than other disk access methods. VSAM stores disk records in a unique format that other access methods cannot interpret.

VSAM is used primarily for application data. It is not used for source programs, JCL, or executable modules. VSAM files cannot be routinely displayed or edited with ISPF.

VSAM can organize records into four types of data sets: key-sequenced (KSDS), entry-sequenced (ESDS), linear (LDS), and relative record (RRDS). The main difference between these data set types is the way their records are stored and accessed.

VSAM Characteristics

The following are the VSAM's characteristics:

- Using passwords, VSAM guards data against unwanted access.

- Data sets can be accessed quickly with VSAM.

- Performance optimization options exist for VSAM.

- Sharing data sets in batch and online environments is possible with VSAM.

- VSAM stores data in a more structured and organized way.

- In VSAM files, free space is automatically reused.

Constraints of VSAM

The main restriction of VSAM is that it cannot be stored on TAPE volumes; it is always kept on DASD. It also requires a number of cylinders to store data, which is not cost-effective.

VSAM consists of the following components:

- VSAM Cluster

- Control Area

- Control Interval

VSAM Cluster

VSAM clusters are logical datasets for storing records. A cluster is an association of the index, sequence set, and data components of the dataset. The space occupied by a VSAM cluster is divided into contiguous areas called Control Intervals, which are covered in more detail later in this tutorial.

A VSAM cluster consists of two primary parts:

- Index Component: Contains the index part, which holds the index records. VSAM uses the index component to retrieve records from the data component.

- Data Component: Contains the data part, which holds the actual data records.

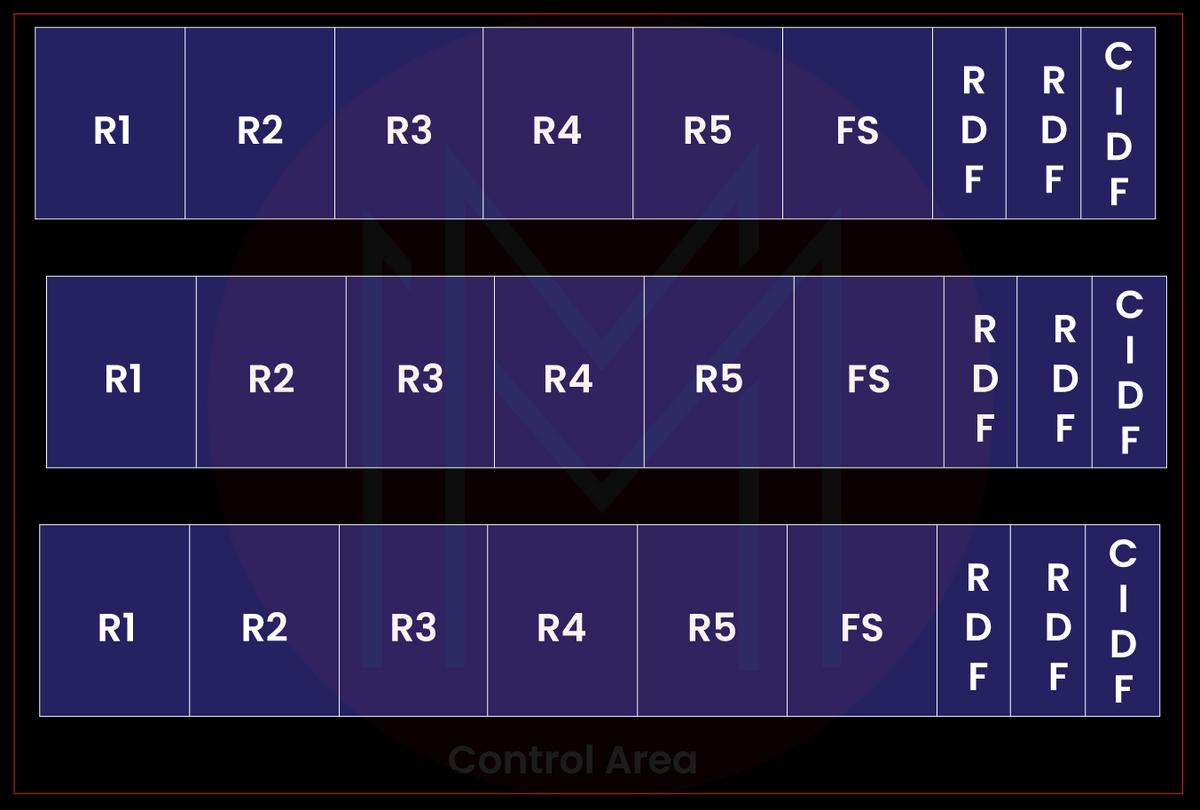

The terms used in the diagram above are as follows:

- R1..R5 are the records stored in the Control Interval.

- FS is the free space, which can be used to extend the dataset.

- RDF stands for Record Definition Field. Each RDF is 3 bytes long. It describes the length of records and tells how many adjacent records have the same length.

- CIDF stands for Control Interval Definition Field. The CIDF is 4 bytes long and contains information about the Control Interval.
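As a worked example of how these fields share a Control Interval, the following Python sketch estimates the free space left after storing a set of records. The 4096-byte Control Interval size and the record lengths are made-up values, and the sketch assumes one RDF per record, which fits records of differing lengths; adjacent records of equal length can share RDFs, so the real overhead may be smaller.

```python
RDF_BYTES = 3   # from the text: each RDF is 3 bytes long
CIDF_BYTES = 4  # from the text: the CIDF is 4 bytes long


def free_space(ci_size, record_lengths):
    """Bytes left in a Control Interval, assuming one RDF per record."""
    used = sum(record_lengths) + RDF_BYTES * len(record_lengths) + CIDF_BYTES
    if used > ci_size:
        raise ValueError("records do not fit in the control interval")
    return ci_size - used


# Hypothetical Control Interval of 4096 bytes holding five records (R1..R5).
remaining = free_space(4096, [100, 250, 80, 300, 120])
```

The five records occupy 850 bytes, their RDFs 15 bytes, and the CIDF 4 bytes, so 3227 of the 4096 bytes remain as free space under these assumptions.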

Control Area

A Control Area (CA) is formed by combining two or more Control Intervals. A VSAM dataset can have one or more Control Areas. The size of a VSAM dataset is always a multiple of its Control Area size, and VSAM files are extended in units of Control Areas.

The Control Area example is provided below

MainFrames Frequently Asked Questions

1. What is the mainframe used for?

A mainframe is a digital computer designed for high-speed data processing, with heavy use of input/output devices such as large-capacity disks and printers. Mainframes have been used for payroll calculations, accounting, business transactions, information retrieval, airline seat reservations, and scientific and engineering computations. In many applications, client-server architecture has largely replaced mainframe systems that relied on remote "dumb" terminals.

2. Does the mainframe have coding?

Contrary to common assumptions, mainframes and the programmers who develop code for them are still alive and thriving. Furthermore, they do not program in obscure ancient languages. They combine more "contemporary" mainframe languages like Java and C++ with more conventional ones like COBOL and REXX.

3. What are the three examples of mainframe computers?

IBM zSeries, System z9, and System z10 are three good examples of mainframe computers.

4. What are MainFrame Skills?

- Attention to detail.

- Business processes.

- Capacity management.

- Communication.

- Data analysis.

- Data integration.

- DB2.

- Digital security.

5. Is Java required for the mainframe?

Java is a language, whereas the mainframe is a platform, so the two cannot be compared directly. Mainframes are capable of running Java, but Java is not required to work on a mainframe. Java runs on the mainframe, not the other way around.

Conclusion

In this tutorial, you have learned about important mainframe technologies such as CICS, COBOL, DB2, IMS DB, JCL, and VSAM. These technologies offer different features and functionality. We have tried to cover everything in a single, ready reference. We hope this tutorial will be useful to both aspiring mainframe professionals and those already working in the field.
