
In earlier days, companies used their own private servers for storage and compute services. Now that internet speeds have improved, companies big and small have started adopting cloud computing and storing their data in the cloud for better performance, which lets them concentrate more on their core competencies. Because every company is adopting cloud services and AWS is a leading player, technical aspirants are eager to learn AWS. There are not enough people who know how to work with AWS, and jobs are going unfilled.

It is evident that AWS cloud skills are, and will remain, in great demand for years to come, so professionals who want to become certified AWS experts can join our AWS training. According to ziprecruiter.com, the average salary for a certified AWS professional in the US is around $161K per annum. In this AWS tutorial, you will learn what AWS is and the advantages of using it, along with core AWS services such as EC2, S3, and Lambda.

What is AWS?

The full form of AWS is Amazon Web Services. AWS is a platform that gives users access to on-demand services such as virtual cloud servers and database storage. It uses distributed IT infrastructure to provide various IT resources, and it offers services across the Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and packaged Software as a Service (SaaS) models.

AWS Basics

What is Cloud Computing

Cloud computing is a computing model in which large groups of networked remote servers provide centralized data storage and online access to computing resources and services. Following are the types of clouds:

Public Cloud

In the public cloud, third-party service providers make services and resources available to users over the internet.

Private Cloud

A private cloud offers many of the same features as a public cloud, but its services and data are dedicated to one organization and managed by that organization or by a third party. The main focus of this type of cloud is infrastructure.

Hybrid Cloud

A hybrid cloud is a combination of public and private clouds. Depending on how sensitive the applications and data are, we run them in the private cloud or the public cloud.

AWS Advantages

Following are the advantages of AWS:

- AWS enables organizations to use popular operating systems, programming models, architectures, and databases.

- AWS is a cost-efficient service that enables us to pay only for what we use.

- We do not need to spend money running and maintaining our own data centers.

- AWS provides rapid deployments.

- AWS provides distributed management and billing.

- Through AWS, we can deploy our applications in multiple regions throughout the world with just a few clicks.

What are the features of AWS?

Flexibility

AWS flexibility lets us select suitable programming languages, programming models, and operating systems, so we do not need to learn new skills to adopt it. This flexibility also makes it easy to migrate applications to the cloud, and it is a major asset for organizations that want to deliver products built on up-to-date technology.

Scalable and Elastic

In a conventional IT organization, scalability and elasticity are limited by the available infrastructure and investment. Scalability is the ability to scale computing resources up or down as demand increases or decreases, and elasticity is the ability to do so quickly and automatically, so that capacity tracks demand.

Cost-effective

Cost is one of the key factors to consider when providing IT solutions. The cloud offers on-demand infrastructure that lets us use only the resources we genuinely require. In AWS, we are not locked into a fixed set of computing, bandwidth, or storage resources. AWS has no long-term commitment, upfront investment, or minimum spend.

Secure

AWS offers a scalable cloud computing platform that gives customers end-to-end privacy and security. AWS builds security into its services and publishes documentation that explains how to use the security features.

Expertise

The AWS cloud offers high levels of security, privacy, reliability, and scalability. AWS continues to help its customers by improving its infrastructure capabilities, and it has built that infrastructure on lessons learned from past operations.


What are the Applications of AWS?

We use AWS for the following kinds of workloads:

- SaaS Hosting

- Website hosting

- Social and Mobile Applications

- Media Sharing

- Academic Computing

- Social Networking

- Search Engines

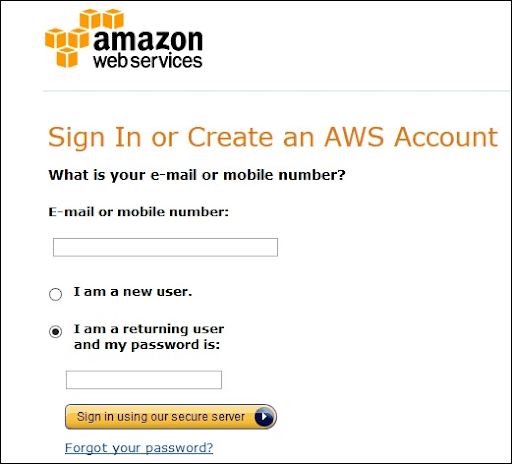

How to Create an Account in AWS?

AWS offers a free account (the AWS Free Tier) for one year so that we can use and learn the different components of AWS. Through an AWS account, we can access AWS services such as S3 and EC2.

Step 1: To create an AWS account, open the link:

After opening the link, enter your details and sign up for a new account.

If you already have an account, you can sign in with your email address and password.

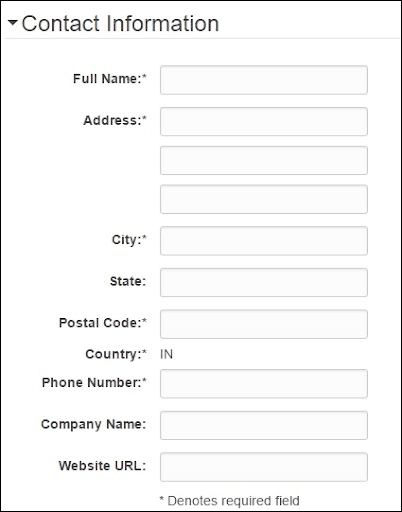

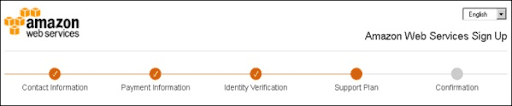

Step 2: After entering your email address, fill in the form. Amazon uses this information for invoicing, identification, and billing of the account. After the account is created, sign up for the services you need.

Step 3: To sign up for the services, provide your payment information. Amazon makes a small charge against the card on file to verify that it is valid. This charge differs by region.

Step 4: Next, verify your identity. Amazon calls back the contact number you provided to verify it.

Step 5: Select a support plan: Basic, Developer, Business, or Enterprise. The Basic plan is free and offers very limited support, which is enough for getting acquainted with AWS.

Step 6: The last step is confirmation. Click the link to sign in and go to the AWS Management Console.

AWS Account Identifier

AWS allocates two unique IDs to every AWS account:

- AWS account ID

The AWS account ID is a 12-digit number that we use to construct Amazon Resource Names (ARNs). It distinguishes our resources from the resources in other AWS accounts.

- Canonical user ID

The canonical user ID is a long string of hexadecimal characters (64 characters). We use it in Amazon S3 bucket policies and access control lists for cross-account access, i.e., for accessing the resources of another AWS account.
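Because the account ID is what makes an ARN unique to one account, it helps to see where it sits. Below is a minimal sketch that assembles an ARN in the general `arn:partition:service:region:account-id:resource` form, assuming the standard `aws` partition. The helper name `build_arn` and the account ID `123456789012` are illustrative placeholders, not values from a real account. Some services leave fields empty: IAM is global, so its ARNs have no Region.

```python
import re


def build_arn(service: str, region: str, account_id: str, resource: str,
              partition: str = "aws") -> str:
    """Assemble an ARN of the form arn:partition:service:region:account-id:resource.

    Hypothetical helper for illustration; pass region="" for global services such as IAM.
    """
    # Keep the account ID as a string: it is exactly 12 digits and may start with 0.
    if not re.fullmatch(r"[0-9]{12}", account_id):
        raise ValueError(f"AWS account IDs are 12 digits, got {account_id!r}")
    return f"arn:{partition}:{service}:{region}:{account_id}:{resource}"


if __name__ == "__main__":
    # IAM is a global service, so the Region field is left empty.
    print(build_arn("iam", "", "123456789012", "user/alice"))
    # arn:aws:iam::123456789012:user/alice
```

Keeping the ID as a string matters: storing it as an integer would silently drop a leading zero and produce an ARN that points at no account.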


AWS IAM (Identity and Access Management)

AWS Identity and Access Management (IAM) is the service that controls who can access our AWS resources. An IAM user is an identity that we create in AWS to represent a person who uses AWS with restricted access to the resources.

How to Create users in IAM?

Step 1: Go to the following link to log in to the AWS Management console.

Step 2: Choose Users in the left navigation pane to open the list of users.

Step 3: Choose the “Create New Users” option; a new window opens. Type the user name you want to create, then choose Create to create the new user.

Step 4: Choose the “show users security credentials” link to see the access key ID and secret access key. Use the “download credentials” option to save them to your computer.

Step 5: Manage the user's security credentials as needed.
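The same steps can be scripted. Here is a sketch, assuming the AWS SDK for Python (boto3) is installed and credentials with IAM permissions are configured. `create_iam_user_with_key` is a hypothetical helper name, while `create_user` and `create_access_key` are the IAM client's own operations. The client is passed in as a parameter, so the function can be exercised without touching a real account.

```python
def create_iam_user_with_key(iam_client, user_name: str) -> dict:
    """Create an IAM user and one access key for it (hypothetical helper).

    `iam_client` is expected to behave like boto3.client("iam").
    The secret access key is returned only at creation time, so store it
    securely instead of printing or logging it.
    """
    iam_client.create_user(UserName=user_name)
    key = iam_client.create_access_key(UserName=user_name)["AccessKey"]
    return {"AccessKeyId": key["AccessKeyId"], "SecretAccessKey": key["SecretAccessKey"]}
```

With real credentials this would be called as `create_iam_user_with_key(boto3.client("iam"), "my-user")`. Like the "download credentials" step in the console, this is the only moment the secret key is available.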

AWS Compute Services

AWS EC2

What is AWS EC2?

Amazon Elastic Compute Cloud (EC2) is a web service that provides scalable compute capacity in the cloud. EC2 reduces the time needed to obtain and boot new server instances to minutes instead of days. With physical infrastructure, getting a new server means placing a purchase order and cabling the machine, which is an extremely time-consuming process.

We can scale compute capacity up or down as our computing requirements change. EC2 also gives developers the tools to build failure-resilient applications and isolate them from common failure scenarios.

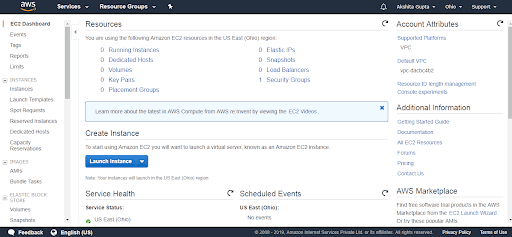

Creating an EC2 Instance

- Sign in to the AWS Management Console, open the EC2 service, and choose “Launch Instance” to create a new instance.

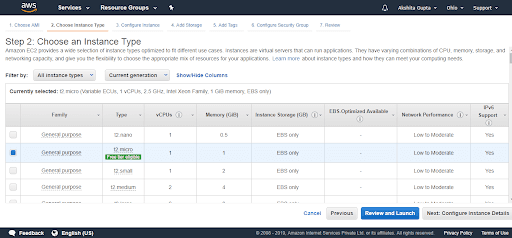

- Choose “Select” next to the Microsoft Windows Server 2016 Base AMI.

- Make sure that the t2.micro instance type is selected, choose “Review and Launch,” and then choose “Launch.”

- Choose “Create a new key pair,” enter the key pair name in the box that appears, and choose “Download Key Pair” to download the key. Then choose “Launch Instances.”

After the instance launches, we return to the Amazon EC2 console.
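The console steps above map onto two EC2 API calls. Below is a sketch assuming boto3. The helper names are hypothetical, and the AMI ID used in the usage note is a placeholder, because AMI IDs differ per Region and must be looked up for the Windows Server 2016 Base image you picked. `create_key_pair` and `run_instances` and their parameter names are EC2's own.

```python
def build_launch_params(ami_id: str, key_name: str, instance_type: str = "t2.micro") -> dict:
    """Keyword arguments for EC2 RunInstances that mirror the console choices above."""
    return {
        "ImageId": ami_id,              # Region-specific AMI ID
        "InstanceType": instance_type,
        "KeyName": key_name,            # must name an existing key pair
        "MinCount": 1,                  # launch exactly one instance
        "MaxCount": 1,
    }


def launch_with_new_key(ec2_client, ami_id: str, key_name: str):
    """Create a key pair, launch one instance with it, and return (instance_id, private_key).

    `ec2_client` is expected to behave like boto3.client("ec2").
    AWS does not keep the private key, so save the returned PEM text to a file.
    """
    private_key = ec2_client.create_key_pair(KeyName=key_name)["KeyMaterial"]
    response = ec2_client.run_instances(**build_launch_params(ami_id, key_name))
    return response["Instances"][0]["InstanceId"], private_key
```

A real call would look like `launch_with_new_key(boto3.client("ec2"), "ami-0123456789abcdef0", "my-key")`, with the hypothetical AMI ID replaced by a real one. Building the parameters in a separate function keeps them easy to inspect before anything is launched.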

AWS Lambda

What is AWS Lambda?

AWS Lambda is a compute service that lets us run code without provisioning or managing servers. Lambda runs our code on its compute infrastructure and handles all administration of the compute resources, including server and operating system maintenance, automatic scaling, and code logging. With Lambda, we can run code for virtually any type of application or backend service.

We organize our code into Lambda functions. Lambda runs a function only when it is needed and scales automatically, from a few requests per day to thousands per second.

When to use Lambda

Lambda is a convenient compute service for many application scenarios, as long as we can run our application code in the Lambda standard runtime environment and within the resources that Lambda provides. When using Lambda, you are responsible only for your code. Lambda manages the compute fleet that offers a balance of memory, CPU, network, and other resources to run your code.

AWS Lambda Features

Following are the important features of AWS Lambda:

- Concurrency and scaling controls

Concurrency and scaling controls, such as concurrency limits and provisioned concurrency, give us fine-grained control over the scaling and responsiveness of our applications.

- Code signing

Code signing for AWS Lambda provides trust and integrity controls that verify that only unaltered code from approved developers is deployed in our Lambda functions.

- Functions defined as container images

We can use our preferred container image tooling, workflows, and dependencies to build, test, and deploy our Lambda functions.

- Lambda extensions

We use Lambda extensions to augment our Lambda functions. For instance, extensions let us integrate Lambda with our preferred tools for monitoring, observability, security, and governance.

Creating a Lambda Function

Step 1: Open the Functions page of the Lambda console.

Step 2: Select “Create Function.”

Step 3: In “Basic Information,” perform the following:

- For the “Function Name,” type “my-function.”

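- For “Runtime,” select a Node.js runtime. This walkthrough originally used “Node.js 14.x,” which Lambda has since deprecated, so choose the newest Node.js version offered. Lambda also offers runtimes for .NET, Java, Python, and Ruby, and supports Go through its OS-only runtime.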

Step 4: Select “Create function.”
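If a Python runtime is chosen in Step 3 instead of Node.js, the function body is just a handler. Below is a minimal sketch, assuming the console's default handler setting `lambda_function.lambda_handler` (file `lambda_function.py`, function `lambda_handler`). The `name` field in the event and the `statusCode`/`body` response shape (the format an API Gateway proxy integration expects) are assumptions for illustration.

```python
import json


def lambda_handler(event, context):
    """Called by Lambda once per invocation.

    `event` is the invocation payload, already decoded from JSON into a dict;
    `context` carries runtime details (request ID, remaining time) and is unused here.
    """
    name = event.get("name", "world")  # hypothetical input field
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be tried locally before deploying: `lambda_handler({"name": "Ada"}, None)` returns a dict whose `body` is `'{"message": "Hello, Ada!"}'`.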


What is CloudWatch

Amazon CloudWatch is a service that we use to monitor, in real time, our AWS resources and the applications we run on AWS. We use CloudWatch to collect and track metrics, which are variables we can measure for our resources and applications. CloudWatch automatically displays metrics about every AWS service we use.

CloudWatch Terminology

- Alarms

Alarms notify us whenever a metric crosses a threshold that we specify.

- Dashboards

We use CloudWatch dashboards to display what is happening in our AWS account.

- Events

CloudWatch Events allow us to respond to state changes in our AWS resources.

- Logs

CloudWatch Logs enables us to aggregate, monitor, and store logs.
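An alarm like the one described above can also be created in code. Below is a sketch, assuming boto3 and an existing SNS topic for notifications. The helper names, the 80% threshold, and the two 5-minute evaluation periods are illustrative choices. `put_metric_alarm` and its parameter names are the CloudWatch API's own. With these settings, the alarm fires when average EC2 `CPUUtilization` stays above the threshold for two consecutive periods.

```python
def cpu_alarm_params(instance_id: str, topic_arn: str, threshold: float = 80.0) -> dict:
    """Keyword arguments for CloudWatch PutMetricAlarm on one EC2 instance's CPU."""
    return {
        "AlarmName": f"high-cpu-{instance_id}",
        "Namespace": "AWS/EC2",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Average",
        "Period": 300,                  # one data point per 5 minutes
        "EvaluationPeriods": 2,         # must breach for 2 consecutive periods
        "Threshold": threshold,         # percent CPU
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [topic_arn],    # e.g. an SNS topic that emails the team
    }


def create_cpu_alarm(cloudwatch_client, instance_id: str, topic_arn: str) -> None:
    """`cloudwatch_client` is expected to behave like boto3.client("cloudwatch")."""
    cloudwatch_client.put_metric_alarm(**cpu_alarm_params(instance_id, topic_arn))
```

Requiring two breaching periods instead of one is a common way to avoid alerts on brief CPU spikes. The trade-off is a slower reaction to sustained load.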

AWS Storage Services

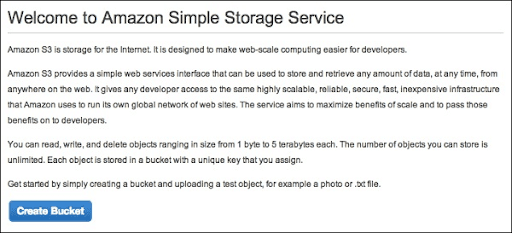

What is AWS S3

Amazon S3 (Simple Storage Service) is a low-cost, high-speed, scalable storage service designed for online backup, data archiving, and application programs. It lets us upload, store, and download any kind of file (object) up to 5 TB in size. It gives subscribers access to the same kind of storage systems that Amazon uses to run its own websites.

How to Configure S3

Following are the steps to configure Amazon S3:

Step 1: Go to the Amazon S3 console

Step 2: Create the bucket as follows:

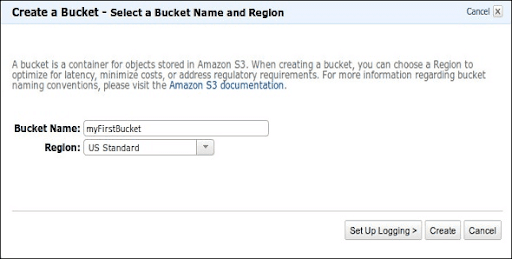

- Choose the “Create Bucket” button.

- Fill in the required details and choose “Create” to create the bucket.

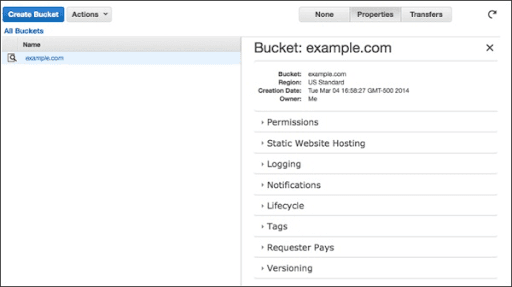

- The bucket is created successfully, and the following console displays the bucket list and its properties.

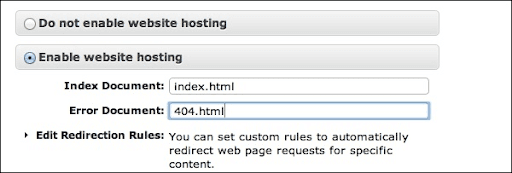

- Choose the static website hosting option, select the “Enable website hosting” radio button, and fill in the required details.

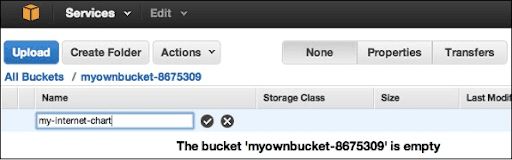

Step 3: Insert an object into the bucket through the following steps:

- Open the Amazon S3 console and select the bucket.

- Choose the Upload button.

- Choose “Add Files,” select the files that you want to upload from your system, and then choose “Open.”

- Choose “Start Upload” to upload the files into the bucket.

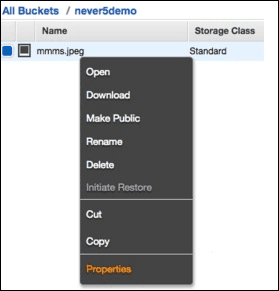

- To open or download an object, open the Amazon S3 console, go to the list of folders and objects, right-click the object, and choose Open or Download.
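The bucket, website, and upload steps above can also be scripted. Below is a minimal sketch, assuming boto3. The bucket name, Region, and file names are placeholders, and the helper functions are hypothetical. `create_bucket`, `put_bucket_website`, and `upload_file` are real S3 client methods. Two details the console hides: outside `us-east-1`, `create_bucket` needs an explicit `LocationConstraint`, and `upload_file` does not set a `Content-Type` by itself, so HTML pages should be uploaded as `text/html` for browsers to render them.

```python
def bucket_create_params(bucket: str, region: str) -> dict:
    """Arguments for CreateBucket; us-east-1 is the default location and takes no constraint."""
    params = {"Bucket": bucket}
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return params


def website_config(index: str = "index.html", error: str = "error.html") -> dict:
    """WebsiteConfiguration for static website hosting."""
    return {"IndexDocument": {"Suffix": index}, "ErrorDocument": {"Key": error}}


def publish_static_site(s3_client, bucket: str, region: str, local_index: str) -> None:
    """Create the bucket, enable website hosting, and upload the index page.

    `s3_client` is expected to behave like boto3.client("s3", region_name=region).
    """
    s3_client.create_bucket(**bucket_create_params(bucket, region))
    s3_client.put_bucket_website(Bucket=bucket, WebsiteConfiguration=website_config())
    s3_client.upload_file(local_index, bucket, "index.html",
                          ExtraArgs={"ContentType": "text/html"})
```

To fetch an object back, `s3_client.download_file(bucket, "index.html", "copy.html")` mirrors the console's Download action. New buckets block public access by default, so serving the site publicly also requires relaxing Block Public Access and adding a bucket policy that allows reads.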

AWS Storage classes

AWS storage classes maintain data integrity through checksums, and several of them are designed to withstand the concurrent loss of data in multiple facilities. Following are four commonly used storage classes:

- S3 Standard

It is the default storage class, which stores data redundantly across multiple devices in multiple facilities. It is designed to sustain the concurrent loss of two facilities, and it offers high throughput and low latency.

- S3 One Zone-Infrequent Access

We use S3 One Zone-IA when we access data less frequently but need fast access when required. It stores data in a single Availability Zone, whereas the other storage classes listed here store data in at least three. It is suitable for backup data and other infrequently accessed data, and lifecycle rules can migrate its objects to other S3 storage classes.

- S3 Standard-IA

IA stands for infrequent access. We use the Standard-IA storage class for data that we use less frequently but need rapid access to when required. Like S3 Standard, it is designed to sustain the concurrent loss of two facilities.

- S3 Glacier

It is the cheapest of these four storage classes, and it is intended for archives. We can store any volume of data at lower cost than in the other storage classes, and we can upload objects directly to S3 Glacier.
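Lifecycle rules are how objects move between these classes automatically. Below is a sketch of a rule set that moves objects to Standard-IA after 30 days and to S3 Glacier after 90, assuming boto3's `put_bucket_lifecycle_configuration` is used to apply it. The day counts, the rule ID, and the helper name are illustrative. S3 requires objects to be at least 30 days old before they can transition to Standard-IA, which the helper checks. The ordering check is this sketch's own sanity rule.

```python
def tiering_rules(prefix: str = "", ia_days: int = 30, glacier_days: int = 90) -> dict:
    """LifecycleConfiguration that tiers objects down as they age (hypothetical helper)."""
    if ia_days < 30:
        # S3 rejects transitions to STANDARD_IA for objects younger than 30 days.
        raise ValueError("Standard-IA transitions need objects at least 30 days old")
    if glacier_days <= ia_days:
        # Sanity check of this sketch: archive only after the Standard-IA step.
        raise ValueError("glacier_days must be greater than ia_days")
    return {
        "Rules": [{
            "ID": "tier-down-by-age",
            "Filter": {"Prefix": prefix},       # "" applies the rule to every object
            "Status": "Enabled",
            "Transitions": [
                {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                {"Days": glacier_days, "StorageClass": "GLACIER"},
            ],
        }]
    }
```

Applying it would look like `s3_client.put_bucket_lifecycle_configuration(Bucket="my-bucket", LifecycleConfiguration=tiering_rules("logs/"))`, where the bucket name and prefix are placeholders.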

CloudFront CDN

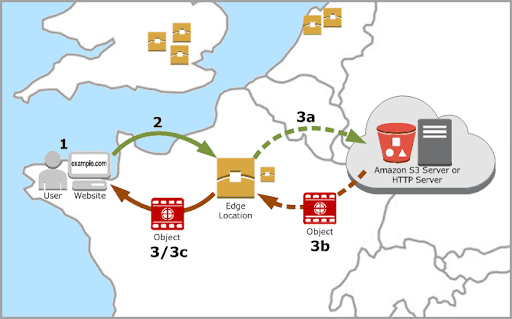

A CDN (content delivery network) is a system of distributed servers that delivers web pages and other web content to a user based on the user's geographic location, the origin of the web page, and the content delivery server. Amazon CloudFront is the AWS CDN service.

Terminology of CloudFront CDN

Distribution: It is the name given to a CDN, which consists of a collection of edge locations. Creating a new CDN in CloudFront means creating a distribution.

Origin: It specifies the origin of all the files which CDN will distribute. The origin can be an EC2 Instance, an Elastic Load Balancer, or an S3 bucket.

Edge Location: It is the location where content is cached. Edge locations are separate from AWS Regions and Availability Zones.

How CloudFront CDN delivers content to the users

After we set up CloudFront to deliver our content, here is what happens when users request files:

- A user accesses our application or website and requests files like an HTML file and an image file.

- DNS routes the request to the CloudFront POP (edge location) that can best serve it, typically the nearest POP in terms of latency, and the request is sent to that edge location.

- At the POP, CloudFront checks its cache for the requested files. If the files are in the cache, CloudFront returns them to the user. If they are not, CloudFront does the following:

- CloudFront compares the request with the specifications in your distribution and forwards the request for the files to the origin server for the corresponding file type.

- The origin servers send the files back to the edge location.

- As soon as the first byte arrives from the origin, CloudFront begins forwarding the files to the user. CloudFront also adds the files to the cache in the edge location for the next time someone requests them.
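The hit-or-miss logic in these steps can be modeled in a few lines. This is a toy sketch, not CloudFront's implementation. It ignores TTLs, cache headers, eviction, and first-byte streaming, and `EdgeLocation` and the in-memory origin are hypothetical.

```python
from typing import Callable, Dict


class EdgeLocation:
    """Toy model of one edge cache sitting in front of an origin."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]):
        self._fetch = fetch_from_origin
        self._cache: Dict[str, bytes] = {}
        self.origin_requests = 0  # how many times the origin was contacted

    def get(self, path: str) -> bytes:
        if path in self._cache:       # hit: serve straight from the edge
            return self._cache[path]
        self.origin_requests += 1     # miss: forward the request to the origin
        body = self._fetch(path)
        self._cache[path] = body      # keep a copy for later viewers
        return body


if __name__ == "__main__":
    origin = {"/index.html": b"<h1>Hello</h1>"}
    edge = EdgeLocation(lambda path: origin[path])
    edge.get("/index.html")           # first request misses
    edge.get("/index.html")           # second request hits
    print(edge.origin_requests)       # 1
```

The counter shows the point of a CDN: however many users request the same file from one edge location, the origin is contacted only on the first miss.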

AWS Snowball

Snowball is a data transport solution that uses secure physical appliances to transfer large amounts of data into and out of AWS while bypassing the internet. Instead of handling individual disks, Amazon sends you an appliance, and you load the data onto it.

Snowball addresses common challenges of large-scale data transfers, such as long transfer times, security concerns, and high network costs. Transferring data with Snowball is simple, fast, and secure. Snowball uses 256-bit encryption, tamper-resistant enclosures, and a Trusted Platform Module (TPM) to help ensure security.
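A quick calculation shows why transfer time is the main challenge. Moving D bytes over a link of R bits per second at utilization u takes 8 × D / (u × R) seconds. The sketch below applies that formula. The 80% default utilization is an assumption, and real throughput depends on the network path.

```python
SECONDS_PER_DAY = 86_400


def network_transfer_days(data_bytes: float, link_bps: float, utilization: float = 0.8) -> float:
    """Days to move data_bytes over a link_bps link running at the given utilization.

    The 0.8 default is an assumed sustained utilization, not a measured figure.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    seconds = data_bytes * 8 / (link_bps * utilization)  # bytes -> bits, then divide by rate
    return seconds / SECONDS_PER_DAY


if __name__ == "__main__":
    # 100 TB (decimal) over a 1 Gbps line used at 100%: about 9.3 days.
    print(round(network_transfer_days(100e12, 1e9, utilization=1.0), 1))
```

At the assumed 80% utilization, the same 100 TB takes about 11.6 days, which is the kind of transfer Snowball moves off the network entirely.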

Snowball Edge

Snowball Edge is a 100 TB data transfer device with on-board storage and compute capabilities. It is like a small AWS data center that we can bring on-site, and we can also use it to move large amounts of data into and out of AWS.

AWS Network Services

AWS VPC

The full form of VPC is Virtual Private Cloud. Amazon VPC provides a logically isolated section of the AWS cloud where we can launch AWS resources in a virtual network that we define. We have full control over our virtual networking environment, including the selection of our IP address range, the creation of subnets, and the configuration of route tables.

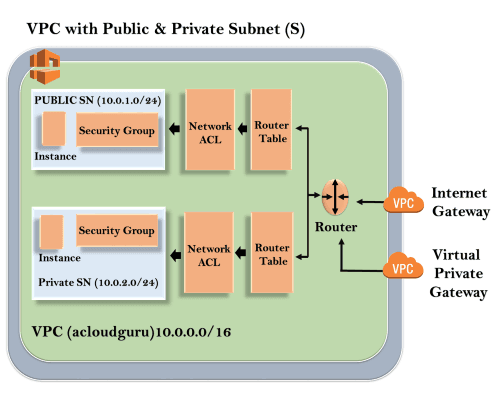

VPC Architecture

In the diagram, the outline represents the Region, and the Region name is “us-east-1”. Inside the Region we have the VPC, and outside the VPC we have a virtual private gateway and an internet gateway, which are the two ways of connecting to the VPC. Both connections go to the router in the VPC, and the router directs the traffic to the route table. The route table then directs the traffic to the network ACL. A network ACL is a stateless firewall at the subnet level that holds a numbered list of rules that allow or deny traffic. (A security group is a separate, stateful firewall attached to individual instances.)
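Network ACL evaluation follows a fixed procedure. Rules are checked in ascending rule-number order, the first rule that matches decides, and traffic that matches no rule is denied by the final `*` rule. Below is a simplified sketch of that inbound evaluation. It assumes each rule has only a source CIDR and a single port (real rules also match protocol and port ranges), and `AclRule` and `evaluate_inbound` are hypothetical names. Because network ACLs are stateless, return traffic needs its own outbound rule, which this sketch does not model.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AclRule:
    number: int            # lower numbers are evaluated first
    cidr: str              # source range for an inbound rule
    port: Optional[int]    # None stands for "all ports" in this sketch
    allow: bool            # True = ALLOW, False = DENY


def evaluate_inbound(rules: List[AclRule], source_ip: str, port: int) -> bool:
    """Return True if the first matching rule allows the packet."""
    addr = ipaddress.ip_address(source_ip)
    for rule in sorted(rules, key=lambda r: r.number):
        if addr in ipaddress.ip_network(rule.cidr) and rule.port in (None, port):
            return rule.allow  # first match wins; later rules are ignored
    return False               # implicit final "*" rule: deny everything else


if __name__ == "__main__":
    rules = [
        AclRule(100, "0.0.0.0/0", 443, allow=True),
        AclRule(90, "203.0.113.0/24", None, allow=False),
    ]
    print(evaluate_inbound(rules, "198.51.100.7", 443))  # True: rule 100 allows HTTPS
    print(evaluate_inbound(rules, "203.0.113.9", 443))   # False: rule 90 matches first
    print(evaluate_inbound(rules, "198.51.100.7", 22))   # False: no rule matches
```

The second case shows why rule numbers matter. The broad allow rule 100 would admit 203.0.113.9, but the deny rule 90 is evaluated first and wins.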


Uses of VPC

- Through VPC, we can launch instances in a subnet of our choice.

- With VPC, we can assign custom IP address ranges to each subnet.

- By using VPC, we can configure route tables between subnets.

- It offers strong security control over our AWS resources.

- We can assign security groups to individual instances.

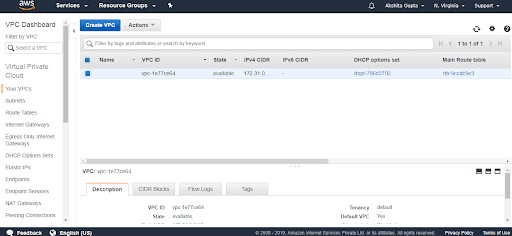

Creating your Custom VPC

- First, sign in to the AWS Management Console.

- Under “Networking and Content Delivery,” choose the VPC service.

- Choose “Your VPCs” on the left side of the console.

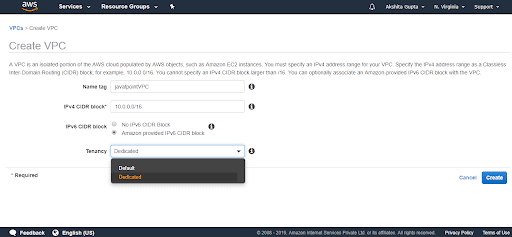

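- Choose “Create VPC” to create our custom VPC.

- Fill in the details for the custom VPC.

We have to fill in the following fields:

- Name tag: the name that we give to our VPC.

- IPv4 CIDR block: the private IPv4 address range for the VPC. Make it as large as you are likely to need; the largest block AWS allows for a VPC is a /16 (for example, 10.0.0.0/16).

- IPv6 CIDR block: optionally, we can also associate an IPv6 CIDR block.

- Tenancy: leave this at the default (shared hardware) unless the instances must run on dedicated hardware.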
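Before filling in the IPv4 CIDR block, it helps to see how a VPC range divides into subnets. Below is a sketch using Python's standard `ipaddress` module, assuming a `10.0.0.0/16` VPC split into `/24` subnets. `plan_subnets` is a hypothetical helper. It reflects two AWS rules: an IPv4 VPC block must be between /16 and /28, and AWS reserves five addresses (the first four and the last) in every subnet.

```python
import ipaddress
from itertools import islice
from typing import List, Tuple

RESERVED_PER_SUBNET = 5  # AWS reserves the first four addresses and the last one


def plan_subnets(vpc_cidr: str, new_prefix: int, count: int) -> List[Tuple[str, int]]:
    """Return the first `count` subnets of size /new_prefix with their usable address counts."""
    vpc = ipaddress.ip_network(vpc_cidr)
    if vpc.version != 4 or not 16 <= vpc.prefixlen <= 28:
        raise ValueError("an IPv4 VPC CIDR block must be between /16 and /28")
    if not vpc.prefixlen <= new_prefix <= 28:
        raise ValueError("subnet prefix must lie between the VPC prefix and /28")
    subnets = islice(vpc.subnets(new_prefix=new_prefix), count)
    return [(str(s), s.num_addresses - RESERVED_PER_SUBNET) for s in subnets]


if __name__ == "__main__":
    for cidr, usable in plan_subnets("10.0.0.0/16", 24, 3):
        print(cidr, usable)
    # 10.0.0.0/24 251
    # 10.0.1.0/24 251
    # 10.0.2.0/24 251
```

The planned blocks can then be passed to the EC2 API, for example `ec2_client.create_vpc(CidrBlock="10.0.0.0/16")` followed by one `create_subnet(VpcId=..., CidrBlock=...)` call per planned subnet.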

AWS Direct Connect

AWS Direct Connect is a cloud service that makes it easy to establish a dedicated network connection from our premises to AWS. Through AWS Direct Connect, we can create private connectivity between AWS and our office, data center, or colocation environment, which can reduce our network costs, increase bandwidth throughput, and provide a more consistent network experience than internet-based connections.

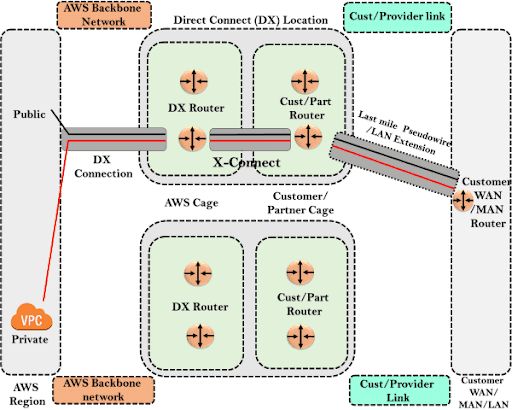

Direct Connect Architecture

- AWS Region: In the above architecture, we have an AWS Region, which contains public services such as S3 as well as our VPCs.

- Direct Connect Location: Direct Connect locations are spread throughout the world. Each Direct Connect location contains two cages: the customer or partner cage and the AWS cage. The AWS cage contains the Direct Connect routers, and the customer/partner cage contains the customer or partner routers.

AWS Bastion Host

A bastion host is a special-purpose computer on a network that is specifically designed and configured to withstand attacks. The computer generally hosts a single application, for instance a proxy server, and all other services are removed or limited to reduce the threat to the computer. A bastion host is hardened in this way because of its purpose and location, which is either outside a firewall or inside a demilitarized zone (DMZ).

In the above architecture, we have private and public subnets. The NAT instance sits behind a security group, while the NAT gateway does not, because we configure a NAT instance with a security group but a NAT gateway does not use security groups. When instances in the private subnet need to access the internet, they do so through the NAT gateway or the NAT instance.

AWS AMI

The full form of AMI is Amazon Machine Image. It is a template that we use to create a virtual machine, i.e., an EC2 instance. AMIs come in two types, based on the storage used for the root device:

- EBS-backed instances: The root device is an Amazon EBS volume, which provides persistent storage. Data on the volume survives stopping and restarting the instance, and the volume can be kept after the instance is terminated if its delete-on-termination setting is turned off.

- Instance store-backed instances: The root device is an instance store volume, which is temporary storage attached to the host; its size depends on the instance type. Once we terminate the instance, the data is lost.

AWS Database Services

Amazon DynamoDB

Amazon DynamoDB is also called Amazon Dynamo Database or DDB. It is a fully managed NoSQL database service offered by AWS (Amazon Web Services). DynamoDB is known for its low latency and scalability. According to AWS, DynamoDB reduces costs and simplifies storing and retrieving data.

Advantages of AWS DynamoDB

- Scalable

Amazon DynamoDB spreads the resources dedicated to a table across thousands of servers in multiple Availability Zones to meet our throughput and storage requirements. There is no limit to the amount of data each table can store.

- Rapid

Amazon DynamoDB offers high throughput at very low latency. It is built on solid-state drives (SSDs) to deliver consistently high performance, even at large scale.

- Managed

DynamoDB relieves developers of provisioning hardware and software, setting up and configuring a distributed database cluster, and managing ongoing cluster operations. It handles all the complexities of partitioning and scaling our data over more machine resources to meet our I/O performance needs.

- Flexible

Amazon DynamoDB is a highly flexible system that does not force its users into a particular data model or consistency model. DynamoDB tables do not have a fixed schema; instead, each data item can have any number of attributes.

- Available and Durable

Amazon DynamoDB replicates its data across at least three data centers, so the system keeps working and serving data even when failures occur.

Components of Amazon DynamoDB

Following are the components of the Amazon DynamoDB:

- Tables: Like other database systems, DynamoDB stores data in tables. A table is a collection of data.

- Items: Each table contains multiple items. An item is a group of attributes that is uniquely identifiable among all the other items. In DynamoDB, there is no limit to the number of items we can store in a table.

- Attributes: Each item is composed of one or more attributes. An attribute is a fundamental data element that does not need to be broken down further. For instance, a “People” table has attributes such as PersonID, FirstName, and LastName.

- Primary Key: When we create a table, we must define its primary key. The primary key uniquely identifies each item in the table, so no two items can have the same key.
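To make these components concrete, here is how the “People” example could be declared through the low-level DynamoDB API. This is a sketch assuming boto3. `PersonID` as a numeric partition key, on-demand billing, and the helper names are illustrative choices. Only the key attribute is declared when the table is created, and every other attribute is added freely per item.

```python
def people_table_params(table_name: str = "People") -> dict:
    """Keyword arguments for DynamoDB CreateTable with PersonID as the primary key."""
    return {
        "TableName": table_name,
        # Declare only key attributes; FirstName, LastName, etc. need no schema.
        "AttributeDefinitions": [{"AttributeName": "PersonID", "AttributeType": "N"}],
        "KeySchema": [{"AttributeName": "PersonID", "KeyType": "HASH"}],  # partition key
        "BillingMode": "PAY_PER_REQUEST",  # on-demand capacity, no throughput to provision
    }


def person_item(person_id: int, first_name: str, last_name: str) -> dict:
    """One item in the low-level format, where each attribute value is tagged with its type."""
    return {
        "PersonID": {"N": str(person_id)},  # numbers travel as strings in this format
        "FirstName": {"S": first_name},
        "LastName": {"S": last_name},
    }
```

With a client from `boto3.client("dynamodb")`, the table is created with `create_table(**people_table_params())` and items are written with `put_item(TableName="People", Item=person_item(1, "Ada", "Lovelace"))`. A plain `put_item` silently replaces an existing item with the same key. Adding `ConditionExpression="attribute_not_exists(PersonID)"` makes the write fail instead, which enforces the one-item-per-key rule at insert time.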

Amazon Redshift

Amazon Redshift is a fast, powerful, fully managed, petabyte-scale data warehouse service in the cloud. According to AWS, Redshift starts at $0.25 per hour with no upfront costs or commitments and scales to about $1,000 per terabyte per year. A Redshift cluster can be set up in two ways:

- Single node: A single node can store up to 160 GB.

- Multi-node: A multi-node cluster contains multiple nodes of two types:

- Leader Node

The leader node manages client connections and receives queries. It parses the queries from client applications, develops execution plans, coordinates the parallel execution of those plans with the compute nodes, and aggregates the intermediate results from those nodes.

- Compute Node

A compute node runs the execution plans and sends its intermediate results back to the leader node for aggregation before the final results are returned to the client application.
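The leader/compute split is a scatter-gather pattern. Below is a toy sketch in plain Python. It assumes each compute node holds one slice of a numeric column and the query is an AVG. Threads stand in for nodes, and the function names are hypothetical, not Redshift internals.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def compute_node_partial(rows: List[float]) -> Tuple[float, int]:
    """Work done on one compute node: partial SUM and COUNT over its slice."""
    return sum(rows), len(rows)


def leader_average(slices: List[List[float]]) -> float:
    """Leader node: run the plan on every slice in parallel, then aggregate."""
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(compute_node_partial, slices))
    total = sum(s for s, _ in partials)
    count = sum(c for _, c in partials)
    if count == 0:
        raise ValueError("no rows to average")
    return total / count


if __name__ == "__main__":
    # Slices of unequal size: the true average is 210 / 6 = 35.0
    print(leader_average([[10.0, 20.0], [30.0], [40.0, 50.0, 60.0]]))  # 35.0
```

The leader combines partial sums and counts rather than averaging each node's average. For the slices above, averaging the averages would give (15 + 30 + 50) / 3 ≈ 31.7, which is wrong because the slices differ in size.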


AWS Analytics Services

Amazon Elastic MapReduce

Amazon EMR (Elastic MapReduce) is a managed cluster platform that simplifies running big data frameworks such as Apache Hadoop and Apache Spark on AWS to process and analyze vast amounts of data. Using these frameworks and related open-source projects such as Apache Pig and Apache Hive, we can process data for analytics purposes and business intelligence workloads. Following are the advantages of Amazon EMR:

- Cost savings

Amazon EMR pricing depends on the instance type, the number of EC2 instances we deploy, and the Region in which we launch our cluster.

- AWS Integration

Amazon EMR integrates with other AWS services to provide capabilities related to networking, storage, security, and more for our cluster.

- Deployment

Our EMR cluster consists of EC2 instances that perform the work we submit to the cluster. When we launch the cluster, Amazon EMR configures the instances with the applications we choose, such as Apache Spark or Hadoop.

- Reliability

Amazon EMR monitors the nodes in our cluster and automatically terminates and replaces an instance if it fails. Amazon EMR provides configuration options that control whether our cluster is terminated automatically or manually. If we configure the cluster to terminate automatically, it terminates after all the steps complete.

Uses of AWS EMR(Elastic MapReduce)

Following are the uses of AWS EMR:

- Real-time Analytics: We can consume and process real-time data, perform the streaming analysis in a fault-tolerant way, and write the results to HDFS or Amazon S3.

- Log Analysis: Amazon EMR simplifies processing the logs generated by web and mobile applications, turning semi-structured or unstructured data into valuable insights.

- Clickstream Analysis: Amazon EMR (Elastic MapReduce) can analyze clickstream data to understand user preferences and deliver more efficient and valuable advertisements.

- Extract Transform Load: Amazon EMR can quickly and cost-effectively perform data transformation workloads such as sorting and aggregating.
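The frameworks EMR runs are built on the MapReduce model. A map step emits key/value pairs, a shuffle groups them by key, and a reduce step combines each group. Below is a single-process sketch of the classic word count, for illustration only. On an EMR cluster, Hadoop or Spark would run the same three phases across many EC2 instances. All function names are hypothetical.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    """Emit (word, 1) for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Group all emitted values by key, as the framework does between map and reduce."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]]) -> Dict[str, int]:
    """Combine each key's values into a single count."""
    return {key: sum(values) for key, values in groups.items()}


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    print(word_count(["the cat sat", "The dog sat"]))
    # {'the': 2, 'cat': 1, 'sat': 2, 'dog': 1}
```

Because each map call sees only one line and each reduce call sees only one key's values, both phases can be split across machines. This is what lets EMR scale the same job from kilobytes to petabytes.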

AWS Machine Learning

Amazon provides various tools and services under AWS Machine Learning. These solutions allow organizations and developers to deploy ML systems more rapidly compared to a code-based approach.


Services of AWS Machine Learning

- SageMaker: This service lets us move machine learning models from experimentation into production quickly and effectively. SageMaker includes tools to design, build, train, and deploy ML models.

- Fraud Detector: Amazon Fraud Detector helps flag potentially fraudulent activity, such as suspicious accounts. Organizations supply their existing data on known fraudulent transactions to train it for future use.

- Comprehend: This natural language processing (NLP) service uses machine learning to extract valuable information from text, including unstructured data such as customer service emails and customer reviews.

Advantages of Amazon Machine Learning

- Open Platform: AWS machine learning suits machine learning researchers and data scientists alike. AWS provides machine learning tools and services designed to fit our needs and expertise level.

- Wide Framework Support: AWS supports all the major machine learning frameworks, including TensorFlow and Caffe2, so we can develop or bring any model we choose.

- Deep Platform Integrations: AWS ML services integrate with the rest of the AWS platform, including the database and data lake tools we need to run machine learning workloads, so our data and our models can live on one platform.

- Secure: Through granular permission policies, we control access to the resources. Database and storage services provide strong encryption to keep your data secure, and flexible key management options let us create and manage the encryption keys.

Conclusion

AWS is a leading cloud service provider that offers a broad range of cloud services, more than 200 today. More than 90% of companies were expected to deploy their products and services on cloud platforms by 2024. This AWS tutorial has given you a brief understanding of the core AWS services.



Viswanath is a passionate content writer of Mindmajix. He has expertise in Trending Domains like Data Science, Artificial Intelligence, Machine Learning, Blockchain, etc. His articles help the learners to get insights about the Domain. You can reach him on Linkedin