Apache Airflow Tutorial - An Ultimate Guide for 2025

If you work with Big Data, you have most likely heard of Apache Airflow. It began as an open-source project in 2014 to help companies and organizations handle their batch data pipelines. Since then, it has become one of the most popular workflow management platforms in data engineering.

Written in Python, Apache Airflow is flexible and robust, and its user interface makes it easier to manage task workflows. If you want to learn more about it, this Apache Airflow tutorial covers the essentials.

What is Apache Airflow?

Apache Airflow is a platform for programmatically authoring, scheduling, and monitoring workflows in an organization. It is mainly designed to orchestrate and manage complex data pipelines. Initially, it was built to handle problems with long-running tasks and large scripts. It has since grown into a powerful data pipeline platform.

Airflow can be described as a platform that helps you define, monitor, and execute workflows. In simple words, a workflow is a sequence of steps that you take to accomplish a certain objective. Airflow is also a code-first platform, designed around the idea that data pipelines are best expressed as code.

Apache Airflow was built to be extensible: plugins let it interact with many common external systems, so you can tailor the platform to your own needs. With it, you can run thousands of different tasks each day, which streamlines workflow management as a whole.

Why Use Apache Airflow?

There are several reasons to use Apache Airflow, as listed below:

- It is an open-source platform, so you can download Airflow and begin using it immediately, either on your own or with your team.

- It is highly scalable: it can run on a single server or scale up to large deployments spanning many nodes.

- Airflow runs well in cloud environments, which gives you a wide choice of deployment options.

- It was developed to work with the standard architectures found in most software development environments, and it offers an array of customization options.

- Its large, active community makes it easy to find information and connect with peers.

- Airflow supports several methods of monitoring, making it easier for you to keep track of your tasks.

- Because pipelines are defined in code, you are free to write whatever code you want to execute at each step of the data pipeline.

Fundamentals of Apache Airflow

Moving forward, let’s explore the fundamentals of Apache Airflow and find out more about this platform.

#1. DAG in Airflow

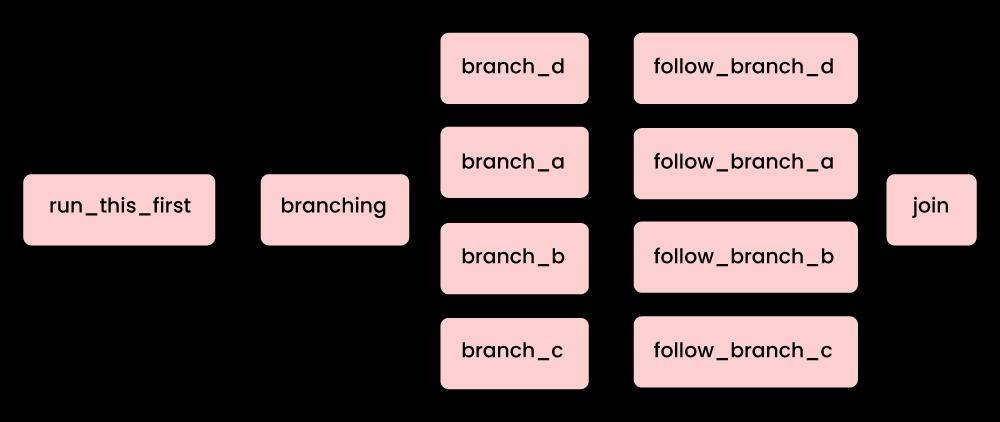

In Airflow, workflows are defined as Directed Acyclic Graphs (DAGs). A DAG is made up of the tasks that have to be executed, along with their dependencies. Every DAG represents a group of tasks that you want to run, and the Airflow user interface shows the relationships between those tasks. Let’s break down the term DAG to understand it better:

- Directed: Dependencies between tasks have a direction. If you have several tasks with dependencies, each of them has at least one specific upstream task or downstream task.

- Acyclic: Tasks are not allowed to depend on themselves, either directly or through a chain of other tasks. This rules out the possibility of an infinite loop.

- Graph: Tasks are arranged in a logical structure with precisely defined relationships to the other tasks.

One thing that you must note here is that a DAG is meant to define how the tasks will be executed and not what specific tasks will be doing.
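The "directed" and "acyclic" properties can be illustrated without Airflow at all. The sketch below uses Python's standard graphlib module; the task names and the helper functions are hypothetical, chosen only for illustration, and this is not how Airflow itself stores or validates a DAG.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical task names; each key maps to the set of upstream tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "notify": {"load"},
}


def execution_order(deps):
    """Return one valid run order in which every task follows its upstream tasks."""
    return list(TopologicalSorter(deps).static_order())


def is_acyclic(deps):
    """Return True if the dependency graph contains no cycle."""
    try:
        execution_order(deps)
        return True
    except CycleError:
        return False


order = execution_order(dependencies)
print(order)

# Making "extract" depend on "notify" closes a loop, so the graph is no longer a DAG.
cyclic = dict(dependencies, extract={"notify"})
print(is_acyclic(dependencies), is_acyclic(cyclic))
```

Because the graph only records ordering, it says nothing about what "extract" or "load" actually do, which mirrors the point that a DAG defines how tasks are executed rather than what they do.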

#2. DAG Run

Each execution of a DAG is known as a DAG run. Let’s assume that you have a DAG scheduled to run every hour. Every hourly instantiation of the DAG then creates a new DAG run. Several DAG runs of the same DAG can be running simultaneously.
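As a rough illustration of the hourly example, the sketch below lists the points in time for which an hourly schedule would create a DAG run. The function name and the dates are assumptions made for this example; it is a simplified model, not Airflow's scheduling code.

```python
from datetime import datetime, timedelta


def hourly_run_dates(start, end):
    """Yield one date per hour in the half-open window [start, end)."""
    current = start
    while current < end:
        yield current
        current += timedelta(hours=1)


# Three hours of schedule produce three separate DAG runs.
runs = list(hourly_run_dates(datetime(2025, 1, 1, 0), datetime(2025, 1, 1, 3)))
for run_date in runs:
    print(f"DAG run for {run_date.isoformat()}")
```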

#3. Task

Tasks vary in complexity, and each task is an instance of an operator. You can think of tasks as units of work, represented by nodes in the DAG. A task marks the work done at one step of the workflow, while the actual work it performs is defined by its operator.

#4. Airflow Operators

In Apache Airflow, operators define the work. An operator is much like a class or a template that helps execute a specific task. All operators derive from BaseOperator. You can find operators for a variety of basic tasks, like:

- PythonOperator

- BashOperator

- MySqlOperator

- EmailOperator

These operators are used to run Python functions, Bash commands, and MySQL statements, and to send email. In Apache Airflow, you can find three primary types of operators:

- Sensors: operators that keep running until a specific condition is fulfilled

- Action operators: operators that execute an action or request a different system to execute an action

- Transfer operators: operators that move data from one system to another
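To make the three operator types concrete, here is a toy model in plain Python. Every class name below (ToyOperator, ToySensor, ToyAction, ToyTransfer) is hypothetical and exists only for this sketch; Airflow's real operators derive from BaseOperator and have a richer interface.

```python
class ToyOperator:
    """Toy stand-in for a base operator: a named template for one unit of work."""

    def __init__(self, task_id):
        self.task_id = task_id

    def execute(self):
        raise NotImplementedError


class ToySensor(ToyOperator):
    """Checks a condition repeatedly and returns the attempt on which it held."""

    def __init__(self, task_id, condition, max_checks=5):
        super().__init__(task_id)
        self.condition = condition
        self.max_checks = max_checks

    def execute(self):
        for attempt in range(1, self.max_checks + 1):
            if self.condition():
                return attempt
        raise TimeoutError(f"{self.task_id}: condition never became true")


class ToyAction(ToyOperator):
    """Runs a callable and returns its result."""

    def __init__(self, task_id, func):
        super().__init__(task_id)
        self.func = func

    def execute(self):
        return self.func()


class ToyTransfer(ToyOperator):
    """Copies rows from a source list to a target list and returns the row count."""

    def __init__(self, task_id, source, target):
        super().__init__(task_id)
        self.source = source
        self.target = target

    def execute(self):
        self.target.extend(self.source)
        return len(self.source)


polls = iter([False, False, True])  # the condition holds on the third check
source_rows, target_rows = [1, 2, 3], []
operators = [
    ToySensor("wait_for_file", lambda: next(polls)),
    ToyAction("say_hello", lambda: "hello"),
    ToyTransfer("copy_rows", source_rows, target_rows),
]
results = [op.execute() for op in operators]
print(results, target_rows)
```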

#5. Hooks

Hooks enable Airflow to interface with third-party systems. With them, you can connect to external APIs and databases, such as Hive, MySQL, GCS, and many more. Hooks act as building blocks for operators. They do not hold sensitive information such as credentials themselves. Rather, that information is stored in Airflow's encrypted metadata database.

#6. Relationships

Airflow excels at defining complicated relationships between tasks. Let’s say that task T1 should be executed before task T2. There are several equivalent statements that you can use to define this relationship:

- T2 << T1

- T1 >> T2

- T1.set_downstream(T2)

- T2.set_upstream(T1)
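One way to see why these four statements are equivalent is to model the bitshift operators as thin wrappers around set_downstream and set_upstream. The Task class below is a hypothetical toy written for this sketch, not Airflow's implementation, although it uses the same method and operator names that appear in the list above.

```python
class Task:
    """Toy task that records the names of its upstream and downstream neighbours."""

    def __init__(self, name):
        self.name = name
        self.upstream = set()
        self.downstream = set()

    def set_downstream(self, other):
        # self runs before other
        self.downstream.add(other.name)
        other.upstream.add(self.name)

    def set_upstream(self, other):
        # other runs before self
        other.set_downstream(self)

    def __rshift__(self, other):  # self >> other
        self.set_downstream(other)
        return other

    def __lshift__(self, other):  # self << other
        self.set_upstream(other)
        return other


def edge_after(declare):
    """Create fresh T1 and T2, apply one declaration, and report the resulting edge."""
    t1, t2 = Task("T1"), Task("T2")
    declare(t1, t2)
    return sorted(t1.downstream), sorted(t2.upstream)


forms = [
    lambda t1, t2: t2 << t1,
    lambda t1, t2: t1 >> t2,
    lambda t1, t2: t1.set_downstream(t2),
    lambda t1, t2: t2.set_upstream(t1),
]
results = [edge_after(form) for form in forms]
print(results)
```

Returning the right-hand task from the operators is a design choice in this sketch that allows chains such as a >> b >> c.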

How does Apache Airflow Work?

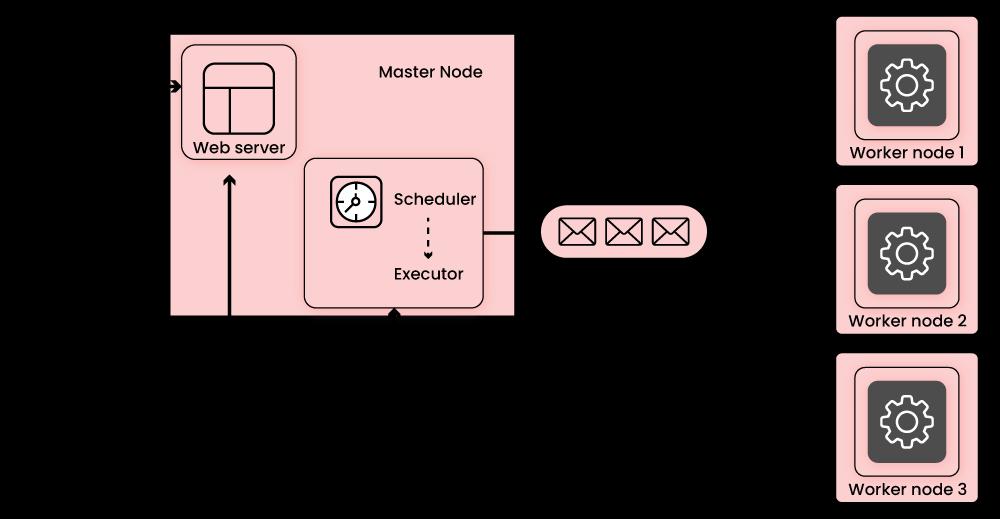

To understand how Apache Airflow works, you must understand the four major components that make up this scalable and robust workflow scheduling platform:

- Scheduler: The scheduler monitors all of the DAGs and their linked tasks. When the dependencies of a task are met, the scheduler initiates the task. It periodically checks active tasks to see whether they can be initiated.

- Web Server: This is the user interface of Airflow. It displays the status of jobs and lets the user interact with the database and read log files from remote file stores, such as Google Cloud Storage, S3, Microsoft Azure blobs, and more.

- Database: The state of the DAGs and their linked tasks is saved in the database so that the scheduler remembers the metadata. Airflow uses SQLAlchemy, an object-relational mapping (ORM) library, to connect to the metadata database. The scheduler evaluates all of the DAGs and stores important information, such as task instances, statistics from every run, and schedule intervals.

- Executor: The executor determines how the work will get done. There are several executors that you can use for different use cases, such as SequentialExecutor, LocalExecutor, CeleryExecutor, and KubernetesExecutor.

Airflow evaluates all of the DAGs in the background at a regular interval. This interval is set with the processor_poll_interval configuration option and defaults to one second. Once a DAG file is evaluated, DAG runs are created according to the scheduling parameters. Then, task instances are instantiated for the tasks that must be performed, and their status is set to SCHEDULED in the metadata database.

Next, the scheduler queries the database, retrieves the tasks that are in the SCHEDULED state, and hands them to the executor. The state of each of these tasks then changes to QUEUED. Workers pull the queued tasks from the queue, and when a task starts executing, its status changes to RUNNING.

Once a task is finished, it is marked as either successful or failed, and the scheduler updates the final status in the database.
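The lifecycle described above can be summarized as a small state machine. The sketch below is a simplified model under stated assumptions: the State enum, the ALLOWED table, and the helper functions are all hypothetical, and real Airflow task instances have additional states that are not covered here.

```python
from enum import Enum


class State(Enum):
    SCHEDULED = "scheduled"
    QUEUED = "queued"
    RUNNING = "running"
    SUCCESS = "success"
    FAILED = "failed"


# Allowed transitions in this simplified model of the lifecycle.
ALLOWED = {
    State.SCHEDULED: {State.QUEUED},
    State.QUEUED: {State.RUNNING},
    State.RUNNING: {State.SUCCESS, State.FAILED},
    State.SUCCESS: set(),
    State.FAILED: set(),
}


def advance(current, new):
    """Return the new state, or raise ValueError if the transition is not allowed."""
    if new not in ALLOWED[current]:
        raise ValueError(f"illegal transition: {current.name} -> {new.name}")
    return new


def run_task(work):
    """Walk one task through its lifecycle and return the names of the states visited."""
    history = [State.SCHEDULED]
    history.append(advance(history[-1], State.QUEUED))
    history.append(advance(history[-1], State.RUNNING))
    try:
        work()
        outcome = State.SUCCESS
    except Exception:
        outcome = State.FAILED
    history.append(advance(history[-1], outcome))
    return [state.name for state in history]


print(run_task(lambda: None))   # a task whose work completes
print(run_task(lambda: 1 / 0))  # a task whose work raises an error
```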

Apache Airflow Installation

Apache Airflow can be installed with pip through a simple pip install apache-airflow. You can either use a separate Python virtual environment or install it in the default Python environment.

If you wish to use the conda virtual environment, you will have to:

- Install miniconda

- Ensure that conda is on your path:

$ which conda

~/miniconda2/bin/conda

- Now, you can create a virtual environment from environment.yml:

$ conda env create -f environment.yml

- Then, simply activate the virtual environment:

$ source activate airflow-tutorial

Now, you will have a working Airflow installation. Alternatively, you can install Airflow manually by running:

$ pip install apache-airflow

While installing Apache Airflow, keep in mind that since the release of version 1.8.1, Airflow is packaged as apache-airflow. Make sure that you install extra packages under the new package name. For instance, if you have installed apache-airflow, do not use pip install airflow[dask], as you will end up installing the old version.

Basic CLI Commands

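Here are some common Airflow CLI commands. They are shown in the Airflow 1.x syntax, with the Airflow 2.x form in parentheses.

- To run the sleep task: airflow run tutorial sleep 2022-12-13 (2.x: airflow tasks run tutorial sleep 2022-12-13)

- To list tasks in the DAG tutorial: airflow list_tasks tutorial (2.x: airflow tasks list tutorial)

- To pause the DAG: airflow pause tutorial (2.x: airflow dags pause tutorial)

- To unpause the tutorial: airflow unpause tutorial (2.x: airflow dags unpause tutorial)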

Airflow User Interface

Now that the installation is complete, let’s have an overview of the Apache Airflow user interface. Here are some of the components that you will get in the interface:

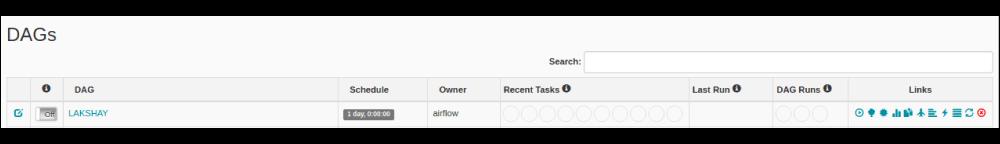

#1. DAGs View

It is the default view and lists all of the DAGs available in the system. It gives you a summary of each DAG, such as how many times it ran successfully, how many times it failed, the last execution time, and more.

#2. Graph View

In the graph view, you can visualize every step of the workflow along with its dependencies and current status. The status of each task is indicated by a color code.

#3. Tree View

The tree view also represents the DAG. If you think that your pipeline is taking a long time to execute, you can use this view to find out exactly which part is slow and work on it.

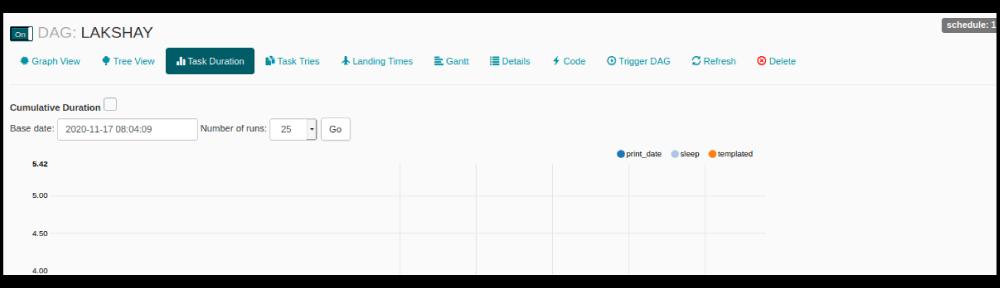

#4. Task Duration

In this view, you can compare the duration of your tasks across different runs. This helps you check whether changes made to optimize a task have actually improved its performance.

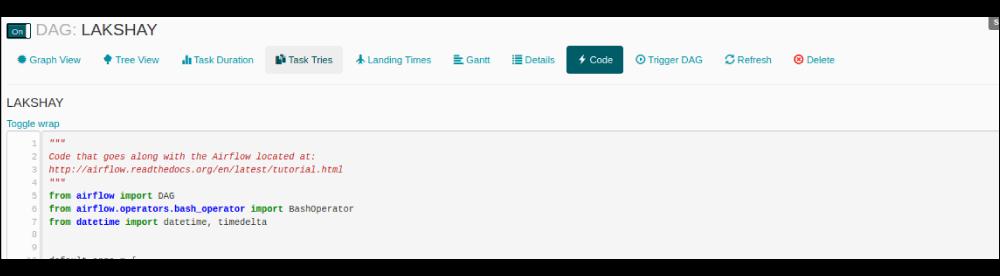

#5. Code

In this specific view, you can view the code quickly and see what was used to generate a DAG.

Conclusion

Now that you have understood the basics in this Apache Airflow tutorial, get started without any delay. Keep in mind that an ideal method to learn everything about this tool is to build with it. Once you have downloaded Airflow, you can either contribute to an open-source project on the internet or design one of your own.

Madhuri is a Senior Content Creator at MindMajix. She has written about a range of topics on various technologies, including Splunk, Tensorflow, Selenium, and CEH. She spends most of her time researching technology and startups. Connect with her via LinkedIn and Twitter.