Airflow Interview Questions

Task automation plays a crucial role in almost every industry, and it is one of the quickest ways to improve operational efficiency. However, many people struggle to see how their tasks could be automated, so they end up stuck in a loop of manual work, doing the same thing over and over.

This is even harder for professionals who deal with many different workflows, such as collecting data from several databases, preprocessing it, and then uploading it and reporting on it.

Apache Airflow is a tool that helps in exactly this situation. Whether you are a software engineer, data engineer, or data scientist, it is worth knowing. So, if you are looking for a job in this domain, this post covers some of the latest Airflow interview questions for both beginners and experienced professionals.

The Airflow interview questions and answers below (updated for 2024) are divided into two sections: one for freshers and one for experienced professionals.

Top Airflow Interview Questions

- What are the problems resolved by Airflow?

- Define the basic concepts in Airflow.

- How would you create a new DAG?

- Explain the design of workflow in Airflow.

- Define the types of Executors in Airflow.

- Can you tell us some Airflow dependencies?

- How can you use Airflow XComs in Jinja templates?

- Name some of the integrations available in Airflow.

- How can you define a workflow in Airflow?

- How would you write custom messages to the Airflow logs?

Airflow Interview Questions for Freshers

Are you new to Airflow and just starting to attend interviews? If so, these Airflow interview questions for beginners will help you a great deal.

1. How would you describe Airflow?

Apache Airflow is an open-source platform for workflow management. It is commonly used to orchestrate data pipelines, such as Extract, Transform, Load (ETL) workflows. Airflow started at Airbnb in October 2014 as a way to manage the company's increasingly complex workflows. It lets users programmatically author, schedule, and monitor workflows, and it comes with a built-in user interface.

2. What are the problems resolved by Airflow?

Some of the issues and problems resolved by Airflow include:

- Maintaining an audit trail of every completed task

- Scaling workflows as they grow

- Creating and maintaining relationships (dependencies) between tasks with ease

- Tracking and monitoring workflow execution through a built-in UI, and more

3. What are some of the features of Apache Airflow?

Some of the features of Apache Airflow include:

- Scheduling jobs and keeping a history of their status

- Running and managing executions through the web UI, including CRUD (create, read, update, delete) operations on DAGs

- Visualizing Directed Acyclic Graphs (DAGs) and the dependencies between their tasks

4. How does Apache Airflow act as a solution?

Airflow solves a variety of problems, such as:

- Failures: It can automatically retry a task when it fails.

- Monitoring: It shows whether each task and workflow run succeeded or failed.

- Dependencies: It handles two types of dependencies:

  - Data dependencies: a task waits until the upstream data it needs is available

  - Execution dependencies: a task runs only after the tasks it depends on have finished

- Scalability: It provides a centralized scheduler.

- Deployment: It makes deploying new changes easy.

- Processing historical data: It can backfill historical data by running workflows for past dates.
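In Airflow, retries are configured per task with arguments such as `retries` and `retry_delay`. The sketch below is a plain-Python model of that idea, not Airflow's implementation: `run_with_retries` and `flaky_task` are hypothetical names, and the delay is set to zero so the example runs instantly.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def run_with_retries(task: Callable[[], T], retries: int, retry_delay: float) -> T:
    """Call `task`, retrying up to `retries` extra times after a failure.

    Models the idea behind Airflow's `retries`/`retry_delay` task arguments:
    the task gets 1 + retries attempts in total.
    """
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= retries:
                raise  # out of retries: the task ends up failed
            attempt += 1
            time.sleep(retry_delay)  # wait before the next attempt


# Hypothetical task that fails twice before succeeding.
calls = {"count": 0}


def flaky_task() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise RuntimeError("temporary failure")
    return "done"


result = run_with_retries(flaky_task, retries=3, retry_delay=0)
print(result, calls["count"])  # done 3
```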


5. Define the basic concepts in Airflow.

Airflow has four basic concepts, such as:

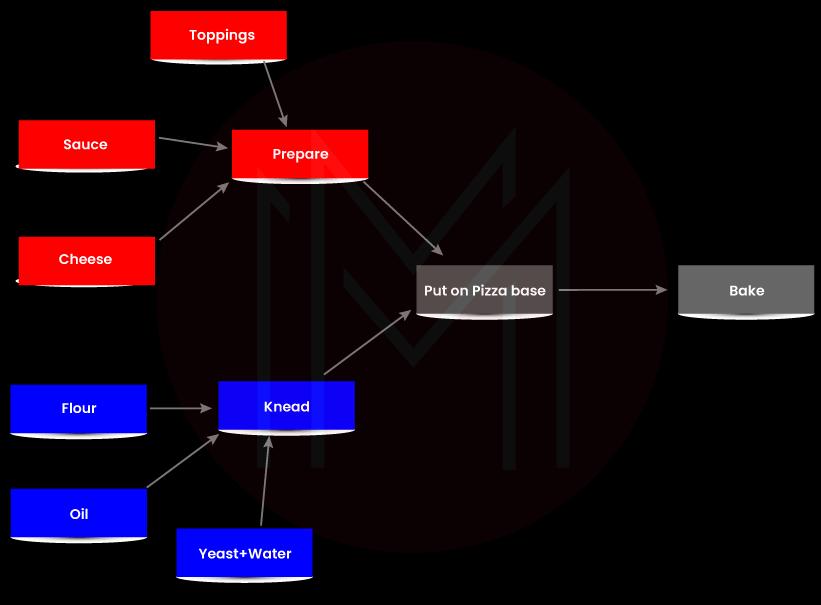

- DAG: It acts as the order’s description that is used for work

- Task Instance: It is a task that is assigned to a DAG

- Operator: This one is a Template that carries out the work

- Task: It is a parameterized instance
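To make the distinction concrete, here is a toy model in plain Python (not Airflow's real classes): an operator is a class, a task is a configured instance of it inside a DAG, and a task instance is one run of that task for a particular date, with its own state. `EchoOperator`, `ToyDag`, and `TaskInstance` are all hypothetical names.

```python
from dataclasses import dataclass, field
from datetime import date


class EchoOperator:
    """Operator: a reusable template describing a kind of work."""

    def __init__(self, task_id: str, message: str):
        # Task: the operator instantiated with concrete parameters.
        self.task_id = task_id
        self.message = message

    def execute(self, logical_date: date) -> str:
        return f"{self.message} for {logical_date.isoformat()}"


@dataclass
class ToyDag:
    """DAG: a set of tasks plus the order in which they should run."""

    dag_id: str
    tasks: dict = field(default_factory=dict)
    upstream: dict = field(default_factory=dict)

    def add(self, task: EchoOperator, after: tuple = ()) -> None:
        self.tasks[task.task_id] = task
        self.upstream[task.task_id] = set(after)


@dataclass
class TaskInstance:
    """Task instance: one run of a task for a specific date, with a state."""

    task: EchoOperator
    logical_date: date
    state: str = "scheduled"

    def run(self) -> str:
        output = self.task.execute(self.logical_date)
        self.state = "success"
        return output


dag = ToyDag("example")
dag.add(EchoOperator("extract", "extracting"))
dag.add(EchoOperator("load", "loading"), after=("extract",))

ti = TaskInstance(dag.tasks["load"], date(2024, 1, 1))
print(ti.run(), ti.state)  # loading for 2024-01-01 success
```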

6. Name some of the integrations available in Airflow.

Some of the integrations that you'll find in Airflow include:

- Apache Pig

- Amazon EMR

- Kubernetes

- Amazon S3

- AWS Glue

- Hadoop

- Azure Data Lake

7. What do you know about the command line?

The command line is used to run Apache Airflow. There are some significant commands that everybody should know, such as:

- Airflow run is used for running a task

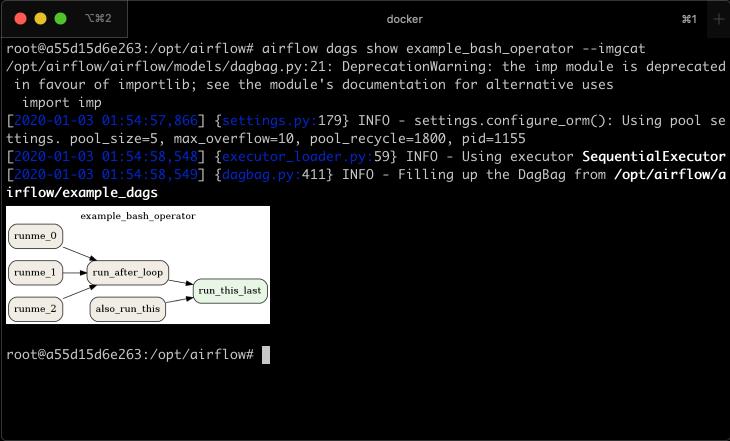

- Airflow show DAG is used for showcasing tasks and their dependencies

- Airflow task is used for debugging tasks

- Airflow Webserver is used for beginning the GUI

- Airflow backfill is used for running a specific part of DAG

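8. How would you create a new DAG?

Creating a new DAG involves two steps:

- Writing the DAG definition as Python code and placing the file in Airflow's DAGs folder

- Testing the code, for example by running individual tasks with `airflow tasks test`, before letting the scheduler run it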

9. What do you mean by Xcoms?

XComs (short for "cross-communications") are a mechanism that lets tasks talk to one another. By default, tasks are entirely isolated and may run on different machines. An XCom is identified by a key, along with the task_id and dag_id it came from.
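In real Airflow code, a task pushes a value with `ti.xcom_push(key=..., value=...)` (or simply returns it) and another task reads it with `ti.xcom_pull(task_ids=..., key=...)`. The sketch below is only a toy, in-memory model of that lookup, keyed by dag_id, task_id, and key. Airflow actually stores XComs in its metadata database and also scopes them to a DAG run; the `ToyXComStore` class and the table name are hypothetical.

```python
from typing import Any, Dict, Tuple


class ToyXComStore:
    """In-memory stand-in for Airflow's XCom storage (illustration only)."""

    def __init__(self) -> None:
        # (dag_id, task_id, key) -> value
        self._values: Dict[Tuple[str, str, str], Any] = {}

    def push(self, dag_id: str, task_id: str, key: str, value: Any) -> None:
        self._values[(dag_id, task_id, key)] = value

    def pull(self, dag_id: str, task_id: str, key: str = "return_value") -> Any:
        # "return_value" is the key Airflow uses for a task's returned value.
        # Returns None when nothing matches.
        return self._values.get((dag_id, task_id, key))


store = ToyXComStore()
# Task "extract" records the name of the table it wrote to...
store.push("etl", "extract", "table_name", "sales_2024")
# ...and task "load" reads it later, possibly on another machine.
print(store.pull("etl", "extract", "table_name"))  # sales_2024
```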

10. Define Jinja Templates.

Airflow uses Jinja templating to give pipeline authors a set of built-in parameters and macros, such as `{{ ds }}` for the logical date. A Jinja template is text containing variables and expressions that are filled in when the task runs.
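Airflow renders templated operator fields with Jinja just before a task runs. The sketch below does not use Jinja. It is a deliberately tiny stand-in that only replaces simple `{{ name }}` placeholders from a context dictionary, to show how variables are filled in at runtime. `render` is a hypothetical helper, and the query and date are made-up values.

```python
import re
from typing import Mapping

# Matches simple placeholders such as "{{ ds }}" or "{{table}}".
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, context: Mapping[str, object]) -> str:
    """Replace each {{ name }} with context[name]; unknown names raise KeyError."""
    return PLACEHOLDER.sub(lambda m: str(context[m.group(1)]), template)


# In Airflow, `ds` is the built-in variable for the logical date (YYYY-MM-DD).
query = "SELECT * FROM events WHERE event_date = '{{ ds }}'"
print(render(query, {"ds": "2024-01-01"}))
# SELECT * FROM events WHERE event_date = '2024-01-01'
```

Real Jinja also supports expressions, filters, and control structures, which this helper does not attempt.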

Airflow Interview Questions for Experienced

If you have been working with Airflow for a while and are thinking of switching jobs, these Airflow interview questions for experienced professionals will be useful during your preparation.

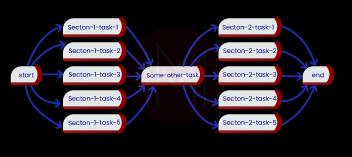

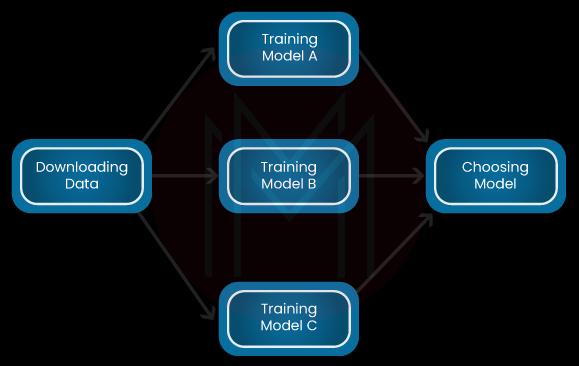

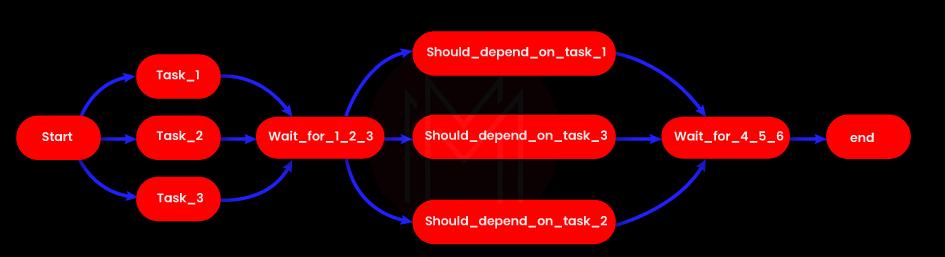

1. Explain the design of workflow in Airflow.

In Airflow, a workflow is designed as a Directed Acyclic Graph (DAG). When creating a workflow, think about how it can be divided into tasks that can run independently. The tasks are then combined into a graph to form a logical whole.

The overall logic of the workflow depends on the shape of the graph. An Airflow DAG can have multiple branches, and you can decide at run time which branches to follow and which to skip.
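Because the graph has no cycles, its tasks can always be arranged so that each task comes after its upstream tasks. The sketch below uses Python's standard `graphlib` module (Python 3.9+) to compute such an order for a hypothetical extract/transform/report workflow. Airflow's scheduler performs its own dependency checks, so this only illustrates the idea.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of tasks it depends on (its upstream tasks).
workflow = {
    "extract_orders": set(),
    "extract_users": set(),
    "transform": {"extract_orders", "extract_users"},
    "report": {"transform"},
}

# Any valid order places every task after all of its upstream tasks.
order = list(TopologicalSorter(workflow).static_order())
print(order)

# A cycle has no valid order, which is why workflows must be acyclic.
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
except CycleError:
    print("cycle detected")
```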

Also:

- Airflow can stop a workflow and later resume it from the last unfinished task.

- When designing a workflow, keep in mind that Airflow tasks may run more than once (for example, on retries or backfills). Every task should be independent and idempotent: running it several times should not cause unintended side effects.
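One common way to make a task safe to re-run is to have it overwrite the output for its own logical date instead of appending to it. The sketch below models this with a plain dictionary standing in for a date-partitioned table; the function names and data are hypothetical.

```python
from typing import Dict, List

# Stand-in for a table partitioned by date: partition key -> rows.
warehouse: Dict[str, List[int]] = {}


def load_non_idempotent(ds: str, rows: List[int]) -> None:
    # Appends: running twice for the same date duplicates the rows.
    warehouse.setdefault(ds, []).extend(rows)


def load_idempotent(ds: str, rows: List[int]) -> None:
    # Overwrites the partition for this date: re-runs give the same result.
    warehouse[ds] = list(rows)


for _ in range(2):  # simulate a retry of the same task run
    load_idempotent("2024-01-01", [1, 2, 3])
print(warehouse["2024-01-01"])  # [1, 2, 3]

for _ in range(2):
    load_non_idempotent("2024-01-02", [1, 2, 3])
print(warehouse["2024-01-02"])  # [1, 2, 3, 1, 2, 3]
```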

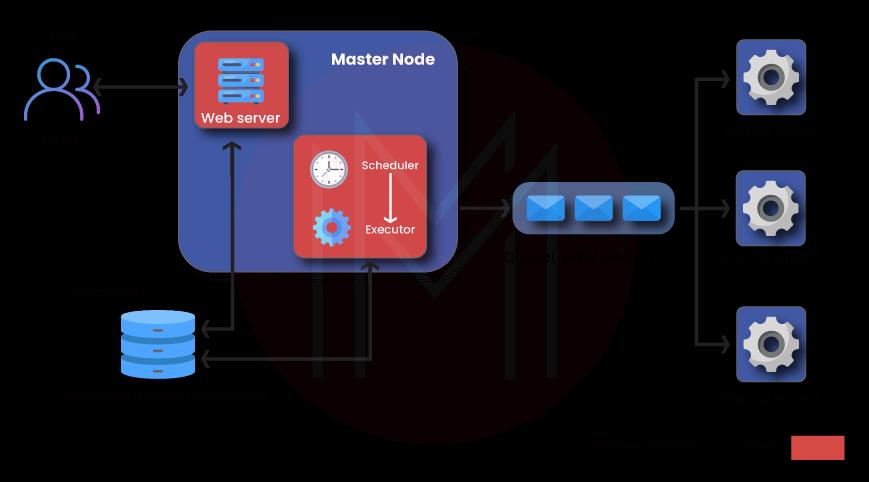

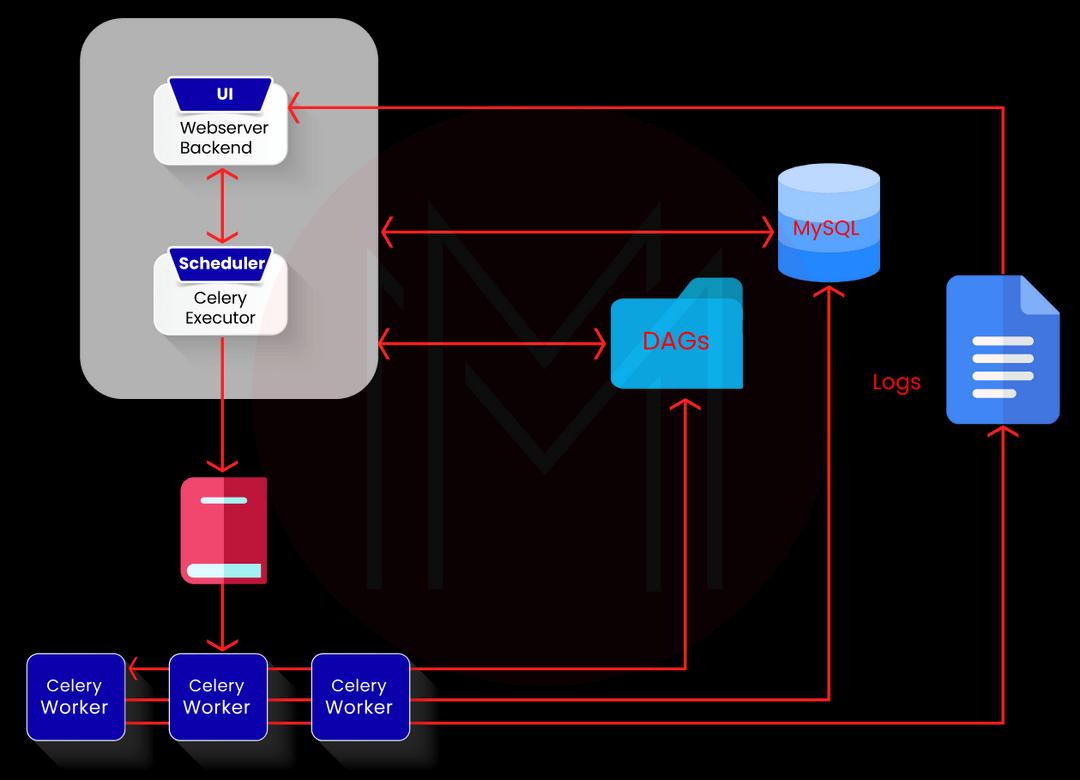

2. What do you know about the Airflow architecture and its components?

There are four primary Airflow components:

- Web Server

This is the Airflow UI, built on Flask. It gives an overview of the overall health of your DAGs and helps visualize the components and states of each DAG. For the Airflow setup, the web server also lets you manage configurations, roles, and users.

- Scheduler

Every n seconds, the scheduler goes through the DAGs and schedules the tasks that need to be executed. The scheduler also has an internal component called the Executor. As the name suggests, the Executor executes the tasks, while the scheduler orchestrates them. Airflow offers several executors, such as KubernetesExecutor, CeleryExecutor, LocalExecutor, and SequentialExecutor.

- Worker

Workers are responsible for running the tasks that the Executor hands to them.

- Metadata Database

Airflow stores its metadata in a SQL database (typically PostgreSQL or MySQL in production, and SQLite for local testing). It holds information about DAGs and their runs, along with other Airflow configuration such as connections, roles, and users. The webserver also reads this database to display the states and runs of DAGs.
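The division of labor between the scheduler and the executor can be sketched as a loop: on each heartbeat, the scheduler finds the tasks whose upstream tasks have all succeeded and hands them to the executor. This is a toy model under simplifying assumptions (a single DAG run, tasks that always succeed, no real timing), and `toy_scheduler` is a hypothetical name, not part of Airflow.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def toy_scheduler(
    dag: Dict[str, Set[str]], executor: Callable[[str], None]
) -> List[List[str]]:
    """Return the batches of tasks handed to the executor on each heartbeat."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()
    heartbeats: List[List[str]] = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose upstreams are done
        heartbeats.append(ready)
        for task_id in ready:
            executor(task_id)      # the executor (and its workers) run the task
            sorter.done(task_id)   # record success, unblocking downstream tasks
    return heartbeats


finished: List[str] = []
batches = toy_scheduler(
    {"extract": set(), "clean": {"extract"}, "stats": {"extract"}, "report": {"clean", "stats"}},
    executor=finished.append,
)
print(batches)  # [['extract'], ['clean', 'stats'], ['report']]
```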

3. Define the types of Executors in Airflow.

As mentioned above, Executors are the components that execute tasks. Airflow has several of them:

- SequentialExecutor

The SequentialExecutor runs only one task at a time, and the scheduler and the workers share the same machine.

- KubernetesExecutor

The KubernetesExecutor runs every task in its own Kubernetes pod. It spins up worker pods on demand, which makes efficient use of resources.

- LocalExecutor

The LocalExecutor is similar to the SequentialExecutor, except that it can run several tasks in parallel on the same machine.

- CeleryExecutor

Celery is a Python framework for running distributed, asynchronous tasks, and the CeleryExecutor has been part of Airflow for a long time. It uses a fixed number of workers that stay on standby, ready to pick up tasks as they become available.
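The practical difference between the SequentialExecutor and the LocalExecutor is whether independent tasks wait for each other. The sketch below imitates both with Python's `concurrent.futures`. It is an analogy only: Airflow's LocalExecutor runs tasks in separate processes, and `fake_task` and its 0.1-second duration are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def fake_task(name: str) -> str:
    time.sleep(0.1)  # pretend to do I/O-bound work
    return f"{name} done"


tasks = ["extract_a", "extract_b", "extract_c"]

# Sequential: one task at a time, like the SequentialExecutor.
start = time.perf_counter()
sequential: List[str] = [fake_task(t) for t in tasks]
sequential_secs = time.perf_counter() - start

# Parallel: independent tasks run side by side, like the LocalExecutor.
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=3) as pool:
    parallel: List[str] = list(pool.map(fake_task, tasks))  # keeps input order
parallel_secs = time.perf_counter() - start

print(sequential == parallel)  # True
print(f"sequential {sequential_secs:.2f}s, parallel {parallel_secs:.2f}s")
```

Both runs produce the same results; only the total wall-clock time differs.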

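4. What are the pros and cons of each Executor in Airflow?

Here are the pros and cons of the Executors in Airflow:

| Executor | Pros | Cons |
|---|---|---|
| SequentialExecutor | Simple, needs no extra setup, and works with SQLite | Runs only one task at a time; not suited to production |
| LocalExecutor | Runs several tasks in parallel; easy to set up | Limited to the resources of a single machine, which is also a single point of failure |
| CeleryExecutor | Scales horizontally across many worker machines | Needs a message broker (such as Redis or RabbitMQ) and more infrastructure; workers keep running even when idle |
| KubernetesExecutor | Each task runs in an isolated pod, and pods are created on demand, so resources are used efficiently | Requires a Kubernetes cluster; starting a pod for every task adds latency |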

5. How can you define a workflow in Airflow?

Workflows in Airflow are defined in Python files. The DAG class lets you create a Directed Acyclic Graph, which represents the workflow.

```python
from datetime import datetime

from airflow import DAG

args = {
    # Fixed start date (example value); Airflow recommends a static
    # start_date rather than a dynamic one such as "now".
    "start_date": datetime(2024, 1, 1),
}

dag = DAG(
    dag_id="bash_operator_example",
    default_args=args,
    schedule="* * * * *",  # cron expression: run every minute
    catchup=False,         # don't create runs for every missed minute since start_date
)
```

The `start_date` is the date from which the DAG can be scheduled, and `schedule` sets how often the workflow runs (this argument is called `schedule_interval` in Airflow versions before 2.4). The cron expression '* * * * *' means that the workflow runs every minute.
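To see what the five fields of '* * * * *' mean (minute, hour, day of month, month, day of week), here is a deliberately simplified cron matcher. `cron_matches` is a hypothetical helper for illustration: it understands only `*` and single numbers, whereas real cron expressions, which Airflow accepts, also allow ranges, lists, and steps.

```python
from datetime import datetime


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if `when` matches a 5-field cron expression.

    Simplified: each field must be "*" or a single integer.
    """
    fields = expr.split()
    if len(fields) != 5:
        raise ValueError("expected 5 fields: minute hour day month weekday")
    # Cron counts weekdays from Sunday = 0; Python's weekday() uses Monday = 0.
    cron_weekday = (when.weekday() + 1) % 7
    actual = [when.minute, when.hour, when.day, when.month, cron_weekday]
    return all(f == "*" or int(f) == value for f, value in zip(fields, actual))


t = datetime(2024, 1, 1, 2, 30)  # Monday, 1 January 2024, 02:30
print(cron_matches("* * * * *", t))   # True: every minute matches
print(cron_matches("30 2 * * *", t))  # True: daily at 02:30
print(cron_matches("30 2 * * 0", t))  # False: only on Sundays
```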


6. Can you tell us some Airflow dependencies?

On Debian-based systems, Airflow needs several system-level packages. Some of them are:

```bash
sudo apt-get install -y --no-install-recommends \
    freetds-bin \
    krb5-user \
    ldap-utils \
    libffi6 \
    libsasl2-2 \
    libsasl2-modules \
    locales \
    lsb-release \
    sasl2-bin \
    sqlite3
```

7. How can you restart the Airflow webserver?

To restart the webserver, stop the running webserver process and start it again. The following command starts the webserver on port 8080 and runs it in the background as a daemon:

```bash
airflow webserver -p 8080 -D
```

8. How can you run a bash script file?

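You can run a bash script file with the BashOperator:

```python
from airflow.operators.bash import BashOperator

# Command that calls the script. BashOperator runs commands in a temporary
# directory by default, so an absolute path to the script is more reliable
# than a relative one.
create_command = """
./scripts/create_file.sh
"""

t1 = BashOperator(
    task_id="create_file",
    bash_command=create_command,
    dag=dag,  # assumes a DAG object named `dag`, e.g. from the workflow example
)
```

9. How would you write custom messages to the Airflow logs?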

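You can write messages to a task's log from inside the task with Python's standard logging module. In Airflow 2, the task context is passed to the callable automatically, so the Airflow 1.x `provide_context=True` argument is no longer needed:

```python
import logging

from airflow.operators.python import PythonOperator

# Messages sent to the "airflow.task" logger end up in the task's log.
logger = logging.getLogger("airflow.task")


def print_params_fn(**context):
    # Airflow passes the task context (ds, ti, params, ...) as keyword arguments.
    logger.info("Task context: %s", context)


print_params = PythonOperator(
    task_id="print_params",
    python_callable=print_params_fn,
    dag=dag,  # assumes a DAG object named `dag` is already defined
)
```

10. How can you use Airflow XComs in Jinja templates?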

We can pull an XCom value inside any templated field by calling `task_instance.xcom_pull` in the template, for example in a SQL query:

```sql
SELECT * FROM {{ task_instance.xcom_pull(task_ids='foo', key='Table_Name') }}
```

Conclusion

With the right preparation material, cracking an interview becomes much easier. So, go through the Airflow interview questions above and sharpen your skills.
