Complexity is a critical challenge for any business, big or small. It can arise at any stage, whether in design, development, or production. Resolving that complexity with the right strategies and tools is vital to operational excellence: businesses gain speed, produce better results, and get closer to their full potential.

One key strategy for reducing complexity is to break a complex task into smaller, simpler tasks and then complete those tasks one by one or in parallel.

This is where AWS Batch comes in. It is one of the vital AWS services for cloud computing. AWS Batch applies batch processing to simplify complex workloads, gain speed, and produce results quickly.

This blog covers the basic principle behind AWS Batch, its vital features, use cases, and more.

What is AWS Batch?

AWS Batch is the batch processing service offered by AWS. It simplifies running high-volume workloads on AWS compute resources. In other words, you can plan, schedule, run, and scale batch computing workloads of any size with AWS Batch. It also launches, runs, and terminates compute resources for you. The compute resources include Amazon EC2 instances (On-Demand or Spot) and AWS Fargate.

With AWS Batch, a workload is split into small tasks, or batch jobs. AWS Batch can run these jobs in parallel across multiple Availability Zones in an AWS Region, which can reduce the total execution time considerably.

What is Batch Computing?

Before we dive into AWS Batch, let's learn a bit about batch computing.

Batch computing runs programs, or jobs, on one or more computers without human intervention. A job may depend on the completion of preceding jobs, the scheduling of jobs, the availability of inputs, and other parameters. In batch computing, you define input parameters through command-line arguments, scripts, and control files.
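As a minimal sketch of how a batch job can receive its inputs through command-line arguments, the Python script below parses two parameters and reports what it would process. The parameter names `--input` and `--chunk-size`, the file path, and the function names are assumptions made up for illustration.

```python
import argparse


def parse_job_args(argv):
    """Parse the inputs a batch job receives on its command line."""
    parser = argparse.ArgumentParser(description="Hypothetical batch job")
    parser.add_argument("--input", required=True, help="path of the input file")
    parser.add_argument("--chunk-size", type=int, default=1000,
                        help="number of records to process per step")
    return parser.parse_args(argv)


def describe_job(args):
    """Stand-in for real work: describe what the job would process."""
    return f"processing {args.input} in chunks of {args.chunk_size}"


# A scheduler would pass these arguments; nobody has to type them by hand.
args = parse_job_args(["--input", "data/day1.csv", "--chunk-size", "500"])
print(describe_job(args))  # prints "processing data/day1.csv in chunks of 500"
```

Because every input arrives as an argument, the same script can be started by a scheduler any number of times with different inputs.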

Why AWS Batch?

AWS Batch is an excellent tool for batch processing jobs in the AWS Cloud. Here are the reasons:

- AWS Batch simplifies configuring and managing compute resources, which removes much of the complexity of running workloads.

- It automates the provisioning of compute resources and optimizes workload distribution, regardless of the quantity and scale of workloads.

- It eliminates the need to install and operate separate batch-processing software in the AWS Cloud.

- No human intervention is required to run batch jobs, so employees can focus on other vital operations.

- Additionally, AWS Batch scales compute resources, delivers results quickly, and reduces costs.

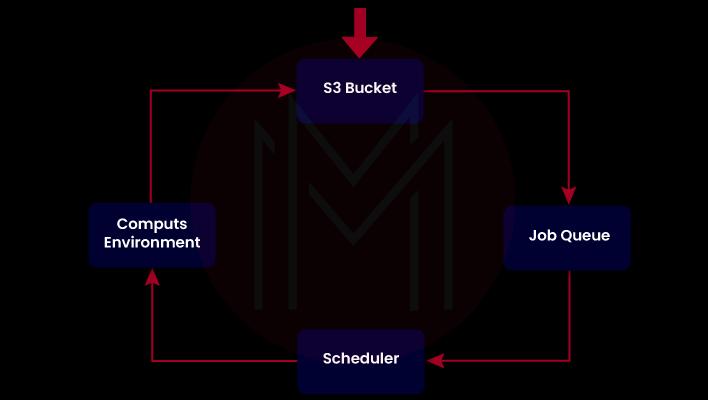

How to Implement AWS Batch?

Let's see how to use AWS Batch to run workloads, step by step:

- First, create an AWS Batch compute environment in a new or existing VPC in the AWS Cloud.

- Build job definitions and create multiple job queues based on priorities.

- Then, package the code for your batch jobs, specify the dependencies, and submit the jobs to job queues using the AWS Management Console, the CLI, or the SDKs.

- Finally, run the batch jobs using Docker container images or workflow engines. Note that you can pull Docker images from container registries.
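The submission step can be sketched with the AWS SDK for Python. The snippet below is only an illustration: it builds the request for the Batch `SubmitJob` operation and defines a helper that sends it through a client behaving like `boto3.client("batch")`. The job, queue, and job definition names are hypothetical, and the queue and job definition are assumed to exist already.

```python
def build_submit_request(job_name, job_queue, job_definition):
    """Build the keyword arguments for the Batch SubmitJob call."""
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
    }


def submit(client, request):
    """Submit the job and return its ID.

    `client` is expected to behave like boto3.client("batch").
    """
    response = client.submit_job(**request)
    return response["jobId"]


# Hypothetical names; the queue and job definition must already exist.
request = build_submit_request(
    job_name="nightly-report",
    job_queue="high-priority-queue",
    job_definition="report-job:1",
)
print(request)
```

With AWS credentials configured, calling `submit(boto3.client("batch"), request)` would send the request; the sketch stops short of that so it runs without an AWS account.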

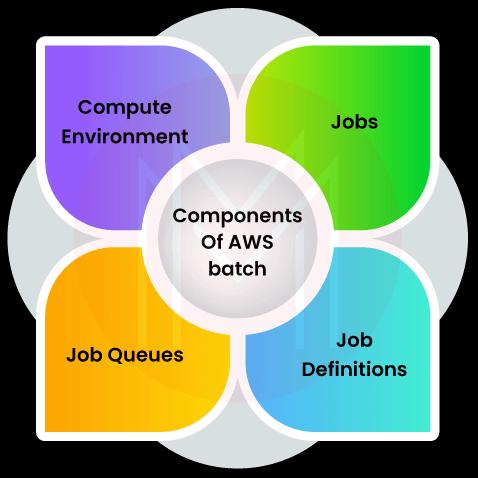

Components of AWS Batch

AWS Batch has a few crucial components. Let's discuss them in detail:

- Compute Environment

An AWS Batch compute environment is a set of compute resources used to run batch jobs. There are two types of compute environments in AWS Batch: managed and unmanaged.

In a managed compute environment, AWS provisions and manages the compute resources, whereas in an unmanaged compute environment, the customer manages them.

For a managed compute environment, you specify the desired compute type for running workloads. For example, you can choose between Fargate and EC2, and you can specify Spot Instances as well.
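A managed compute environment can be described as a request to the Batch `CreateComputeEnvironment` operation. The sketch below only builds that request as a Python dictionary; the environment name, subnet ID, and security group ID are placeholders, and the helper function is an assumption made for illustration.

```python
def managed_compute_environment(name, compute_type, max_vcpus, subnets,
                                security_group_ids):
    """Build a CreateComputeEnvironment request for a managed environment."""
    allowed = {"EC2", "SPOT", "FARGATE", "FARGATE_SPOT"}
    if compute_type not in allowed:
        raise ValueError(f"compute_type must be one of {sorted(allowed)}")
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",  # AWS provisions and manages the resources
        "state": "ENABLED",
        "computeResources": {
            "type": compute_type,
            "maxvCpus": max_vcpus,
            "subnets": subnets,
            "securityGroupIds": security_group_ids,
        },
    }


# Placeholder IDs; replace them with values from your own VPC.
request = managed_compute_environment(
    name="demo-fargate-env",
    compute_type="FARGATE",
    max_vcpus=16,
    subnets=["subnet-EXAMPLE"],
    security_group_ids=["sg-EXAMPLE"],
)
print(request["computeResources"]["type"])  # prints "FARGATE"
```

EC2 and Spot environments need additional fields, such as the allowed instance types and an instance role, so this sketch uses Fargate to stay short.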

- Job Definitions

In AWS Batch, jobs are defined before they are submitted to job queues. A job definition includes details of the batch job such as Docker properties, associated variables, CPU and memory requirements, compute resource requirements, and other essential information. All this information helps optimize job execution. Besides, AWS Batch allows you to override many of the values in a job definition when you submit a job.

Essentially, job definitions describe how batch jobs should run on compute resources. Simply put, they act as the blueprint for running batch jobs.
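To make the blueprint idea concrete, here is a hedged sketch of a container job definition as it could be passed to the Batch `RegisterJobDefinition` operation, together with an override that changes memory for a single submission. The image name, job name, and helper functions are hypothetical.

```python
def job_definition_request(name, image, vcpus, memory_mib, command):
    """Build a RegisterJobDefinition request for a container job."""
    return {
        "jobDefinitionName": name,
        "type": "container",
        "containerProperties": {
            "image": image,
            "command": command,
            # The API expects resource values as strings.
            "resourceRequirements": [
                {"type": "VCPU", "value": str(vcpus)},
                {"type": "MEMORY", "value": str(memory_mib)},
            ],
        },
    }


def memory_override(memory_mib):
    """Build containerOverrides that change memory for one submission."""
    return {
        "resourceRequirements": [{"type": "MEMORY", "value": str(memory_mib)}]
    }


definition = job_definition_request(
    name="report-job",
    image="my-registry/report:latest",  # hypothetical image
    vcpus=1,
    memory_mib=2048,
    command=["python", "report.py"],
)
print(definition["containerProperties"]["resourceRequirements"])
print(memory_override(4096))
```

The override dictionary would be passed as `containerOverrides` when submitting a job, leaving the registered definition unchanged.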

- Job Queues

Another significant component of AWS Batch is the job queue. Once you have created a job definition, you can submit jobs based on it to a job queue. You can assign each job queue a priority. Jobs wait in their queue until the job scheduler schedules them, and jobs in higher-priority queues are scheduled first.

For example, time-sensitive jobs usually go to a high-priority queue so that they run first. Low-priority jobs can run at any time, typically when compute resources are cheaper.
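The priority idea can be illustrated with two queue requests for the Batch `CreateJobQueue` operation. This is a sketch with hypothetical queue and environment names; it only builds the requests and sorts them the way the scheduler is documented to evaluate queues that share a compute environment, with the higher priority value first.

```python
def job_queue_request(name, priority, compute_environment):
    """Build a CreateJobQueue request attached to one compute environment."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment},
        ],
    }


queues = [
    job_queue_request("low-priority-queue", 1, "demo-env"),
    job_queue_request("high-priority-queue", 10, "demo-env"),
]

# Queues with a higher priority value are evaluated first.
evaluation_order = [
    q["jobQueueName"]
    for q in sorted(queues, key=lambda q: q["priority"], reverse=True)
]
print(evaluation_order)  # prints ['high-priority-queue', 'low-priority-queue']
```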

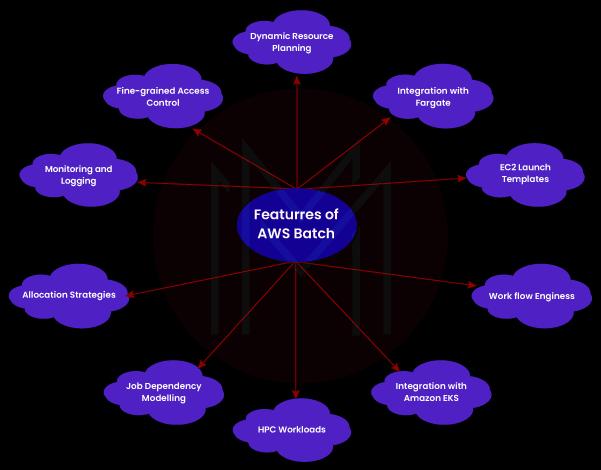

Features of AWS Batch

AWS Batch comes with many useful features. Let's have a look at them below:

- Dynamic Resource Provisioning

In AWS Batch, you only need to set up a compute environment, a job queue, and job definitions. After that, you don't need to manage individual compute resources in the environment, because AWS Batch provisions and scales them automatically.

- Integration with Fargate

With Fargate, each batch job uses only the amount of CPU and memory it requests. As a result, you can optimize the use of compute resources significantly. Fargate also isolates the compute resources of every job, which improves security.

- EC2 Launch Templates

With AWS Batch, you can customize compute resources using EC2 launch templates. AWS Batch then scales EC2 instances that match your requirements. You can also add storage volumes, choose network interfaces, and configure permissions through a template. Above all, launch templates reduce the number of steps needed to configure batch environments.

- Workflow Engines

AWS Batch integrates with open-source workflow engines such as Pegasus WMS, Nextflow, Luigi, Apache Airflow, and many others. Moreover, you can model batch computing pipelines using workflow languages.

- Integration with Amazon EKS

AWS Batch can run batch jobs on Amazon EKS clusters. You can attach job queues to an EKS-backed compute environment, and AWS Batch scales the Kubernetes nodes and places pods on them.

- HPC Workloads

Simply put, you can run tightly coupled high-performance computing (HPC) workloads with AWS Batch because it supports multi-node parallel jobs. AWS Batch also works with Elastic Fabric Adapter (EFA), a network interface for applications that demand intensive inter-node communication.
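As a rough sketch, a multi-node parallel job is registered with the job definition type `multinode` and a `nodeProperties` section. The helper below builds such a request for the Batch `RegisterJobDefinition` operation; the job name, image, command, and resource sizes are hypothetical values chosen for illustration.

```python
def multinode_job_definition(name, image, num_nodes, vcpus, memory_mib, command):
    """Build a RegisterJobDefinition request for a multi-node parallel job."""
    return {
        "jobDefinitionName": name,
        "type": "multinode",
        "nodeProperties": {
            "numNodes": num_nodes,
            "mainNode": 0,  # index of the node that coordinates the job
            "nodeRangeProperties": [
                {
                    # One range covering every node, from index 0 to the last.
                    "targetNodes": f"0:{num_nodes - 1}",
                    "container": {
                        "image": image,
                        "command": command,
                        "resourceRequirements": [
                            {"type": "VCPU", "value": str(vcpus)},
                            {"type": "MEMORY", "value": str(memory_mib)},
                        ],
                    },
                }
            ],
        },
    }


definition = multinode_job_definition(
    name="simulation-job",
    image="my-registry/simulation:latest",  # hypothetical image
    num_nodes=4,
    vcpus=8,
    memory_mib=16384,
    command=["./run_simulation.sh"],
)
print(definition["nodeProperties"]["nodeRangeProperties"][0]["targetNodes"])  # prints "0:3"
```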

- Job-dependency Modeling

AWS Batch lets you define dependencies between jobs. Consider a workload that consists of three stages, each requiring different types of resources. You can create a separate batch job for each stage and declare which jobs must finish before the next one starts, whatever the degree of dependency between the stages.
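A three-stage chain like this can be sketched with the `dependsOn` field of the Batch `SubmitJob` operation. In the snippet below, `OfflineClient` is a stand-in written for this example so that the code runs without an AWS account; the stage names, queue name, and job definition names are hypothetical.

```python
def submit_stages(client, job_queue, stages):
    """Submit stages in order; each depends on the job submitted before it.

    `client` is expected to behave like boto3.client("batch").
    `stages` is a list of (job_name, job_definition) pairs.
    """
    job_ids = []
    for job_name, job_definition in stages:
        request = {
            "jobName": job_name,
            "jobQueue": job_queue,
            "jobDefinition": job_definition,
        }
        if job_ids:
            # Start only after the previous stage has completed.
            request["dependsOn"] = [{"jobId": job_ids[-1]}]
        job_ids.append(client.submit_job(**request)["jobId"])
    return job_ids


class OfflineClient:
    """Stand-in that records requests so the sketch runs without AWS."""

    def __init__(self):
        self.requests = []

    def submit_job(self, **request):
        self.requests.append(request)
        return {"jobName": request["jobName"],
                "jobId": f"job-{len(self.requests)}"}


client = OfflineClient()
ids = submit_stages(client, "demo-queue", [
    ("extract", "extract-def"),
    ("transform", "transform-def"),
    ("load", "load-def"),
])
print(ids)  # prints ['job-1', 'job-2', 'job-3']
print(client.requests[2]["dependsOn"])  # prints [{'jobId': 'job-2'}]
```

Because each stage has its own job definition, every stage can request different CPU and memory while the chain still runs in order.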

- Allocation Strategies

AWS Batch offers three strategies for allocating compute resources to jobs:

- Best-fit

- Best-fit progressive

- Spot-capacity optimized

With the best-fit strategy, AWS Batch selects the instance type that best fits the job requirements, preferring the lowest-cost one. However, it does not fall back to other instance types when the best-fitting type is unavailable, so jobs must wait until capacity of that type frees up.

With the best-fit progressive strategy, AWS Batch can add other instance types that are large enough for the jobs when the preferred type is not available.

With the spot-capacity optimized strategy, AWS Batch selects Spot Instance types that meet the job requirements and are less likely to be interrupted.
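The idea behind a best-fit choice can be shown with a toy selection function. This is not the algorithm AWS Batch runs internally; it is a simplified illustration that picks the cheapest instance type large enough for a job. The catalog, its type names, and its prices are invented for the example.

```python
def best_fit(instance_types, vcpus_needed, memory_needed_gib):
    """Return the cheapest instance type that satisfies the job, or None."""
    candidates = [
        t for t in instance_types
        if t["vcpus"] >= vcpus_needed and t["memory_gib"] >= memory_needed_gib
    ]
    return min(candidates, key=lambda t: t["hourly_price"], default=None)


# Invented catalog; names and prices are not real AWS values.
catalog = [
    {"name": "small", "vcpus": 2, "memory_gib": 4, "hourly_price": 0.05},
    {"name": "medium", "vcpus": 4, "memory_gib": 16, "hourly_price": 0.20},
    {"name": "large", "vcpus": 16, "memory_gib": 64, "hourly_price": 0.80},
]

choice = best_fit(catalog, vcpus_needed=4, memory_needed_gib=8)
print(choice["name"])  # prints "medium"
```

In a real compute environment you do not implement this yourself; you select a strategy through the `allocationStrategy` setting of the compute resources.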

- Integrated Monitoring and Logging

You can view crucial operational metrics in the AWS Management Console. They include the compute capacity of resources as well as metrics for batch jobs at different stages. Job logs are available in the console and are also written to Amazon CloudWatch Logs.

- Fine-grained Access Control

Access control is yet another feature of AWS Batch. AWS Batch uses AWS Identity and Access Management (IAM) to control and monitor the compute resources used for running batch jobs. This includes defining access policies for different users.

For instance, administrators can be granted access to any AWS Batch API operation, while developers get limited permissions to configure compute environments and register jobs. End users can be restricted to only the permissions needed to submit and delete jobs.
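As an illustration of such a policy, the sketch below builds an IAM policy document for a developer who may configure compute environments and register job definitions but nothing else. The function name is hypothetical and the choice of actions is an assumption about what a developer role needs; a production policy would usually narrow `Resource` to specific ARNs.

```python
import json


def developer_policy():
    """Build an IAM policy limited to environment and job definition setup."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "batch:CreateComputeEnvironment",
                    "batch:UpdateComputeEnvironment",
                    "batch:DescribeComputeEnvironments",
                    "batch:RegisterJobDefinition",
                    "batch:DescribeJobDefinitions",
                ],
                # Kept broad for brevity; restrict to ARNs in practice.
                "Resource": "*",
            }
        ],
    }


print(json.dumps(developer_policy(), indent=2))
```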

Use Cases of AWS Batch

Many sectors can benefit from AWS Batch. Here are some of the significant use cases, one by one.

- Finance

With AWS Batch, you can perform financial analysis by batch processing high volumes of financial data. A typical example is post-trade analysis, which covers daily transaction costs, market performance, completion reports, and so on. You can automate this analysis with AWS Batch, which helps you predict business risks and make informed decisions to boost business performance.

- Life Science

Researchers can quickly search libraries of molecules with AWS Batch. This helps them gain a deep understanding of biochemical processes, which in turn allows them to design efficient drugs.

Generally, researchers generate raw files through primary analysis of genomic sequences. Then, they use AWS Batch to complete the secondary analysis. Here, AWS Batch mainly helps reduce errors caused by incorrect alignment between reference and sample data.

- Digital Media

AWS Batch plays a pivotal role in digital media. It offers tools for automating content rendering jobs, which keeps human intervention in those jobs to a minimum. For example, AWS Batch speeds up batch transcoding workloads with automated workflows.

AWS Batch also accelerates content creation and automates workflows in asynchronous digital media processing, which reduces manual intervention there as well.

Apart from the above, AWS Batch supports running disparate but dependent jobs at different stages of batch processing, because it handles both execution dependencies and resource scheduling. With AWS Batch, you can compile and process files, video content, graphics, and more.

What is the Pricing of AWS Batch?

Now, the question is, what does AWS Batch cost for computing workloads?

There is no additional charge for using AWS Batch itself. We only pay for the AWS resources we create to run batch jobs, such as EC2 instances, Spot Instances, and Fargate. Because the underlying compute is typically billed per second, we pay for instances only while they are needed.
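The effect of per-second billing can be worked through with simple arithmetic. The sketch below is an estimate only: the hourly rate is a hypothetical figure, and the 60-second minimum charge is an assumption that holds in many but not all cases, so check the current pricing pages before relying on it.

```python
def compute_cost(hourly_rate, runtime_seconds, minimum_seconds=60):
    """Estimate cost under per-second billing with a minimum charge."""
    billed_seconds = max(runtime_seconds, minimum_seconds)
    return hourly_rate * billed_seconds / 3600


# Hypothetical rate of $0.10 per hour for a job that runs 15 minutes.
cost = compute_cost(hourly_rate=0.10, runtime_seconds=900)
print(f"${cost:.4f}")  # prints "$0.0250"
```

Under hourly billing the same 15-minute job would be charged for a full hour, which is four times as much in this example.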

Benefits of AWS Batch

There are many benefits to using AWS Batch in cloud computing. Let's take a look at the list given below.

- You can run any volume of batch computing jobs without installing any batch-processing software.

- You can prioritize the execution of jobs based on business needs.

- AWS Batch scales compute resources automatically.

- It speeds up job execution and reduces the need for manual intervention.

- On top of all this, you can increase efficiency and reduce costs by optimizing compute resources and workload distribution.

Conclusion

On a final note, AWS Batch helps you get better results by scheduling and running work on compute resources efficiently. You can apply batch processing to break complex workloads into simple jobs and accelerate their execution. AWS Batch automates the provisioning and scaling of compute resources. Above all, it handles dependencies between different tasks efficiently, thereby increasing the speed of job execution and boosting overall performance.

Madhuri is a Senior Content Creator at MindMajix. She has written about a range of topics on various technologies, including Splunk, TensorFlow, Selenium, and CEH. She spends most of her time researching technology and startups.