Often described as the world's most customer-centric company, Amazon was founded by Jeff Bezos on July 5, 1994. It is an American multinational company that operates in a variety of fields, such as e-commerce, digital streaming, cloud computing, artificial intelligence, and more.

Amazon is best known for disrupting entire industries through massive scale and technological innovation. It runs one of the world's largest online marketplaces, the largest cloud computing platform (AWS), and the Alexa AI assistant. The company employs more than 600,000 people across the globe. It is the largest internet company by revenue and the second-largest private employer in the US, and its brand is among the most valuable in the world.

Given the range of services Amazon offers, it is no surprise that so many people want to work there. If you are one of them, MindMajix has compiled a list of the latest top Amazon interview questions for 2024. Read through this article to learn what goes on behind Amazon interviews and how to crack one.

- Amazon Interview Process

- Amazon Technical Interview Questions

- FAQs

- Leadership Principles

- Tips

- Conclusion

Amazon Interview Process

Recruiter Connect

One of the best and easiest ways to get noticed by Amazon recruiters is to maintain a strong LinkedIn profile and message recruiters there. You can also apply directly through Amazon's job portal. If you have a referral from a current Amazon employee, the process can become a bit easier.

Interview Rounds

Along with an initial coding test, Amazon typically conducts up to four interview rounds. The coding test consists of Data Structure and Algorithm (DS/Algo) problems. The first round evaluates your behavior and computer science knowledge, while the remaining three focus on DS/Algo.

HR Round (1 Round)

In this round, you will be asked behavioral questions and questions on computer science theory. The questions may probe your experience at previous organizations, including conflicts or issues you faced with managers or colleagues.

Data Structures and Algorithms Rounds (3 Rounds)

In these rounds, you will be asked DS/Algo problems and may have to write code. You may also face a few behavioral questions. The problems range from easy to hard, but they are not the only deciding factor in hiring: Amazon's leadership principles also come into play.

After Interviews

Once the interview rounds are completed, the recruiter will get in touch with you to tell you the verdict.

Hired

Once you and the team are ready to move forward, you will receive an offer letter confirming your job at Amazon.

Top 10 Amazon Frequently Asked Questions

- What do you mean by ‘pass’ in Python?

- Differentiate Packages and Modules in Python.

- How can we split a string in Java?

- What do you understand by denormalization?

- How can you create a stored procedure in SQL?

- What do you mean by linear data structure?

- Define Tree traversal.

- Briefly describe EDA.

- Compare Data Profiling and Data Mining.

- Compare Supervised and Unsupervised Learning.

Amazon Technical Interview Questions

Amazon Python Interview Questions

1. What exactly is Python?

Python is a high-level, general-purpose programming language. With the right tools and libraries, we can use it to build applications for a wide range of real-world problems quickly.

Python is an interpreted language with built-in support for modules, exception handling, threads, and automatic memory management.

2. What is the role of the ‘is’ operator in Python?

We use the ‘is’ operator to check whether two variables refer to the same object. In other words, it compares the identity of objects rather than their values.

The expression returns True if both variables refer to the same object and False otherwise.

Two distinct objects can hold equal values and still live at different memory locations. In that case, ‘is’ returns False even though == returns True.
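Here is a minimal sketch contrasting ‘is’ (identity) with == (equality). The variable names are illustrative only:

```python
a = [1, 2, 3]
b = a          # b is bound to the very same list object as a
c = [1, 2, 3]  # c is a separate list that happens to hold equal values

print(a is b)          # True: same object
print(a is c)          # False: different objects
print(a == c)          # True: equal contents
print(id(a) == id(b))  # True: identical identity
```

This is why ‘is’ is mainly idiomatic for singletons such as None (x is None). For comparing values, use ==.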

3. Outline the different scopes used in Python.

A scope is the region of a program in which a name (a variable, function, or other object) is accessible.

Python resolves a name by searching the following scopes in order, often summarized as LEGB:

Local Scope: It holds the names defined inside the current function.

Enclosing Scope: It holds the names of any enclosing function, when functions are nested.

Global (Module-level) Scope: It holds the names defined at the top level of the current module.

Built-in (Outermost) Scope: It holds Python's built-in names, such as len and print, which are available everywhere in a program.
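The small sketch below shows how each scope resolves the name x and how len falls back to the built-in scope. The function names are hypothetical:

```python
x = "global"  # global (module-level) scope


def outer():
    x = "enclosing"  # enclosing scope as seen from inner()

    def inner():
        x = "local"  # local scope of inner()
        return x

    return inner(), x


def builtin_lookup():
    # len is not defined locally, in an enclosing function, or globally,
    # so Python finds it in the built-in scope
    return len("abc")


print(outer())           # ('local', 'enclosing')
print(x)                 # global
print(builtin_lookup())  # 3
```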

4. What do you mean by ‘pass’ in Python?

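pass is a keyword that represents a null operation: nothing happens when it executes. We use it as a placeholder wherever Python's syntax requires a statement but no action is needed, such as an empty function, class, or loop body.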
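A minimal sketch of pass in the three common places it appears. The names are illustrative:

```python
class PlaceholderError(Exception):
    pass  # no extra behavior yet; the class body still needs a statement


def not_implemented_yet():
    pass  # empty function body; calling it returns None


odd_numbers = []
for i in range(5):
    if i % 2 == 0:
        pass  # deliberately do nothing for even numbers
    else:
        odd_numbers.append(i)

print(odd_numbers)  # [1, 3]
```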

5. What do you understand about ‘docstring’ in Python?

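Docstring is short for documentation string. It is a string literal, usually enclosed in triple quotes and often spanning multiple lines. It is placed as the first statement of a module, class, function, or method to describe what that object does. Python stores it in the object's __doc__ attribute, which tools such as help() read.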

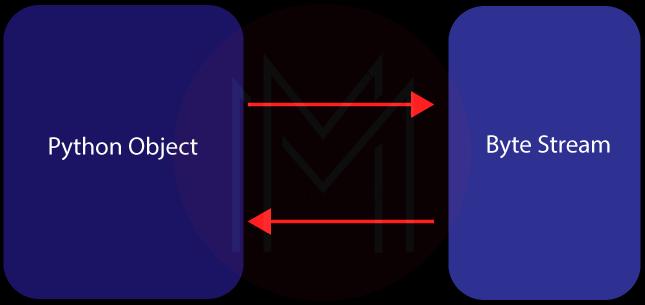
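A short sketch showing that the docstring is attached to the function object. The function is a hypothetical example:

```python
def rectangle_area(width, height):
    """Return the area of a rectangle.

    Both width and height are expected to be non-negative numbers.
    """
    return width * height


# The docstring is stored on the function object itself
print(rectangle_area.__doc__.splitlines()[0])  # Return the area of a rectangle.
```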

6. State the difference between Pickling and Unpickling.

Pickling serializes a Python object into a byte stream. pickle.dump() writes the serialized object to a file, and pickle.dumps() returns it as a bytes object.

Unpickling deserializes the byte stream, converting it back into a Python object. pickle.load() reads from a file, and pickle.loads() reads from a bytes object.

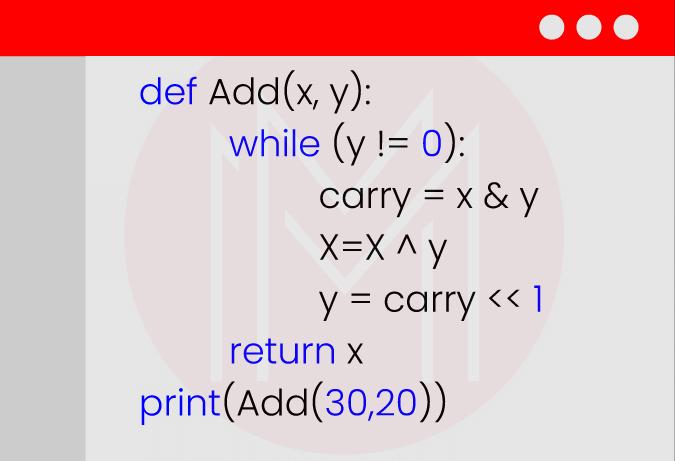
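A minimal round-trip sketch using both the in-memory and the file-based functions. The sample dictionary and file name are hypothetical:

```python
import os
import pickle
import tempfile

data = {"name": "Asha", "scores": [90, 85]}

# Pickling to an in-memory bytes object, then unpickling it
blob = pickle.dumps(data)
restored = pickle.loads(blob)

# Pickling to a file with pickle.dump(), then reading it back with pickle.load()
with tempfile.TemporaryDirectory() as tmp_dir:
    path = os.path.join(tmp_dir, "data.pkl")
    with open(path, "wb") as f:  # pickle works on binary files
        pickle.dump(data, f)
    with open(path, "rb") as f:
        from_file = pickle.load(f)

print(restored == data, from_file == data)  # True True
```

Unpickling can execute arbitrary code, so only unpickle data from sources you trust.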

7. Create a Python program to add two integers without using the addition operator.

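The original code listing did not survive. Below is a hedged sketch of one common approach. XOR produces the sum without carries, and AND shifted left by one produces the carries; the two steps repeat until no carry remains. Python integers are unbounded, so this sketch assumes 32-bit two's complement arithmetic (a mask) to make negative inputs terminate.

```python
def add_without_plus(a: int, b: int) -> int:
    """Add two integers using only bitwise operators (32-bit two's complement)."""
    mask = 0xFFFFFFFF     # keep every intermediate value within 32 bits
    max_int = 0x7FFFFFFF  # largest positive 32-bit signed integer
    a &= mask
    b &= mask
    while b != 0:
        carry = ((a & b) << 1) & mask  # bits that carry into the next position
        a = (a ^ b) & mask             # sum of the bits, ignoring carries
        b = carry
    # Convert the 32-bit pattern back to a signed Python int
    return a if a <= max_int else ~(a ^ mask)


print(add_without_plus(15, 27))  # 42
print(add_without_plus(-5, 3))   # -2
```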

8. Are any tools available to analyze static codes in Python?

Yes. Third-party tools such as Pylint, Prospector, and Codiga analyze Python code statically. They detect errors, style violations, and code smells without running the code, which helps us fix problems early and improve code quality.

9. Differentiate Packages and Modules in Python.

| Package | Module |
|---|---|
| It is a collection of related modules, organized as a directory (traditionally containing an __init__.py file). | It is a single file (such as a .py file) containing definitions of functions, classes, and variables. |
| It is used for code distribution and reuse. | It is used for code organization and reuse. |
| NumPy, pandas, and Django are a few examples of packages. | math, os, and csv are a few examples of modules. |
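One quick way to see the difference at runtime, assuming CPython's standard library layout: a package object carries a __path__ attribute listing the directories searched for its submodules, while a plain module does not. In the standard library, json happens to be a package, while math and csv are modules.

```python
import csv
import json
import json.decoder  # a module inside the json package
import math

# Packages expose __path__; plain modules do not
print(hasattr(json, "__path__"))          # True: json is a package
print(hasattr(json.decoder, "__path__"))  # False: json.decoder is a module
print(hasattr(math, "__path__"))          # False: math is a module
print(hasattr(csv, "__path__"))           # False: csv is a module
```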

10. Why are NumPy arrays better than lists in Python?

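Here are the reasons why we prefer NumPy arrays over lists for numerical work.

- NumPy operations run faster than equivalent Python loops over lists, because they are vectorized and implemented in compiled code.

- NumPy arrays consume less memory than lists, because they store elements of a single data type in a contiguous block of memory instead of storing references to separate Python objects.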
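Here is a rough sketch of both points, assuming NumPy is installed. Exact sizes and timings depend on the platform and NumPy version, so the expected numbers are deliberately not stated:

```python
import sys
import timeit

import numpy as np

n = 100_000
py_list = list(range(n))
arr = np.arange(n)

# Element-wise doubling: a Python-level loop vs. one vectorized NumPy operation
doubled_list = [x * 2 for x in py_list]
doubled_arr = arr * 2

# Memory: the list holds pointers to separate int objects; the array holds raw values
list_bytes = sys.getsizeof(py_list) + sum(sys.getsizeof(x) for x in py_list)
array_bytes = arr.nbytes

list_time = timeit.timeit(lambda: [x * 2 for x in py_list], number=10)
array_time = timeit.timeit(lambda: arr * 2, number=10)
print(f"list: {list_bytes} bytes, {list_time:.4f}s | array: {array_bytes} bytes, {array_time:.4f}s")
```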

Amazon Java Interview Questions

11. What exactly is Java?

Java is a high-level, object-oriented programming language. We can use it to create large-scale applications.

Moreover, Java is platform-independent: Java source code is compiled into bytecode that runs on any platform with a Java Virtual Machine (JVM). So we can run Java applications on any such platform.

12. What is the use of the default constructor in Java?

We use the default constructor to give objects default values. If a class doesn't declare any constructor, the compiler automatically creates a default constructor. Fields that are not explicitly initialized then keep their default values: null for object references such as String, 0 for int, false for boolean, and so on.

Note that the default constructor takes no arguments, which is why it is also called a ‘no-arg’ constructor.

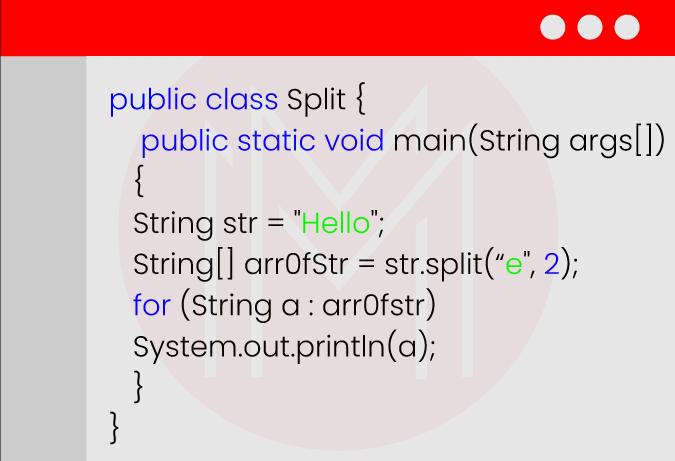

13. How can we split a string in Java?

We can split a string with the split() method of the String class. It takes a regular expression as the delimiter and returns an array of substrings. For example, "Hello World".split(" ") returns two separate strings, "Hello" and "World".

14. What are the uses of packages in Java?

Below are the uses of packages in Java.

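- Java packages group related classes and interfaces, so we can locate classes, interfaces, and annotations quickly.

- They provide access control: members with default (package-private) access are visible only within the same package.

- They prevent name conflicts, since classes with the same name can exist in different packages.

- Together with access modifiers, they can be used for data hiding.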

15. Why isn't Java a pure OOP language?

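Java is not a pure object-oriented language because it supports primitive data types such as byte, int, char, and long, which are not objects. In a pure object-oriented language, every value is an object.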

16. What do you mean by instance variable in Java?

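Instance variables describe the properties (state) of an object, and each object has its own copy of them. They are declared inside a class but outside any method, and all non-static methods of the class can access them.

Combined with access modifiers such as private, instance variables support encapsulation, which protects an object's data. They also hold the object's current state, so they track changes to the object over its lifetime.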

17. Differentiate Hashtable and HashMap in Java.

| Hashtable | HashMap |
|---|---|
| It is synchronized, so it is thread-safe and suited to multithreaded applications. | It is not synchronized, so it is better suited to single-threaded applications. |
| It doesn't allow null keys or null values. | It allows one null key and any number of null values. |
| It doesn't guarantee any iteration order. | It doesn't guarantee any iteration order either; LinkedHashMap preserves insertion order. |

18. When do infinite loops occur in Java?

An infinite loop occurs in Java when the loop's terminating condition is never met. Such a loop runs forever unless the program is terminated.

Most of the time, infinite loops are caused by bugs. Sometimes, however, they are written intentionally, for example to create a wait condition.

19. Why do we use the super keyword?

We use the super keyword to call superclass methods and to invoke the superclass constructor.

Its main benefit is that it removes ambiguity when a subclass and its superclass have methods or fields with the same name.

20. Is it possible to override static methods in Java?

No. We cannot override static methods in Java. Method overriding depends on dynamic binding at runtime, whereas calls to static methods are resolved at compile time (static binding). A static method with the same signature in a subclass hides the superclass method instead of overriding it.

Amazon SQL Interview Questions

21. What do you mean by unique constraint in MySQL?

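We use the unique constraint to ensure that all values in a column (or a combination of columns) are distinct. In other words, no two rows in the table can contain the same value in that column. In MySQL, a UNIQUE column can still hold multiple NULL values.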
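The question mentions MySQL. To keep the example self-contained, this sketch uses SQLite through Python's built-in sqlite3 module, where UNIQUE rejects the duplicate in the same way. The table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)")
conn.execute("INSERT INTO users (email) VALUES ('asha@example.com')")

duplicate_rejected = False
try:
    # A second row with the same email violates the UNIQUE constraint
    conn.execute("INSERT INTO users (email) VALUES ('asha@example.com')")
except sqlite3.IntegrityError as exc:
    duplicate_rejected = True
    print("Rejected:", exc)

row_count = conn.execute("SELECT COUNT(*) FROM users").fetchone()[0]
print(row_count)  # 1
```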

22. What are minus, union, and intersect operators?

We apply the MINUS operator to return the rows produced by the first SELECT statement that are not produced by the second. MINUS is Oracle's name for this operator; standard SQL and many other databases call it EXCEPT.

We use the UNION operator to combine the results of two SELECT statements. It removes duplicate rows, so it returns only unique rows (UNION ALL keeps the duplicates). Both SELECT statements must return the same number of columns with compatible data types.

We apply the INTERSECT operator to return only the rows common to the results of both SELECT statements. As with UNION, the column counts and data types must match.
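Here is a small sketch of the three operators using SQLite through Python's sqlite3 module. SQLite spells MINUS as EXCEPT. The tables a and b are hypothetical:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE a (x INTEGER);
    CREATE TABLE b (x INTEGER);
    INSERT INTO a VALUES (1), (2), (2), (3);
    INSERT INTO b VALUES (2), (3), (4);
""")


def column(sql):
    """Run a query and return its first column as a list."""
    return [row[0] for row in conn.execute(sql)]


union_rows = column("SELECT x FROM a UNION SELECT x FROM b ORDER BY x")
union_all_rows = column("SELECT x FROM a UNION ALL SELECT x FROM b ORDER BY x")
intersect_rows = column("SELECT x FROM a INTERSECT SELECT x FROM b ORDER BY x")
minus_rows = column("SELECT x FROM a EXCEPT SELECT x FROM b ORDER BY x")

print(union_rows)      # [1, 2, 3, 4]
print(union_all_rows)  # [1, 2, 2, 2, 3, 3, 4]
print(intersect_rows)  # [2, 3]
print(minus_rows)      # [1]
```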

23. What do you mean by alias in SQL?

An alias is a temporary name assigned to a table or a column in a query. Aliases make column headings more readable, shorten long table names (especially in joins), and let the output show friendlier names in place of the real column names.

A table alias is also called a correlation name. Aliases exist only for the duration of the query, and we create them with the AS keyword.

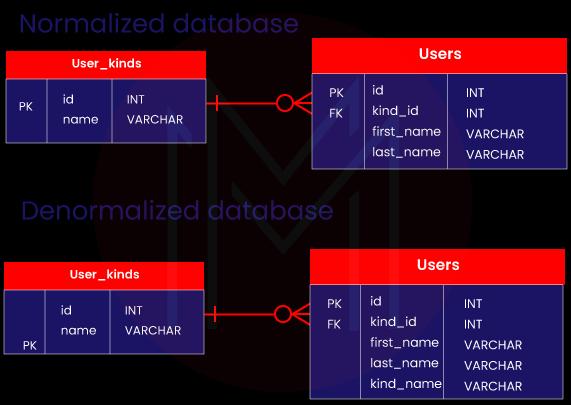
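A minimal sketch of table and column aliases in a join, using SQLite through Python's sqlite3 module. The tables and data are hypothetical:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (id INTEGER, full_name TEXT, dept_id INTEGER);
    CREATE TABLE departments (id INTEGER, name TEXT);
    INSERT INTO employees VALUES (1, 'Asha', 10);
    INSERT INTO departments VALUES (10, 'Cloud');
""")

# e and d are table aliases (correlation names); employee and department are column aliases
cur = conn.execute("""
    SELECT e.full_name AS employee, d.name AS department
    FROM employees AS e
    JOIN departments AS d ON e.dept_id = d.id
""")
columns = [desc[0] for desc in cur.description]
rows = cur.fetchall()
print(columns)  # ['employee', 'department']
print(rows)     # [('Asha', 'Cloud')]
```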

24. What do you understand by denormalization?

Denormalization is the opposite of normalization. A normalized schema is converted into another schema that deliberately contains redundant data. The redundancy improves read performance, because queries need fewer joins. The trade-off is that the redundant copies must be kept consistent, which makes updates more complex.

25. Outline the scalar functions and aggregate functions used in SQL.

Scalar functions operate on a single input value and return a single value. Function names vary between databases; for example, LEN() is SQL Server's name, while MySQL uses LENGTH(). Following are a few scalar functions.

- LEN ( ) – It returns the length of a string value.

- RAND ( ) – It returns a random number.

- UCASE ( ) – It converts a string to uppercase letters.

- NOW( ) – It returns both the current date and time.

Aggregate functions operate on a set of values and return a single value. Following are a few aggregate functions.

- AVG ( ) – It calculates the mean of the given values.

- SUM ( ) – It calculates the total of the given values.

- MIN ( ) – It identifies the minimum value from a set of values.

- LAST ( ) – It returns the last value in a column (supported only in some databases, such as Microsoft Access).
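Function names differ between databases. This sketch uses SQLite through Python's sqlite3 module, where LENGTH() and UPPER() play the roles of LEN() and UCASE(). The orders table is hypothetical:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (customer TEXT, amount REAL);
    INSERT INTO orders VALUES ('asha', 120.0), ('ravi', 80.0), ('meera', 100.0);
""")

# Scalar functions: one result per input row
scalar_rows = conn.execute(
    "SELECT UPPER(customer), LENGTH(customer) FROM orders ORDER BY customer"
).fetchall()

# Aggregate functions: one result for the whole set of rows
avg_amount, total, smallest = conn.execute(
    "SELECT AVG(amount), SUM(amount), MIN(amount) FROM orders"
).fetchone()

print(scalar_rows)                  # [('ASHA', 4), ('MEERA', 5), ('RAVI', 4)]
print(avg_amount, total, smallest)  # 100.0 300.0 80.0
```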

26. Outline the character manipulation functions in SQL.

Character manipulation functions operate on character strings. Below is a brief description of each.

- CONCAT - It joins two or more strings, for example appending string2 to string1.

- LPAD - It pads a string on the left with a given character up to a specified length.

- SUBSTR - It returns part of a string, starting at a given position and running for a given length.

- TRIM – It removes leading and/or trailing characters (spaces by default) from a string.

27. State the difference between CURRENT_DATE() and NOW() in MySQL.

- CURRENT_DATE() and NOW() are both built-in MySQL functions.

- CURRENT_DATE() returns only the current date of the server.

- NOW() returns both the current date and time of the server.

28. How can you create a stored procedure in SQL?

In SQL Server, we can create a stored procedure in two ways:

- By writing a CREATE PROCEDURE statement in Transact-SQL (T-SQL).

- By using SQL Server Management Studio (SSMS).

29. Why do we use distinct statements in SQL?

We use the DISTINCT keyword to remove duplicate rows from a result set. Following are a few properties of DISTINCT.

- It is used with SELECT statements.

- It can be used with aggregate functions such as COUNT, MAX, and AVG, for example COUNT(DISTINCT column).

- It returns only distinct values.

- When several columns are selected, it considers the combination of all of them, not each column separately.
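A small sketch using SQLite through Python's sqlite3 module. It shows that DISTINCT on two columns works on the combination of values. The visits table is hypothetical:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE visits (city TEXT, country TEXT);
    INSERT INTO visits VALUES
        ('Pune', 'India'), ('Pune', 'India'), ('Delhi', 'India'),
        ('Paris', 'France'), ('Paris', 'USA');
""")

cities = [r[0] for r in conn.execute("SELECT DISTINCT city FROM visits ORDER BY city")]
pairs = conn.execute(
    "SELECT DISTINCT city, country FROM visits ORDER BY city, country"
).fetchall()
country_count = conn.execute("SELECT COUNT(DISTINCT country) FROM visits").fetchone()[0]

print(cities)         # ['Delhi', 'Paris', 'Pune']
print(pairs)          # [('Delhi', 'India'), ('Paris', 'France'), ('Paris', 'USA'), ('Pune', 'India')]
print(country_count)  # 3
```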

30. Distinguish between a cross join and a natural join.

| Cross join | Natural join |
|---|---|
| It generates the Cartesian product of two tables: the number of rows in the result is the product of the row counts of the two tables. | It joins two tables based on the columns that have the same name and compatible data types in both tables. |
| It returns every pair of rows from the two tables; no join condition is specified. | No explicit join condition is specified; it returns the rows whose values match in the common columns. |
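A minimal sketch comparing the two joins in SQLite through Python's sqlite3 module. The tables share the column name dept_id, and the data is hypothetical:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (name TEXT, dept_id INTEGER);
    CREATE TABLE departments (dept_id INTEGER, dept_name TEXT);
    INSERT INTO employees VALUES ('Asha', 1), ('Ravi', 2), ('Meera', 1);
    INSERT INTO departments VALUES (1, 'Cloud'), (2, 'Data');
""")

# CROSS JOIN: every employee paired with every department (3 x 2 = 6 rows)
cross = conn.execute("SELECT name, dept_name FROM employees CROSS JOIN departments").fetchall()

# NATURAL JOIN: matched automatically on the shared column name dept_id
natural = conn.execute(
    "SELECT name, dept_name FROM employees NATURAL JOIN departments ORDER BY name"
).fetchall()

print(len(cross))  # 6
print(natural)     # [('Asha', 'Cloud'), ('Meera', 'Cloud'), ('Ravi', 'Data')]
```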

Amazon Data Structures and Algorithms Interview Questions

31. What do you mean by linear data structure?

In a linear data structure, data elements are arranged sequentially, so each element is connected to its previous and next elements. Arrays, stacks, linked lists, and queues are examples of linear data structures.

All elements sit on a single level, and each element has at most one predecessor and one successor. As a result, the whole structure can be traversed in a single run.

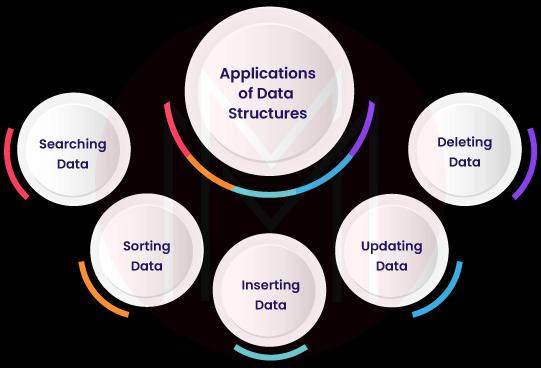

32. List out the applications of data structure and algorithms.

The below image depicts a few applications of data structures and algorithms.

33. Brief about heap in data structure?

A heap is a special tree-based data structure, usually a complete binary tree, that satisfies the heap property.

There are two types of heap data structures: max-heap and min-heap.

In a max-heap, every parent node's value is greater than or equal to its children's values, so the root holds the largest value in the tree. In a min-heap, every parent node's value is less than or equal to its children's values, so the root holds the smallest value.

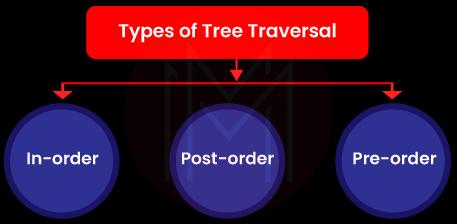
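Python's standard heapq module keeps a min-heap inside a plain list. A minimal sketch, with a common negation trick for max-heap behavior. The values are arbitrary:

```python
import heapq

values = [7, 2, 9, 4, 1]

# heapq maintains a min-heap inside a list: heap[0] is always the smallest
min_heap = list(values)
heapq.heapify(min_heap)
print(min_heap[0])  # 1

heapq.heappush(min_heap, 0)
print(heapq.heappop(min_heap))  # 0

# Max-heap behavior with heapq: store negated values
max_heap = [-v for v in values]
heapq.heapify(max_heap)
print(-max_heap[0])  # 9


def is_min_heap(a):
    """Check the min-heap property: every parent <= its children."""
    return all(a[(i - 1) // 2] <= a[i] for i in range(1, len(a)))
```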

34. Define Tree traversal.

Tree traversal is the process of visiting every node of a tree exactly once. The nodes of a tree are connected by links (edges).

There are three common types of tree traversal, as shown in the graphic below.

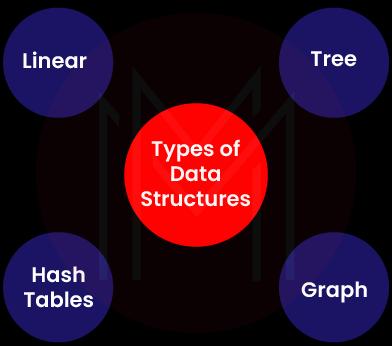
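Here is a minimal recursive sketch of the three depth-first orders (preorder, inorder, postorder) on a small example tree. The Node class is a hypothetical helper:

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    value: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def preorder(node: Optional[Node], out: List[int]) -> List[int]:
    if node is not None:
        out.append(node.value)  # root first
        preorder(node.left, out)
        preorder(node.right, out)
    return out


def inorder(node: Optional[Node], out: List[int]) -> List[int]:
    if node is not None:
        inorder(node.left, out)
        out.append(node.value)  # root between the subtrees
        inorder(node.right, out)
    return out


def postorder(node: Optional[Node], out: List[int]) -> List[int]:
    if node is not None:
        postorder(node.left, out)
        postorder(node.right, out)
        out.append(node.value)  # root last
    return out


#       1
#      / \
#     2   3
#    / \
#   4   5
root = Node(1, Node(2, Node(4), Node(5)), Node(3))
print(preorder(root, []))   # [1, 2, 4, 5, 3]
print(inorder(root, []))    # [4, 2, 5, 1, 3]
print(postorder(root, []))  # [4, 5, 2, 3, 1]
```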

35. Name the different types of data structures.

The following image shows the different types of data structures.

36. What are the data structures used in BFS and DFS algorithms?

We use the ‘queue’ data structure in the BFS (breadth-first search) algorithm and the ‘stack’ data structure in the DFS (depth-first search) algorithm. A recursive DFS uses the call stack implicitly.
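Here is a minimal sketch on a small hypothetical directed graph stored as an adjacency dictionary. collections.deque serves as the BFS queue, and a plain list serves as the DFS stack:

```python
from collections import deque

# Hypothetical directed graph as an adjacency list
graph = {
    "A": ["B", "C"],
    "B": ["D"],
    "C": ["D", "E"],
    "D": [],
    "E": [],
}


def bfs(start):
    """Breadth-first search: a FIFO queue visits nodes level by level."""
    order, seen = [start], {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for neighbor in graph[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                order.append(neighbor)
                queue.append(neighbor)
    return order


def dfs(start):
    """Depth-first search: a LIFO stack follows one branch as deep as possible."""
    order, seen = [], set()
    stack = [start]
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        # Push neighbors in reverse so the first-listed neighbor is explored first
        for neighbor in reversed(graph[node]):
            if neighbor not in seen:
                stack.append(neighbor)
    return order


print(bfs("A"))  # ['A', 'B', 'C', 'D', 'E']
print(dfs("A"))  # ['A', 'B', 'D', 'C', 'E']
```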

37. State the properties of a BTree.

The following are the crucial properties of a BTree.

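- Every node has at most m children, where m is the order of the tree.

- Every internal node other than the root has at least ⌈m/2⌉ children.

- The root node has at least two children unless it is a leaf.

- All leaf nodes are at the same level.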

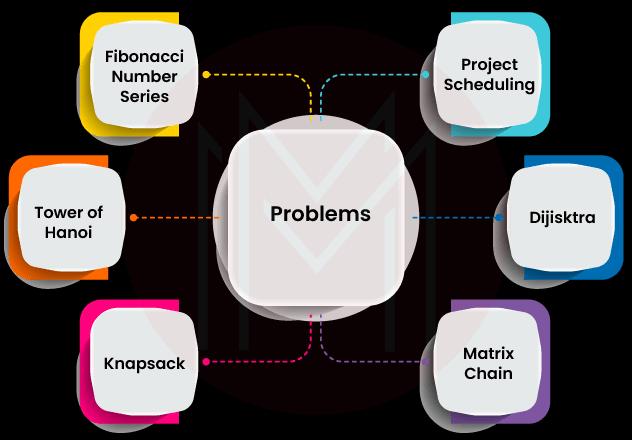

38. Mention the problems that dynamic programming algorithms can resolve.

Below are the problems that can be resolved with dynamic programming algorithms.

39. Create a Java program to find the height of a binary tree.

Code:

Output:

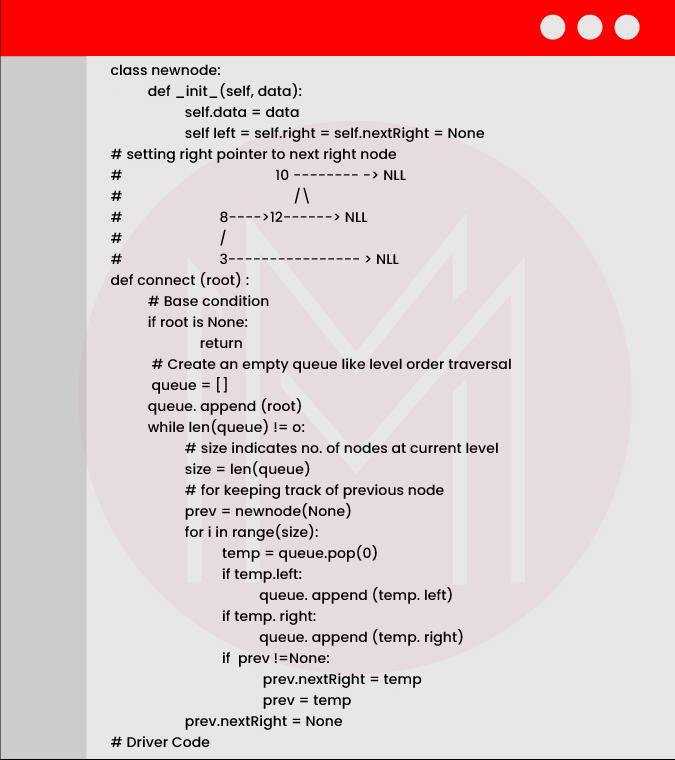

40. Write a Python program to connect nodes at the same binary tree level.

Code:

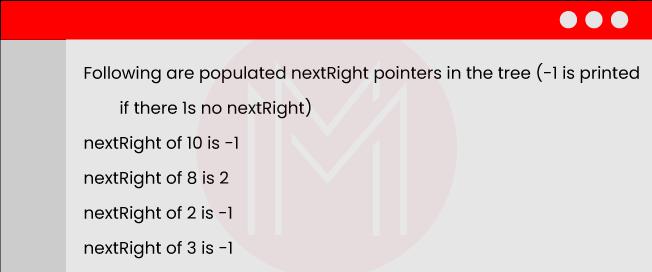

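The original listing survives only as an image. Below is a hedged sketch of one common approach: a level-order (BFS) traversal links each node to the node on its right at the same level. The Node class, its next attribute, and the helper levels_via_next are assumptions for illustration.

```python
from collections import deque
from typing import Optional


class Node:
    def __init__(self, val, left=None, right=None):
        self.val = val
        self.left = left
        self.right = right
        self.next: Optional["Node"] = None  # right neighbor on the same level


def connect(root: Optional[Node]) -> Optional[Node]:
    """Link every node to the next node on its level using a BFS queue."""
    if root is None:
        return None
    queue = deque([root])
    while queue:
        previous = None
        for _ in range(len(queue)):  # process exactly one level per pass
            node = queue.popleft()
            if previous is not None:
                previous.next = node
            previous = node
            if node.left:
                queue.append(node.left)
            if node.right:
                queue.append(node.right)
        # The last node of each level keeps next = None
    return root


def levels_via_next(root: Optional[Node]):
    """Read the tree level by level by following the next pointers."""
    levels, leftmost = [], root
    while leftmost:
        level, node, next_leftmost = [], leftmost, None
        while node:
            level.append(node.val)
            if next_leftmost is None:
                next_leftmost = node.left or node.right
            node = node.next
        levels.append(level)
        leftmost = next_leftmost
    return levels


#         1
#        / \
#       2   3
#      / \   \
#     4   5   6
root = connect(Node(1, Node(2, Node(4), Node(5)), Node(3, None, Node(6))))
print(levels_via_next(root))  # [[1], [2, 3], [4, 5, 6]]
```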

Amazon Data Analyst Interview Questions

41. What are the common challenges data analysts usually face?

Here are the challenges that data analysts commonly face:

- Inferring valuable insights from large volumes of data

- Storing, securing, and retrieving data effectively

- Selecting the right tools for data analysis and visualization

- Finding high-quality data for accurate analysis.

42. Briefly describe EDA.

EDA stands for Exploratory Data Analysis. It is the initial analysis of a dataset to spot anomalies, discover patterns, and check assumptions.

It also helps us better understand the data and the relationships between variables.

43. What are the various sampling techniques data analysts use?

Here are the different sampling techniques data analysts use:

- Simple random sample

- Stratified sample

- Systematic sample

- Cluster sample.
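Here is a minimal sketch of the four techniques using Python's random module. The population of IDs 1 to 100, the odd/even strata, and the clusters of ten are hypothetical. The fixed seed only makes the run reproducible.

```python
import random

rng = random.Random(42)           # fixed seed for a reproducible run
population = list(range(1, 101))  # hypothetical population: IDs 1..100

# Simple random sample: every member has the same chance of selection
simple = rng.sample(population, 10)

# Systematic sample: random start, then every k-th member
k = len(population) // 10
start = rng.randrange(k)
systematic = population[start::k]

# Stratified sample: split into strata (here odd/even IDs) and sample each one
strata = {
    "odd": [x for x in population if x % 2 == 1],
    "even": [x for x in population if x % 2 == 0],
}
stratified = [x for group in strata.values() for x in rng.sample(group, 5)]

# Cluster sample: split into clusters of 10, then keep whole randomly chosen clusters
clusters = [population[i:i + 10] for i in range(0, len(population), 10)]
cluster_sample = [x for cluster in rng.sample(clusters, 2) for x in cluster]
```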

44. What is precisely time-series analysis?

It is the process of analyzing data points collected at successive points in time over a period. Reliable and consistent time-series analysis needs a reasonably large dataset.

A common use of time-series analysis is forecasting future values from historical data.

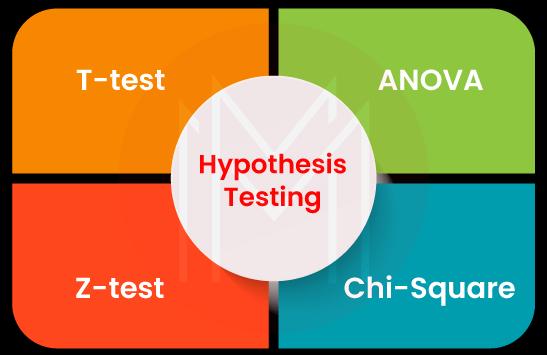
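To illustrate forecasting from historical data, here is the simplest baseline: a moving-average forecast, which predicts the next value as the mean of the last few observations. The monthly figures are hypothetical, and real forecasting usually relies on richer models.

```python
def moving_average_forecast(series, window):
    """Forecast the next value as the mean of the last `window` observations."""
    if not 0 < window <= len(series):
        raise ValueError("window must be between 1 and len(series)")
    return sum(series[-window:]) / window


monthly_sales = [120, 132, 128, 141, 150, 147]    # hypothetical historical data
print(moving_average_forecast(monthly_sales, 3))  # 146.0
```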

45. Name the various types of hypothesis testing performed in Data Analytics.

Below is the image that shows the various hypothesis tests usually performed in data analytics.

46. Compare Data Profiling and Data Mining.

| Data Profiling | Data Mining |
|---|---|
| It examines data from an existing source and summarizes its structure, content, and quality. | It analyzes collected data to discover patterns and retrieve insights. |
| It can be performed on both structured and unstructured data. | It is mainly performed on structured data. |
| Structure discovery, content discovery, and relationship discovery are a few types of data profiling. | Descriptive data mining and predictive data mining are a few types of data mining. |
| Melissa Data Profiler is one tool used for data profiling. | Orange, Weka, and Rattle are a few tools used for data mining. |
| It is also known as data archaeology. | It is also known as Knowledge Discovery in Databases (KDD). |

47. How can you manage missing values in a dataset?

We can manage missing values in a dataset in the following ways:

- Deleting rows with missing values

- Estimating missing values with interpolation

- Replacing missing values with an arbitrary value or a statistic such as the mean, median, or mode
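Here is a minimal plain-Python sketch of the three approaches on a hypothetical series, where None marks a missing value. Libraries such as pandas provide built-in equivalents.

```python
from statistics import mean

readings = [10.0, None, 14.0, None, None, 20.0]  # hypothetical series; None marks gaps

# 1. Deletion: drop the missing entries
dropped = [x for x in readings if x is not None]


# 2. Linear interpolation between the nearest known neighbors
def interpolate(values):
    result = list(values)
    known = [i for i, v in enumerate(values) if v is not None]
    for left, right in zip(known, known[1:]):
        step = (values[right] - values[left]) / (right - left)
        for i in range(left + 1, right):
            result[i] = values[left] + step * (i - left)
    return result  # gaps before the first or after the last known value stay None


# 3. Imputation: replace gaps with a fixed value, here the mean of observed values
fill_value = mean(dropped)
imputed = [fill_value if x is None else x for x in readings]

print(dropped)                # [10.0, 14.0, 20.0]
print(interpolate(readings))  # [10.0, 12.0, 14.0, 16.0, 18.0, 20.0]
```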

48. Describe hierarchical clustering.

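We use hierarchical clustering to group similar objects into clusters and arrange those clusters in a hierarchy. The hierarchy is built either by repeatedly merging the closest clusters (agglomerative) or by repeatedly splitting them (divisive). Objects within a cluster are similar to each other, and each cluster is distinct from the others.

A dendrogram is the tree diagram used to represent hierarchical clustering. This clustering is used in domains such as bioinformatics, information processing, and image processing.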

49. Distinguish Data Lake and data warehouse.

| Data Lake | Data Warehouse |
|---|---|
| It stores structured, semi-structured, and unstructured data. | It stores relational data from databases, transactional systems, and business applications. |
| It holds both curated and non-curated (raw) data. | It holds highly curated data. |
| It is used primarily by data scientists. | It is used mostly by business professionals. |
| It is highly accessible, and changes can be made quickly. | It is more complex, and changes take more time and effort. |

50. How can we identify outliers in a dataset?

We can identify outliers in a dataset through the following methods:

- Sorting the data and inspecting the extreme values

- Statistical outlier detection, such as z-scores

- Visualizations, such as box plots and scatter plots

- The interquartile range (IQR)
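Here is a minimal sketch of the interquartile range method using Python's statistics module and the common 1.5 × IQR rule of thumb. The data is hypothetical.

```python
from statistics import quantiles

data = [12, 14, 14, 15, 16, 17, 18, 19, 21, 95]  # hypothetical measurements

q1, _, q3 = quantiles(data, n=4)  # first and third quartiles
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr  # common 1.5 x IQR fences
outliers = [x for x in data if x < lower or x > upper]
print(outliers)  # [95]
```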

Amazon Data Science Interview Questions

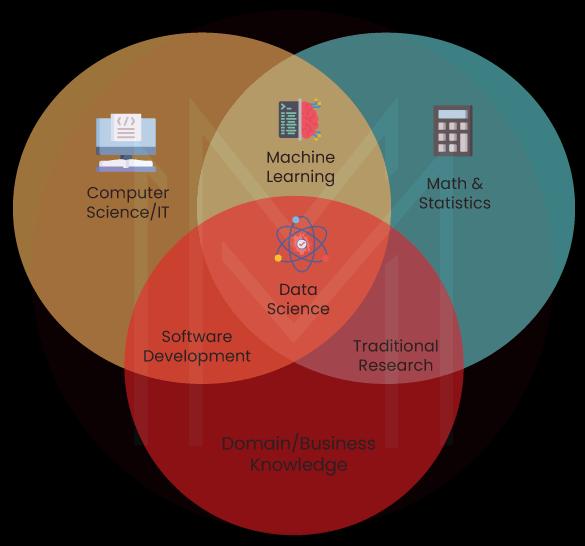

51. Explain: data science.

Data science is a multidisciplinary approach to extracting insights from data. It combines mathematics, statistics, AI, and computer science, which makes it possible to store, process, and analyze very large volumes of data.

Furthermore, we can use data science to perform descriptive, predictive, diagnostic, and prescriptive analyses.

52. What are the various feature selection methods used for variable selection in machine learning?

We can use the following methods for feature selection in machine learning.

- Filter methods – information gain, correlation coefficient, Fisher's score, chi-square test, and mean absolute difference (MAD).

- Embedded methods – random forest importance and LASSO regularization.

- Wrapper methods – backward feature elimination, forward feature selection, and exhaustive feature selection.

53. What do you mean by dimensionality reduction in data science?

Dimensionality reduction is the process of reducing the number of random variables (features) in a problem while keeping as much useful information as possible. It simplifies modeling complex problems, removes redundancy, reduces model overfitting, and so on.

Here is a list of dimensionality reduction methods:

- Factor analysis

- Forward feature selection

- Linear discriminant analysis

- High correlation filter

- Independent component analysis

54. What is the significance of p-value in data science?

The p-value is the probability of obtaining a result at least as extreme as the one observed, assuming the null hypothesis is true. We use it to decide whether to reject the null hypothesis by comparing it with a small significance level (α), commonly 0.05.

The p-value always lies between 0 and 1. If the p-value is less than the significance level, there is strong evidence against the null hypothesis, and we reject it; otherwise, we fail to reject it.
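A small worked example, assuming a one-sided exact binomial test. Under the null hypothesis the coin is fair. If it lands heads 9 times in 10 flips, the p-value is the probability of 9 or more heads: (C(10,9) + C(10,10)) / 2^10 = 11/1024 ≈ 0.011.

```python
from math import comb


def binomial_p_value(successes: int, trials: int, p: float = 0.5) -> float:
    """One-sided p-value: P(X >= successes) for X ~ Binomial(trials, p)."""
    return sum(
        comb(trials, k) * p**k * (1 - p) ** (trials - k)
        for k in range(successes, trials + 1)
    )


alpha = 0.05                       # significance level chosen before the test
p_value = binomial_p_value(9, 10)  # 9 heads in 10 flips of a supposedly fair coin
print(round(p_value, 4))           # 0.0107
print("reject H0" if p_value < alpha else "fail to reject H0")  # reject H0
```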

55. What exactly is the ROC curve?

ROC stands for Receiver Operating Characteristic. The ROC curve shows the performance of a classification model across all classification thresholds.

It plots two parameters against each other: the true positive rate (TPR) on the y-axis and the false positive rate (FPR) on the x-axis.
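Here is a minimal sketch of how ROC points are computed. For each threshold, the model predicts positive when its score is at or above the threshold, and we record (FPR, TPR). The labels and scores are hypothetical. For real use, scikit-learn provides roc_curve and roc_auc_score.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) points, sweeping the threshold from high to low."""
    positives = sum(1 for y in labels if y == 1)
    negatives = len(labels) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 1)
        fp = sum(1 for y, s in zip(labels, scores) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points):
    """Area under the ROC curve using the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


labels = [1, 0, 1, 1, 0, 0]              # 1 = positive class
scores = [0.9, 0.8, 0.7, 0.6, 0.3, 0.2]  # model's predicted scores
points = roc_points(labels, scores)
print(points[-1])             # (1.0, 1.0)
print(round(auc(points), 3))  # 0.778
```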

56. How is data analytics different from data science?

| Data Analytics | Data Science |
|---|---|
| It requires skills such as BI, SQL, statistics, and programming languages. | It involves data modeling, advanced statistics, predictive analysis, and programming skills. |
| Its scope is micro. | Its scope is macro. |
| It explores existing information. | It discovers new questions and finds answers to them. |
| It is widely used in gaming, healthcare, and so on. | It is commonly used in search engine engineering, ML, etc. |

57. Compare Supervised and Unsupervised Learning.

| Supervised Learning | Unsupervised Learning |
|---|---|
| We use labeled data to train models. | We use unlabeled data to train models. |
| This approach helps to predict outputs. | This approach helps to find hidden patterns and valuable insights. |
| It needs supervision to train models. | It doesn't need supervision to train models. |
| Its results are generally more accurate and easier to verify, because they can be checked against the labels. | Its results are harder to verify and can be less accurate, because there are no labels to check against. |
| It includes algorithms such as linear regression, Bayesian logic, and support vector machines. | It includes algorithms such as the Apriori algorithm and clustering algorithms. |

58. How can you prevent a model from overfitting in data science?

We can prevent a model from overfitting by using the following methods:

- Early stopping

- Regularization

- Ensembling

- Pruning

- Data augmentation

59. How should you maintain a deployed model?

We can maintain a deployed model with the following steps:

- Monitor and evaluate the model's performance on new data

- Compare it with similar or newly trained models

- Rebuild (retrain) the model to adapt to changes in the data

60. What are the differences between wide-format and long-format data?

| Wide format | Long format |
|---|---|
| Data items do not repeat in the first column. | Data items repeat in the first column. |
| It is often more convenient for analysis, with one row per subject. | It is convenient when you want to visualize multiple variables. |
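Here is a minimal plain-Python sketch of reshaping wide data into long data. The student scores are hypothetical. In pandas, pandas.melt performs the same reshaping.

```python
# Wide format: one row per student, one column per subject (hypothetical data)
wide = [
    {"student": "Asha", "math": 90, "physics": 85},
    {"student": "Ravi", "math": 78, "physics": 88},
]


def wide_to_long(rows, id_column):
    """Turn each non-id column into its own (id, variable, value) row."""
    long_rows = []
    for row in rows:
        for column, value in row.items():
            if column != id_column:
                long_rows.append({id_column: row[id_column], "variable": column, "value": value})
    return long_rows


long_format = wide_to_long(wide, "student")
for row in long_format:
    print(row)
# {'student': 'Asha', 'variable': 'math', 'value': 90}
# {'student': 'Asha', 'variable': 'physics', 'value': 85}
# {'student': 'Ravi', 'variable': 'math', 'value': 78}
# {'student': 'Ravi', 'variable': 'physics', 'value': 88}
```

In the long result, each student's name repeats in the first column, which matches the difference described in the table.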

Amazon FAQs

1. What are the Amazon interview rounds?

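The exact number of rounds depends on the role. Typically, candidates take an online coding assessment followed by up to four interviews. Most rounds focus on data structures and algorithms. Depending on the role, there may also be a system design round and an HR (behavioral) round.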

2. Are Amazon interviews hard?

How hard the interview feels depends largely on how well you prepare. Generally, the questions are standard interview questions ranging from easy to medium difficulty. However, the difficulty varies with the position you have applied for.

3. How do I apply for a job at Amazon?

You can apply by visiting the Amazon Jobs portal and finding a vacancy that matches your experience and skill set.

4. Can I apply for multiple roles at Amazon?

Yes, you can apply for multiple roles at Amazon, given they match your skills and interests.

5. How do I prepare for an Amazon online interview?

To prepare for an Amazon online interview, focus on behavioral questions. Structure your responses with the STAR method and be as thorough with details as possible. Also, focus on what you personally did (“I”) rather than what the team did (“we”).

6. What are some of the questions that one should ask the interviewer at Amazon?

You can question the interviewer about the company's culture and the current technologies being used there. You can also ask about future innovations that Amazon is working on.

7. How to prepare for the coding interviews at Amazon?

Practice as much as you can. Put plenty of effort into solving challenging problems on data structures and algorithms. Have a good knowledge of basic computer science concepts, such as operating systems, object-oriented programming, computer networks, and more.

8. Does Amazon have a dress code?

No, Amazon does not impose a formal dress code.

Amazon Leadership Principles

The company follows the below-mentioned leadership principles:

- Customer Obsession: The company is focused on working vigorously to earn and retain the trust of customers.

- Ownership: Employees at Amazon don’t believe in sacrificing long-term value to chase short-term results. They act on behalf of the whole organization.

- Invent and Simplify: Leaders at Amazon expect innovation and invention from their teams and always look for ways to simplify. They constantly stay on the lookout for new ideas.

- Are Right, A Lot: Leaders have strong judgment and good instincts, which makes them right most of the time. They seek diverse perspectives.

- Learn and Be Curious: People at Amazon are always on their toes to improve themselves. They are consistently curious and try to explore new possibilities.

- Hire and Develop the Best: With every hire and promotion, they raise the bar of performance. They recognize unique talent and coach others seriously.

- Insist on the Highest Standards: Leaders here have high standards. They consistently drive their teams to deliver high-quality products, services, and processes.

- Think Big: They communicate and create a bold direction to inspire results. They think in a different way and always look for better methods to serve their customers.

- Bias for Action: The team values calculated risk-taking because, according to Amazon, speed matters in business.

- Frugality: Their aim is to achieve more with less.

- Earn Trust: Leaders speak candidly, listen attentively and treat everybody respectfully. They are self-critical and don’t believe in pleasing anybody.

- Dive Deep: Teams at Amazon operate at all levels, stay connected to the details, and audit frequently.

- Have Backbone: Disagree and Commit: Leaders respectfully challenge decisions when they don’t agree with them, even if the situation is exhausting or uncomfortable. However, once a decision has been taken, they commit to it wholly.

- Deliver Results: Leaders focus on the key inputs for their business and deliver them with the right quality and in a timely fashion.

- Strive to be Earth’s Best Employer: Amazon strives to create a productive, safer, diverse, high-performing, and just working environment where everybody can work with empathy while having fun.

- Success and Scale Bring Broad Responsibility: They are thoughtful and humble about the impacts of their actions.

Tips to Crack Amazon Interview

Now that you are aware of the rich heritage, work culture, and leadership principles of Amazon, you would be more tempted than ever to get a job there, right? So, here are some tips to crack Amazon interviews and be a part of this organization.

- Understand the leadership principles: People at Amazon take pride in their leadership principles. Thus, knowing them, understanding them, and citing a few during your interview can make you an attractive candidate.

- Be thorough with data structures and algorithms: The company appreciates people who can solve problems. If you wish to leave a good impression, practice data structure and algorithm problems. A solid understanding of data structures and algorithms will help you earn brownie points.

- Use the STAR methodology to create responses: The Situation, Task, Action, and Result (STAR) method helps you structure responses to behavioral questions. Begin by describing the situation, then the task that had to be done, the action you took to complete it, and the result. Make sure you don't leave out any crucial detail.

- Know your strengths: If you don't highlight the skills you truly have, nobody will know about them, and this can cost you a lot. So, make sure you know yourself, your strengths, and your skills well enough to highlight them in the interview.

- Keep the conversation flowing: In an interview, you should not only give solutions but also keep the conversation engaging and flowing. Everything you say should be sensible and clear to the interviewer. If possible, ask questions from your end as well.

Conclusion

Understanding what goes on in an Amazon interview gives you an upper hand over your competitors. With this list of 60 Amazon interview questions and answers, you can prepare well and avoid going silent in front of the interviewer. If you want to enrich your career and become a professional in AWS, enroll in "AWS Training". This course will help you achieve excellence in this domain.


Although she comes from a small town, Himanshika dreams big and aims to accomplish varied goals. Having worked in the content writing industry for more than 5 years, she has gained extensive experience across several niches and domains. Currently working on her technical writing skills with MindMajix, Himanshika is looking forward to exploring the diversity of the IT industry. You can reach out to her on LinkedIn.