Big Data Modeling

The growth of the internet and the massive use of data have increased the demand for CPU capacity, for fast data retrieval, and for schemas that can manage complex data structures while preserving the integrity and reliability of the available data. This type of data is called Big Data, or large-scale data. Big Data has to deal with two critical issues: the increasing size of data sets and the rising complexity of the data.

To resolve these issues, we use data modeling to organize the data in a specific model and visualize it. To use big data to its full capabilities, we have to model it with different types of data models, such as the Hierarchical Model, the Network Model, and the Entity-Relationship Model. Big data modeling therefore helps enterprises understand complex data. In this Big Data Modeling blog, you will explore the data modeling concepts and techniques required for modeling big data.


What is Big Data Modeling?

Big Data Modeling combines two terms: “Big Data” and “Data Modeling.” “Big Data” refers to the massive amounts of data that enterprises create today. This type of data lacks any consistent structure and is complex by nature, so enterprises can't analyze it using conventional methods.

Such complex data can be analyzed with well-designed data modeling techniques. “Data Modeling” means arranging the data in visual patterns so that the analysis can be carried out quickly. These techniques involve developing a visual representation of all or part of a dataset.


What is a Data Model?

A data model defines data semantics, data descriptions, and data consistency constraints. It provides conceptual models that describe the database's design at every level of data abstraction. By defining and structuring the data from the perspective of the related business processes, it supports the development of efficient management systems.

Why do we use a Data Model?

We use data models for the following reasons:

1. Data interpretation can be enhanced through the visual representation of the data. It gives a full picture of the data to help you design the physical database.

2. A data model gives a clear picture of the business requirements.

3. The data model accurately represents an organization's critical data, which reduces the risk of data omissions.

4. An efficient data model ensures consistency across an organization's projects, which improves data quality.

5. It helps us build a common interface that integrates the data on a unified platform. It also helps you identify duplicate, incomplete, and redundant data.

6. It describes the relational tables, primary and foreign keys, and stored procedures.

7. It gives project managers better scope and quality control, and it improves overall performance.

Why is Data Modeling required?

Data Modeling is a critical step in developing any complex software. It allows developers to explore the domain and organize their work accordingly. The data model is the system used to organize and store the data. It enables us to arrange the data according to access, usage, and service. Big data can benefit from proper data models and storage environments in the following ways:

- Performance: A good data model simplifies database tuning, and a well-built database generally runs fast, often faster than anticipated. The data model concepts should be coherent and crisp to achieve optimal performance. After that, we must apply accurate rules for converting the data model into the database design.

- Efficiency: With a data model, organizations can save a lot of time in developing the core principles and values that govern enterprise operations. As a result, business plans can be designed in half the time previously needed. Data modeling also reduces company expenses, because it makes faults in data sets easy to identify.

- Cost: Good data models let us develop applications at a low cost. Data modeling generally takes less than 5 to 10% of the project budget and can reduce the 65 to 75% of the budget generally allotted to programming. Data modeling catches errors and mistakes early, when they are easy to fix, which is better than fixing them after the software has been built.

- Quality: Just as architects consult blueprints before constructing a building, we must consider the data before developing the application. Generally, about 70 percent of software development efforts fail, and the primary source of failure is hasty coding. A data model helps us define the problem, allowing us to consider different approaches and choose the most suitable one.

- Managed Risk: We can use the data model to estimate the complexity of the software and gain insight into the development effort and project risk. To do so, we must consider the size of the model and the intensity of its inter-table connections.

- Good Documentation: Data models document essential concepts, providing the basis for long-term maintenance. The documentation also helps you cope with staff turnover. Today, most application vendors provide a data model of their application on request, because the IT industry recognizes that data models communicate key ideas and abstractions clearly.


Data Model Perspectives

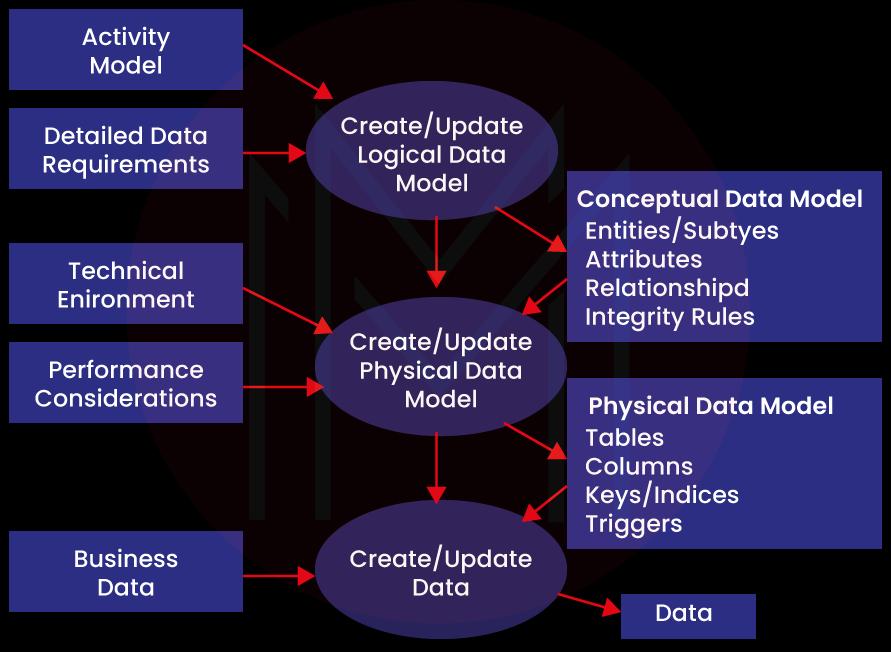

Physical, Logical, and Conceptual Data Models are the three kinds of data models. Data Models are utilized for describing the data, how it is arranged in the database, and how data components are associated with one another.

Conceptual Model

This stage defines what the model should contain in order to organize and explain the business rules. It primarily focuses on business-related entities, relationships, and attributes. Business stakeholders and Data Architects are responsible for its development.

It is used to define the scope of the system. It is a tool to organize, visualize, and scope business concepts. The purpose of building the conceptual model is to establish the entities, attributes, and relationships.

The conceptual data model is built from three key components:

- Attribute: Properties of the entity

- Entity: A real-world thing.

- Relationship: Association between the two entities.

Let us look at an example of a conceptual data model:

Consider two entities: Customer and Product. The Product entity's attributes are the product's name and price, while the Customer entity's attributes are the customer's number and name. Sales acts as the relationship between these two entities.

- The Conceptual Data Model is built with the business audience in mind.

- It provides an overview of the business concepts for the whole organization.

- It is built independently of hardware requirements, such as data storage space and location, and of software requirements, such as the DBMS vendor and technology.
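To make the Customer/Product/Sales example concrete, here is a minimal Python sketch of a conceptual model. The `Entity` and `Relationship` classes and the `describe` helper are hypothetical names chosen for illustration, not part of any modeling tool. The sketch deliberately records only entities, attributes, and relationships, with no data types, keys, or DBMS details.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical classes: a conceptual model only names entities, their
# attributes, and the relationships between them. Data types, keys,
# and DBMS details are deliberately left out at this level.

@dataclass(frozen=True)
class Entity:
    name: str
    attributes: tuple[str, ...]

@dataclass(frozen=True)
class Relationship:
    name: str
    source: Entity
    target: Entity

def describe(rel: Relationship) -> str:
    """Render a relationship as a one-line summary for business readers."""
    return f"{rel.source.name} --{rel.name}--> {rel.target.name}"

customer = Entity("Customer", ("number", "name"))
product = Entity("Product", ("name", "price"))
sales = Relationship("Sales", customer, product)

print(describe(sales))  # Customer --Sales--> Product
```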

Logical Model

The Logical Data Model describes the structure of the data and the relationships between data elements. It lays the foundation for the physical model by adding further information to the elements of the conceptual data model. At this level, we don't define primary or secondary keys. This model also lets us verify and adjust the connector details for the relationships that were established earlier.

The logical data model describes the data requirements of a single project, but it can be integrated with other logical data models depending on the project's scope. The data attributes have data types, many of which have exact lengths and precisions.

- The logical data model is designed and developed independently of the database management system.

- Data types with exact lengths and precisions are assigned to the data attributes.

- It describes the data needed for a single project but can be integrated with other logical data models, depending on the project's scope.
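The sketch below shows what "data types with exact lengths and precisions" can mean in practice while staying independent of any DBMS. It is a hypothetical illustration: the `Attribute` class, the two type names `"string"` and `"decimal"`, and the sample limits (100 characters, 10 digits with 2 decimal places) are assumptions. In keeping with this level of modeling, no primary or foreign keys appear.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal

@dataclass(frozen=True)
class Attribute:
    """Hypothetical logical-model attribute with a DBMS-independent type."""
    name: str
    data_type: str      # "string" or "decimal" in this sketch
    length: int = 0     # maximum number of characters (strings)
    precision: int = 0  # total number of digits (decimals)
    scale: int = 0      # digits after the decimal point (decimals)

    def accepts(self, value: object) -> bool:
        """Check that a value, as written, fits the declared type and limits."""
        if self.data_type == "string":
            return isinstance(value, str) and len(value) <= self.length
        if self.data_type == "decimal":
            if not isinstance(value, Decimal) or not value.is_finite():
                return False
            _, digits, exponent = value.as_tuple()
            fraction_digits = max(0, -exponent)
            integer_digits = max(0, len(digits) + exponent)
            return (fraction_digits <= self.scale
                    and integer_digits <= self.precision - self.scale)
        return False

# Logical attributes of the Product entity (sample limits, not a standard).
product_name = Attribute("name", "string", length=100)
product_price = Attribute("price", "decimal", precision=10, scale=2)

print(product_price.accepts(Decimal("19.99")))   # True
print(product_price.accepts(Decimal("19.999")))  # False: three decimal places
```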

Physical Model

The physical model describes how the data model is implemented with a specific database management system. It defines the implementation in terms of tables, indexes, CRUD operations, partitioning, and so on. Database developers and administrators build it.

The physical data model defines how the data model is implemented in the database. It describes the database and helps build the schema by specifying triggers, constraints, column keys, indexes, and other DBMS features. This data model helps visualize the database's layout, access paths, and views. Authorizations, primary keys, foreign keys, access profiles, and so on are all defined in this data model.

Relationships between tables are specified in this model, including their cardinality and nullability. The physical data model is developed for a specific version of the database management system, a specific data storage technology, and a specific project location.
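Continuing the Customer/Product/Sales example, the following sketch expresses a physical model as DDL for SQLite, run through Python's built-in `sqlite3` module. SQLite is an assumption made so the example runs anywhere; a physical model for another DBMS would use that system's dialect, storage options, and security features (SQLite has no authorizations or access profiles). The table, column, index, and view names are illustrative.

```python
import sqlite3

# Physical model for the Customer/Product/Sales example, written for SQLite.
# Other DBMSs need their own DDL dialect, storage settings, and security rules.
DDL = """
CREATE TABLE customer (
    customer_id   INTEGER PRIMARY KEY,
    customer_name TEXT NOT NULL
);
CREATE TABLE product (
    product_id   INTEGER PRIMARY KEY,
    product_name TEXT NOT NULL,
    price        NUMERIC NOT NULL CHECK (price >= 0)
);
-- Cardinality: one customer (or product) to many sales.
-- Nullability: NOT NULL makes both sides of each relationship mandatory.
CREATE TABLE sale (
    sale_id     INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    product_id  INTEGER NOT NULL REFERENCES product(product_id),
    quantity    INTEGER NOT NULL CHECK (quantity > 0)
);
CREATE INDEX idx_sale_customer ON sale(customer_id);
CREATE VIEW customer_sales AS
    SELECT c.customer_name, p.product_name, s.quantity
    FROM sale AS s
    JOIN customer AS c ON c.customer_id = s.customer_id
    JOIN product AS p ON p.product_id = s.product_id;
"""

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript(DDL)

# Sample rows, made up for illustration.
conn.execute("INSERT INTO customer VALUES (1, 'Asha')")
conn.execute("INSERT INTO product VALUES (10, 'Laptop', 899.00)")
conn.execute("INSERT INTO sale VALUES (100, 1, 10, 2)")

rows = conn.execute("SELECT * FROM customer_sales").fetchall()
print(rows)  # [('Asha', 'Laptop', 2)]
```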

Types of Data Modeling

While various data modeling approaches exist, their main principles remain the same. The following are some of the most popular types of data modeling:

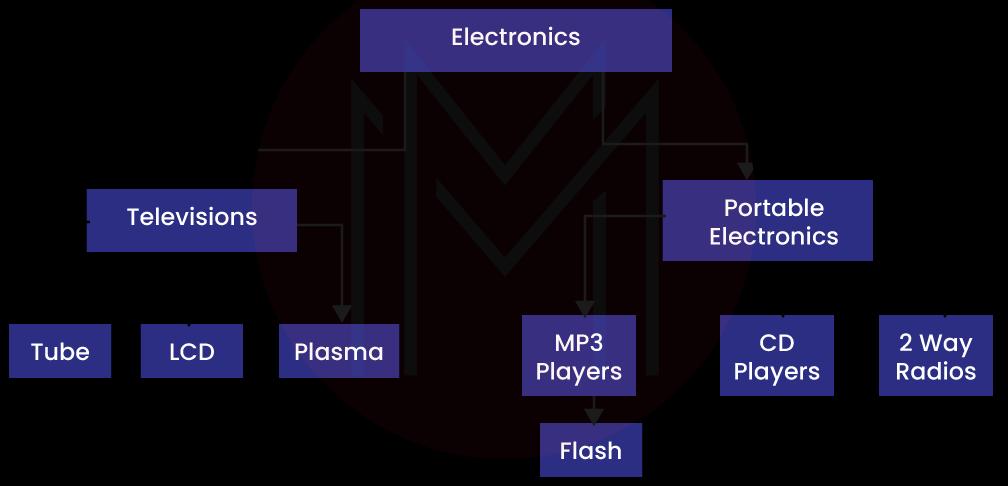

1. Hierarchical Data Model

This database modeling approach uses a tree-like structure to organize the data. Every record has a single parent, and the whole tree has a single root. Sibling records are kept in a particular order, which is the physical order in which the information is stored. This modeling method can be applied to many real-world relationships.

The hierarchical data model arranges data into a tree-like structure with a single root that links all the data. From this root, the tree branches out, connecting child nodes to parent nodes, and every child node has only one parent node. Data is organized through one-to-many relationships between two different record types. For example, a university department contains a group of professors, students, and courses. The following image represents the hierarchical data modeling example.

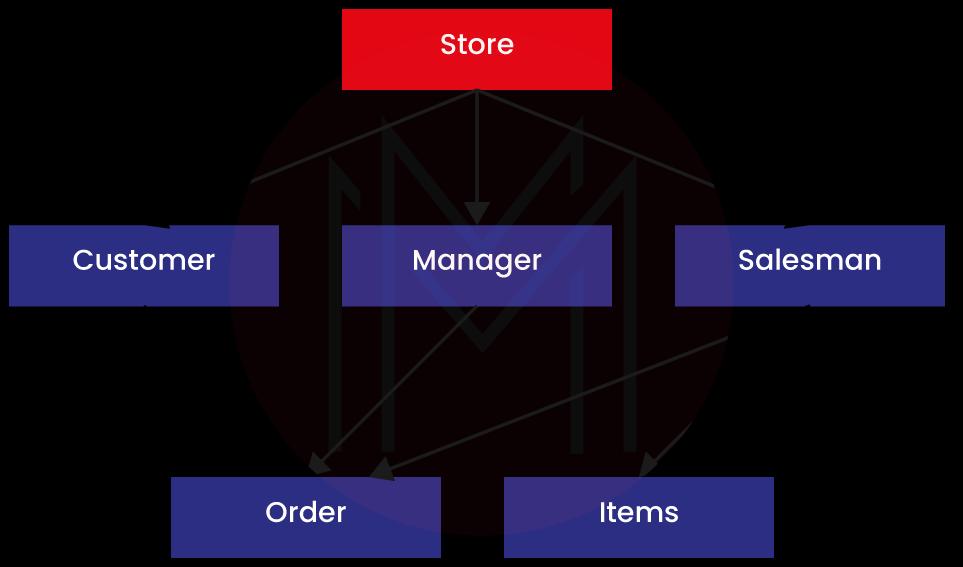
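A hierarchical model maps naturally onto a tree in code. This minimal Python sketch (the `Node` class and the labels are hypothetical) enforces the single-parent rule by giving every record exactly one `parent` reference, and it keeps siblings in insertion order, mirroring the university department example.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

@dataclass(eq=False)
class Node:
    """A record in a hierarchical model: exactly one parent (None for the root)."""
    label: str
    parent: Optional[Node] = field(default=None, repr=False)
    children: list[Node] = field(default_factory=list, repr=False)

    def add_child(self, label: str) -> Node:
        """Create a child record; siblings keep their insertion order."""
        child = Node(label, parent=self)
        self.children.append(child)
        return child

    def path(self) -> str:
        """Follow the single-parent chain up to the root."""
        parts = []
        node: Optional[Node] = self
        while node is not None:
            parts.append(node.label)
            node = node.parent
        return " / ".join(reversed(parts))

university = Node("University")
department = university.add_child("Computer Science Department")
for group in ("Professors", "Students", "Courses"):
    department.add_child(group)

print(department.children[2].path())
# University / Computer Science Department / Courses
```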

2. Network Model

The Network Model extends the Hierarchical Model by allowing a record to have multiple parent records. It models relationships as sets of related records, based on mathematical set theory. Each set contains one parent (owner) record and multiple child (member) records. Because a record can be a member of several sets, the model can express complex relationships. The following image is an example of Network Data Modeling.

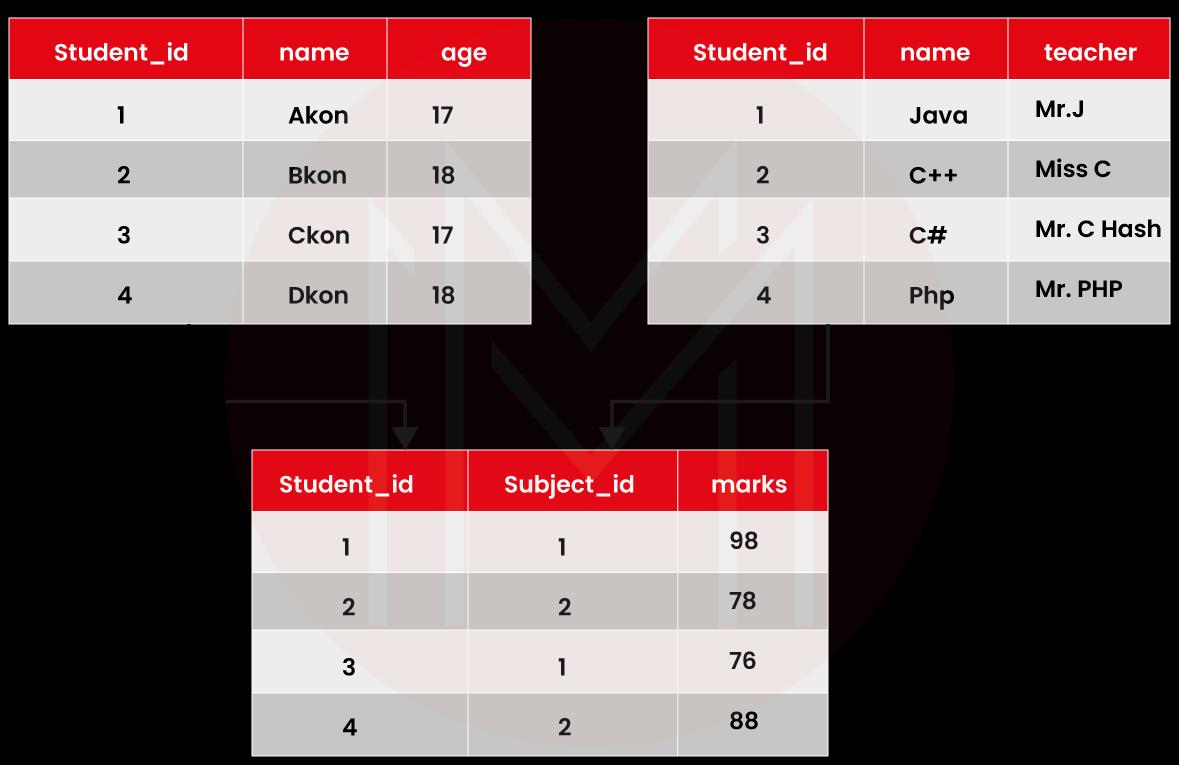
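The owner/member sets described above can be sketched in a few lines of Python. The `NetworkDB` class, its method names, and the sample records are hypothetical; the point is only that one record ("Databases" here) can belong to two sets and therefore has two parents, which a hierarchical tree cannot express.

```python
from __future__ import annotations

from collections import defaultdict

class NetworkDB:
    """Hypothetical sketch of network-model sets: each set links one owner
    (parent) record to many member (child) records, and a member may
    belong to several sets, so it can have several parents."""

    def __init__(self) -> None:
        # (set_type, owner) -> ordered list of member records
        self._sets: defaultdict[tuple[str, str], list[str]] = defaultdict(list)

    def connect(self, set_type: str, owner: str, member: str) -> None:
        self._sets[(set_type, owner)].append(member)

    def members(self, set_type: str, owner: str) -> list[str]:
        return list(self._sets.get((set_type, owner), []))

    def owners(self, member: str) -> list[tuple[str, str]]:
        """All (set_type, owner) pairs whose set contains the record."""
        return [key for key, records in self._sets.items() if member in records]

db = NetworkDB()
db.connect("teaches", "Prof. Rao", "Databases")
db.connect("offers", "CS Department", "Databases")
db.connect("offers", "CS Department", "Algorithms")

print(db.owners("Databases"))
# [('teaches', 'Prof. Rao'), ('offers', 'CS Department')]
```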

3. Relational Model

In 1970, IBM researcher Edgar F. Codd proposed the relational model as an alternative to the hierarchical model. Developers do not have to define the data access path; tables are used to manage the data directly. This data model reduces program complexity, and it does not require in-depth knowledge of the enterprise's physical data storage. The model was soon paired with SQL, which became its standard query language.

Tables act as data structures in relational data models. Each row of a table holds the data for one instance of the category the table represents. In the relational model, tables are called relations.

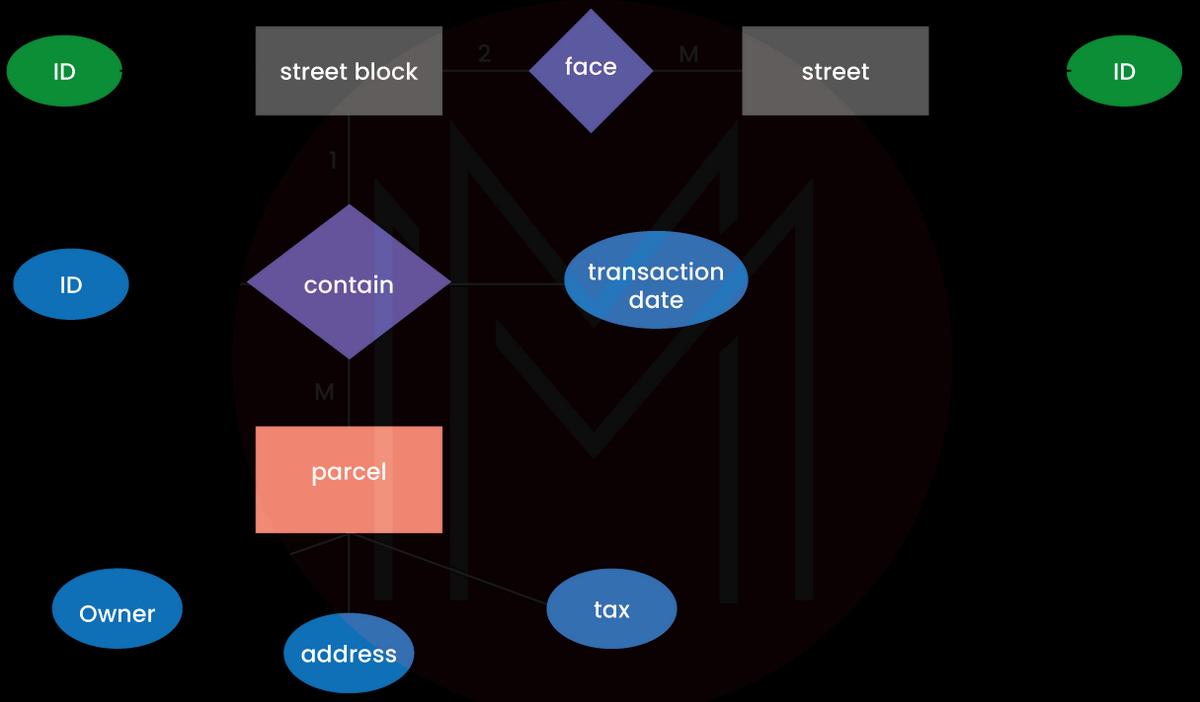
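A short SQLite session through Python's `sqlite3` module illustrates the two ideas above: data lives in tables (relations), and a query states what to retrieve without spelling out an access path. SQLite, the table names, and the sample rows are assumptions made for illustration.

```python
import sqlite3

# Each table is a relation: a set of rows that share the same columns.
conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE department (dept_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE professor (
    prof_id INTEGER PRIMARY KEY,
    name    TEXT NOT NULL,
    dept_id INTEGER REFERENCES department(dept_id)
);
INSERT INTO department VALUES (1, 'Computer Science'), (2, 'Mathematics');
INSERT INTO professor VALUES (1, 'Rao', 1), (2, 'Iyer', 2), (3, 'Khan', 1);
""")

# The query says WHAT to retrieve; the DBMS chooses HOW (the access path).
query = """
SELECT p.name
FROM professor AS p
JOIN department AS d ON d.dept_id = p.dept_id
WHERE d.name = ?
ORDER BY p.name
"""
cs_professors = [row[0] for row in conn.execute(query, ("Computer Science",))]
print(cs_professors)  # ['Khan', 'Rao']
```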

4. Entity-Relationship Model

The entity-relationship (E-R) model describes entities and the relationships between them, usually drawn as a graphical E-R diagram. The main components of an E-R model are entity sets, relationship sets, attributes, and constraints.

An entity is a real-world object or piece of data. It has properties known as attributes, and each attribute has a set of permitted values known as its domain. A relationship is a link between two or more entities.

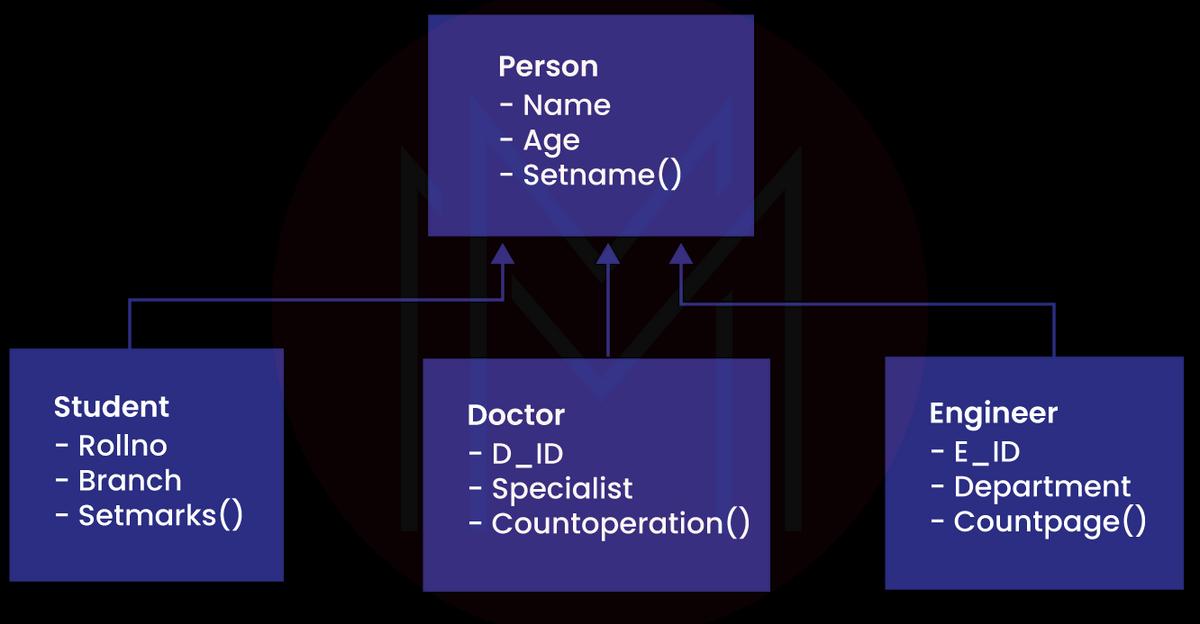
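The E-R vocabulary (entity set, attribute, domain, relationship set) can be written down directly in Python. This is a hypothetical sketch, not a standard E-R library: each domain is modeled as a predicate, and a relationship instance is only created when every participating entity is valid.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Attribute:
    name: str
    domain: Callable[[object], bool]  # predicate that defines the permitted values

@dataclass(frozen=True)
class EntitySet:
    name: str
    attributes: tuple[Attribute, ...]

    def is_valid(self, entity: dict) -> bool:
        """True if the entity has every attribute and each value is in its domain."""
        return all(a.name in entity and a.domain(entity[a.name]) for a in self.attributes)

@dataclass(frozen=True)
class RelationshipSet:
    name: str
    participants: tuple[EntitySet, ...]

    def link(self, *entities: dict) -> tuple[dict, ...]:
        """Create one relationship instance after validating each participant."""
        if len(entities) != len(self.participants):
            raise ValueError("wrong number of participating entities")
        for entity_set, entity in zip(self.participants, entities):
            if not entity_set.is_valid(entity):
                raise ValueError(f"invalid {entity_set.name}: {entity}")
        return entities

def positive_int(value: object) -> bool:
    return isinstance(value, int) and value > 0

def non_empty_text(value: object) -> bool:
    return isinstance(value, str) and value != ""

student = EntitySet("Student", (Attribute("student_id", positive_int),
                                Attribute("name", non_empty_text)))
course = EntitySet("Course", (Attribute("title", non_empty_text),))
enrolls_in = RelationshipSet("enrolls_in", (student, course))

print(student.is_valid({"student_id": 7, "name": "Meera"}))   # True
print(student.is_valid({"student_id": -1, "name": "Meera"}))  # False: -1 is outside the domain
```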

5. Object-Oriented Database Model

An object-oriented database stores data as objects, each combining characteristics (attributes) with the methods that operate on them. Hypertext databases, multimedia databases, and other kinds of object-oriented databases are available. Although it can incorporate tables, this kind of data model is called a post-relational database model because it is not restricted to tables; such models are also called hybrid models. The following image is an example of an Object-Oriented Database Model.
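As a rough illustration of the object-oriented idea in code, the Python sketch below bundles data with methods and links objects by direct reference rather than by foreign keys. The `Document` and `HypertextDocument` classes are hypothetical and merely echo the hypertext database example; real object-oriented databases add persistence, querying, and transactions on top of this.

```python
from __future__ import annotations

from dataclasses import dataclass, field

@dataclass(eq=False)
class Document:
    """An object bundles its data (attributes) with its behaviour (methods)."""
    title: str
    body: str

    def word_count(self) -> int:
        return len(self.body.split())

@dataclass(eq=False)
class HypertextDocument(Document):
    """Inherits Document's attributes and methods and adds links to other objects."""
    links: list[HypertextDocument] = field(default_factory=list)

    def link_to(self, other: HypertextDocument) -> None:
        self.links.append(other)  # a direct object reference, not a foreign key

home = HypertextDocument("Home", "Welcome to the course catalog")
databases = HypertextDocument("Databases", "Relational and object models")
home.link_to(databases)

print(home.word_count(), [doc.title for doc in home.links])  # 5 ['Databases']
```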

6. Object-Relational Model

The Object-Relational model combines the relational model and the object-oriented model. It lets you incorporate functions into a table.

An Object-Relational Data Model integrates features of both the object-oriented and relational database models. Like the object-oriented paradigm, it supports objects, classes, inheritance, and related features; like the relational model, it supports tabular structures, data types, and related features.
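The following sketch imitates one object-relational idea, a user-defined type stored in an ordinary table column, using the adapter and converter hooks of Python's `sqlite3` module. SQLite itself is not an object-relational DBMS, so this is only an approximation; systems such as PostgreSQL support user-defined types natively (for example with `CREATE TYPE`). The `Money` type and its `"cents;currency"` text encoding are hypothetical.

```python
import sqlite3
from dataclasses import dataclass

@dataclass(frozen=True)
class Money:
    """A hypothetical user-defined type stored inside an ordinary table column."""
    amount_cents: int
    currency: str

    def __str__(self) -> str:
        return f"{self.amount_cents / 100:.2f} {self.currency}"

def adapt_money(value: Money) -> str:
    """Python object -> column value when writing."""
    return f"{value.amount_cents};{value.currency}"

def convert_money(raw: bytes) -> Money:
    """Column value -> Python object when reading a column declared as 'money'."""
    cents, currency = raw.decode().split(";")
    return Money(int(cents), currency)

sqlite3.register_adapter(Money, adapt_money)
sqlite3.register_converter("money", convert_money)

# PARSE_DECLTYPES applies the converter to columns whose declared type is "money".
conn = sqlite3.connect(":memory:", detect_types=sqlite3.PARSE_DECLTYPES)
conn.execute("CREATE TABLE product (name TEXT, price money)")
conn.execute("INSERT INTO product VALUES (?, ?)", ("Laptop", Money(89900, "USD")))
price = conn.execute("SELECT price FROM product").fetchone()[0]

print(type(price).__name__, price)  # Money 899.00 USD
```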

Dimensions and Facts

- Dimension Table: A table whose fields describe business elements, such as products, customers, or dates. It is referenced by one or more fact tables.

- Fact Table: A table that records measurements (facts), such as sales amounts, at a defined granularity. Facts can be additive or semi-additive.

7. Dimensional Modeling

It is a data warehouse design technique. It uses conformed dimensions and facts and makes the data easy to navigate. Dimensional modeling also speeds up performance-critical queries.
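A minimal star schema shows how facts and dimensions work together. The sketch uses SQLite through Python's `sqlite3` module; the table names, the one-row-per-product-per-day grain, and the sample figures are all made up for illustration. Revenue is an additive measure here, so it can be summed across any combination of dimensions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables: descriptive context, one row per member.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, year INTEGER, month INTEGER);

-- Fact table: one row per product per day (the grain), with additive measures.
CREATE TABLE fact_sales (
    date_key    INTEGER REFERENCES dim_date(date_key),
    product_key INTEGER REFERENCES dim_product(product_key),
    units_sold  INTEGER,
    revenue     REAL,
    PRIMARY KEY (date_key, product_key)
);

INSERT INTO dim_product VALUES
    (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture'), (3, 'Monitor', 'Electronics');
INSERT INTO dim_date VALUES (20240101, 2024, 1), (20240201, 2024, 2);
INSERT INTO fact_sales VALUES
    (20240101, 1, 3, 2700.0),
    (20240101, 2, 1, 150.0),
    (20240201, 1, 2, 1800.0),
    (20240201, 3, 4, 800.0);
""")

# Slice the additive measure by attributes taken from the dimension tables.
query = """
SELECT p.category, d.month, SUM(f.revenue)
FROM fact_sales AS f
JOIN dim_product AS p ON p.product_key = f.product_key
JOIN dim_date AS d ON d.date_key = f.date_key
GROUP BY p.category, d.month
ORDER BY p.category, d.month
"""
totals = conn.execute(query).fetchall()
for row in totals:
    print(row)
```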

Dimensional Modeling - Related Keys

To learn data modeling, it is vital to understand the keys. There are five different kinds of dimensional modeling keys:

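1. Primary or Alternate Keys: A primary key is a column (or set of columns) whose value uniquely identifies each record in a table. An alternate key is another candidate key that could also identify each record but was not chosen as the primary key.

2. Business or Natural Keys: A field with real-world business meaning that uniquely identifies an entity, such as an email address.

3. Composite or Compound Keys: A composite key is one in which more than one field is used to represent a key.

4. Foreign Keys: A column that references the primary key of another table.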

5. Surrogate Keys: Generally, it is an auto-generated field with no business meaning.
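The five key types can be seen side by side in a small SQLite schema run through Python's `sqlite3` module. This is a sketch under assumptions: the tables, the choice of email as the natural key, and the sample row are illustrative, and `violates` is a hypothetical helper that simply reports whether SQLite rejected a statement with an `IntegrityError`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE dim_customer (
    customer_key INTEGER PRIMARY KEY,   -- surrogate key: generated, no business meaning
    email        TEXT NOT NULL UNIQUE,  -- natural (business) key; also an alternate key
    name         TEXT NOT NULL
);
CREATE TABLE order_line (
    order_no     INTEGER NOT NULL,
    line_no      INTEGER NOT NULL,
    customer_key INTEGER NOT NULL REFERENCES dim_customer(customer_key),  -- foreign key
    amount       REAL NOT NULL,
    PRIMARY KEY (order_no, line_no)     -- composite key: two fields form the primary key
);
""")

def violates(sql: str, params: tuple = ()) -> bool:
    """Hypothetical helper: True if SQLite rejects the statement with a constraint error."""
    try:
        conn.execute(sql, params)
    except sqlite3.IntegrityError:
        return True
    return False

cur = conn.execute(
    "INSERT INTO dim_customer (email, name) VALUES (?, ?)", ("asha@example.com", "Asha")
)
customer_key = cur.lastrowid  # the surrogate value SQLite assigned
conn.execute("INSERT INTO order_line VALUES (1, 1, ?, 250.0)", (customer_key,))

# Duplicate natural key, duplicate composite key, and a missing parent row:
print(violates("INSERT INTO dim_customer (email, name) VALUES ('asha@example.com', 'Other')"))  # True
print(violates("INSERT INTO order_line VALUES (1, 1, ?, 10.0)", (customer_key,)))  # True
print(violates("INSERT INTO order_line VALUES (2, 1, 999, 10.0)"))  # True
```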

Data Modeling Techniques

Data Modeling applies particular methodologies and techniques to data to convert it into a valuable form. This is done with data modeling tools, which help create the database structure from diagrams. These tools make it easy to connect data and build a data structure that fits the requirements.
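Data modeling tools differ in how they work, but the core of creating the database structure from diagrams (often called forward engineering) can be sketched in a few lines. Here a plain Python dict stands in for the diagram; the `MODEL` structure and the `forward_engineer` function are hypothetical and do not reflect how any specific tool works, and SQLite is used only so that the generated DDL can be executed.

```python
from __future__ import annotations

import sqlite3

# Hypothetical, tool-agnostic stand-in for a diagram: table -> {column: definition}.
MODEL = {
    "customer": {"customer_id": "INTEGER PRIMARY KEY", "name": "TEXT NOT NULL"},
    "product": {"product_id": "INTEGER PRIMARY KEY", "name": "TEXT NOT NULL",
                "price": "NUMERIC"},
}

def forward_engineer(model: dict[str, dict[str, str]]) -> list[str]:
    """Turn the model description into CREATE TABLE statements.

    Identifiers are not quoted or validated, so use this only with trusted input.
    """
    statements = []
    for table, columns in model.items():
        column_sql = ",\n    ".join(f"{name} {spec}" for name, spec in columns.items())
        statements.append(f"CREATE TABLE {table} (\n    {column_sql}\n);")
    return statements

conn = sqlite3.connect(":memory:")
for statement in forward_engineer(MODEL):
    conn.execute(statement)

tables = [row[0] for row in conn.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(tables)  # ['customer', 'product']
```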


Data Modeling Advantages

- Data Modeling accurately represents the data objects used by the functional team.

- Enterprises have a massive amount of data in several formats. For unstructured data, data modeling provides a structured framework.

- Data Modeling allows us to fetch data from the database and create different reports. Through these reports, it indirectly supports data analysis.

- It enhances coordination with the business.

- Data Modeling improves business intelligence by making the data modelers work closely with the realities of the project.

Data Modeling Disadvantages

- Developing a data model is a time-consuming process, and you must understand the physical characteristics of the data storage.

- This method can make application development and management complex.

- The data model is not particularly user-friendly. A few enhancements in the method need

Big Data Modeling FAQs

1. What is Data Modeling?

Data Modeling is the process of diagramming data flows. A data model uses symbols and text to depict the data flows. It offers a blueprint for creating a new database or reengineering a legacy application. Data modeling thus allows enterprises to use their data efficiently to meet the business's information requirements.

2. What are the different kinds of data modeling techniques?

Following are the different data modeling techniques:

- Hierarchical Data Modeling

- Relational Data Modeling

- Dimensional Modeling

- Graph Modeling

- Network Modeling

- Entity-Relationship Modeling

3. How can AWS help with Data Modeling?

AWS offers more than 15 database engines that support different data models. For example, you can use Amazon Relational Database Service (Amazon RDS) to implement relational data models and Amazon Neptune to implement graph data models.


4. Is big data difficult to learn?

While it is not an easy skill to learn, it is not particularly hard to understand how big data works. To get a clear idea of big data, you just have to master how data is processed, stored, analyzed, and harvested.

5. Which tool is used for data modeling?

There are several data modeling tools out there that help you create the database structure from the diagrams. Following is the list of the top 10 robust data modeling tools you should know.

- erwin Data Modeler

- ER/Studio

- Archi

- DbSchema Pro

- Lucidchart

- SQL Database Modeler

- IBM InfoSphere Data Architect

- pgModeler

- MagicDraw

- DTM Data Modeler

6. What is the future of Big Data?

Big data is a constantly growing domain, and it is gaining popularity through its many applications across enterprises. A career in the field is therefore one of the best choices for anyone looking for a stable job with great benefits.

7. What are the risks of Big Data?

The following are the Different Risks of Big Data:

- Data Security

- Costs

- Deployment Time

- Data Privacy

- Scalability

- Bad Data

- Improved Transparency

- Accessibility

8. Who uses Big Data?

Big Data is used in the following top 10 industries:

- Healthcare Providers

- Banking and Securities

- Government

- Education

- Manufacturing and Natural Resources

- Communications, Media, and Entertainment

- Insurance

- Retail and Wholesale trade

- Energy and Utilities

- Transportation

9. What are the different kinds of models in Big data?

The following are the different kinds of data models for Big data:

- Relational Data Model

- Object-oriented Data Model

- Dimensional Data Model

- Entity-Relationship Data Model

- Hierarchical Data Model

Conclusion

Data Modeling is the practice of building a visual representation of all or part of an information system to show the connections between data points and structures. It helps enterprises identify the types of data stored in the enterprise data warehouse. In Big data, data modeling is essential because it allows enterprises to analyze massive amounts of data using well-designed data modeling methods. Big Data developers and data engineers must learn data modeling to organize data in visual patterns and analyze it. I hope this blog provides sufficient information about big data modeling; if you have any queries, let us know by commenting below.


Viswanath is a passionate content writer at Mindmajix. He has expertise in trending domains like Data Science, Artificial Intelligence, Machine Learning, Blockchain, etc. His articles help learners gain insights into these domains. You can reach him on LinkedIn.