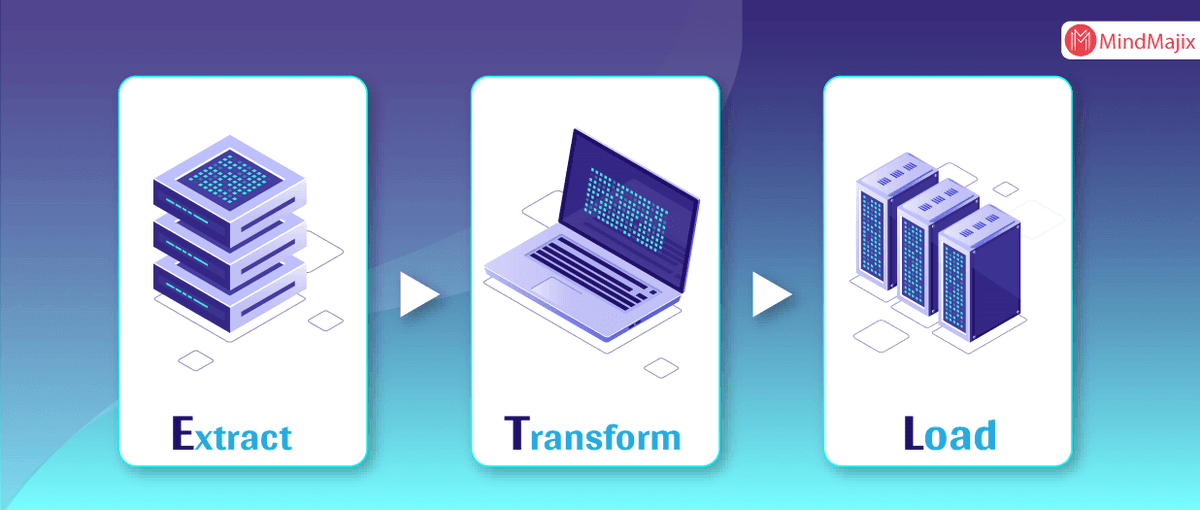

An overview of Informatica is given in the previous article, Informatica PowerCenter. Informatica is built around the ETL concept, which stands for Extract, Transform, Load.

What is ETL?

ETL is a data warehousing process in which data is extracted from numerous different source databases, transformed to fit business needs, and loaded into a target system such as a data warehouse.

Who Invented ETL?

ETL has no single inventor; the practice grew out of data warehousing as organizations began consolidating data from multiple databases. Ab Initio, a multinational software company based in Lexington, Massachusetts, United States, is known for its GUI-based parallel processing software for ETL.

Where do Informatica-ETL concepts apply in Real-Time Business?

Informatica is a company that offers data integration products for ETL, data masking, data quality, data replication, data virtualization, master data management, and more. Informatica ETL is one of the most commonly used data integration tools for connecting to and fetching data from different data sources.

Some typical use cases for this software are:

1. Migrating an organization from an existing software system to a new database system.

2. Setting up a data warehouse in an organization, where data is moved from the production or data-gathering system to the warehouse.

3. Cleansing data, where corrupt or inaccurate records in a database are detected and then corrected or removed.

How is the Informatica ETL tool implemented?

1. Extract:

Data is extracted from different sources. Common data-source formats include relational databases, XML and flat files, and Information Management System (IMS) or other data structures. The extracted data is validated immediately to confirm that the values pulled from the sources fall within the expected domain.

2. Transform:

A set of rules or logical functions, such as data cleaning, is applied to the extracted data to prepare it for loading into the target. Cleaning the data means passing only the "proper" data on to the target. Many transformation types can be applied as the business requires: column- or row-based transformations, coded and calculated values, key-based transformations, joins across different data sources, and so on.

3. Load:

The data is simply loaded into the target data source.

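The three phases can run in parallel: while data is still being extracted, the transformation of already-extracted data can proceed, and transformed data can be loaded without waiting for the earlier phases to finish.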
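
The three phases can be sketched in a few lines of Python. This is only an illustration of the idea, not how Informatica implements it: the sample data, the `sales` table, and the function names are all assumptions made for the example. Generators are used so that each row passes through extract, transform, and load before the next row is read, which mimics the overlap between phases on a small scale.

```python
import csv
import io
import sqlite3

# Hypothetical source data; a real job would read from files or databases.
SOURCE_CSV = """id,name,amount
1,alice,10.50
2,bob,not_a_number
3,carol,7.25
"""

def extract(text):
    """Extract: yield one raw record (a dict) per CSV row."""
    yield from csv.DictReader(io.StringIO(text))

def transform(rows):
    """Transform: validate and clean; pass only 'proper' rows downstream."""
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # reject rows whose amount is not numeric
        yield (int(row["id"]), row["name"].title(), amount)

def load(rows, conn):
    """Load: insert the cleaned rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

def run_etl(text, conn):
    # The generators are lazy, so rows stream through all three phases
    # one at a time instead of each phase finishing before the next starts.
    load(transform(extract(text)), conn)

if __name__ == "__main__":
    target = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse
    run_etl(SOURCE_CSV, target)
    print(target.execute("SELECT * FROM sales ORDER BY id").fetchall())
```

The row for `bob` is dropped in the transform step because its amount fails validation, so only the two clean rows reach the target table.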

What are the other features of an ETL tool?

1. Parallel Processing

ETL is implemented using a concept called parallel processing: a computation carried out by multiple processes executing simultaneously. ETL can use three types of parallelism:

1. Data parallelism: a single file is split into smaller data files that are processed at the same time.

2. Pipeline parallelism: several components run simultaneously on the same data.

3. Component parallelism: the executables (processes) involved run simultaneously on different data to do the same job.
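
As a rough illustration of the first type, data parallelism, the sketch below splits one data set into chunks and applies the same cleaning step to each chunk concurrently. The function names and the cleaning rule are assumptions made for the example. Threads are used only to keep it short; for CPU-heavy work in Python, separate processes would be the more usual choice.

```python
from concurrent.futures import ThreadPoolExecutor

def split(records, parts):
    """Split one data set into at most `parts` contiguous chunks."""
    size = max(1, -(-len(records) // parts))  # ceiling division
    return [records[i:i + size] for i in range(0, len(records), size)]

def clean_chunk(chunk):
    """The same transformation, applied independently to each chunk."""
    return [value.strip().upper() for value in chunk]

def parallel_clean(records, workers=3):
    """Clean all records by processing the chunks concurrently."""
    chunks = split(records, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() returns results in chunk order, so output order matches input.
        results = pool.map(clean_chunk, chunks)
        return [value for chunk in results for value in chunk]

if __name__ == "__main__":
    print(parallel_clean([" a", "b ", " c ", "d", "e"], workers=2))
```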

2. Data Reuse, Data Re-Run, and Data Recovery

Each data row is given a row_id, and each piece of the process is given a run_id, so that the data can be tracked by these IDs. Checkpoints are created to mark certain phases of the process as completed. After a failure, these checkpoints show which parts of the task still need to be re-run to complete it.
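
A minimal sketch of this idea follows, assuming the checkpoint is simply a set of completed row_ids held in memory. A real tool would persist its checkpoints, and the function and parameter names here are hypothetical.

```python
import uuid

def run_with_checkpoints(rows, process, checkpoint, run_id=None):
    """Process (row_id, payload) pairs, skipping rows already checkpointed.

    `checkpoint` is a set of completed row_ids that survives between runs.
    """
    run_id = run_id or uuid.uuid4().hex  # identifies this attempt
    for row_id, payload in rows:
        if row_id in checkpoint:
            continue  # finished in an earlier run, so it is not redone
        process(run_id, row_id, payload)
        checkpoint.add(row_id)  # mark complete only after success
    return run_id
```

If `process` raises an exception partway through, the rows completed so far remain in `checkpoint`, and calling `run_with_checkpoints` again with the same set resumes from the first unfinished row under a new run_id.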

3. Visual ETL

Advanced ETL tools such as PowerCenter and Metadata Manager help you produce structured data faster and with more automation, shaped to your business needs.

You can place ready-made database and metadata modules, using a drag-and-drop mechanism, onto a solution that automatically configures, connects, extracts, transforms, and loads data into your target system.

What are the characteristics of a Good ETL Tool?

- It should increase data connectivity and scalability.

- It should be capable of connecting to multiple relational databases.

- It should support CSV data files so that end users can import them with little or no code.

- It should have a user-friendly GUI that lets end users easily integrate data with a visual mapper.

- It should allow the end-users to customize the data modules as per their business needs.

What is the Future of ETL?

Informatica ETL products and services are intended to improve business operations, reduce the burden of big data management, provide strong data security, support data recovery under unforeseen conditions, and automate the development and design of visual data. They are broadly divided into:

- ETL with Big Data

- ETL with Cloud

- ETL with SAS

- ETL with Hadoop

- ETL with metadata

- ETL as self-service access

- Mobile-optimized solutions, and many more.

ETL is expanding across newer technologies to meet the present-day enterprise's need to be faster to value, staff, integrate, trust, innovate, and deploy.

Why is Informatica ETL booming?

1. Accurate and automated deployments

2. Minimizing the risks involved in adopting new technologies

3. Highly secured and trackable data

4. Self-owned and customizable access permissions

5. Exclusive data disaster recovery, data monitoring, and data maintenance

6. Attractive and artistic visual data delivery

7. Centralized and cloud-based server

8. Solid firmware protection for data and the organization's network protocols

What are the side effects of automated data integration systems such as Informatica ETL?

Anything is good within limits. A data integration tool, however, makes the organization continuously dependent on it. Because it is a machine, it works only when it is given programmed input. There is also a real risk of a complete system crash, and how severe that is depends on how well the data recovery systems are built. A small hole is enough for a rat to make its nest in our house; similarly, any misuse of even simple data can lead to a huge loss for the organization. Negligence and carelessness are the enemies of these kinds of systems.

Ravindra Savaram is a Technical Lead at Mindmajix.com. His passion lies in writing articles on the most popular IT platforms including Machine learning, DevOps, Data Science, Artificial Intelligence, RPA, Deep Learning, and so on. You can stay up to date on all these technologies by following him on LinkedIn and Twitter.