
DevOps teams frequently struggle to manage an application's dependencies and technology stack across numerous cloud and development environments. As part of their standard duties, they must keep the application functioning and stable regardless of the underlying platform on which it runs. Containerization addresses this problem: it packages an application's source code, along with the dependencies, libraries, and configuration files it needs to run correctly, into a standalone executable unit.

Initially, containers were not widely adopted, primarily because they were difficult to use. Since Docker arrived and addressed these obstacles, containers have become ubiquitous. Docker's popularity has grown rapidly in recent years, and it has changed how software is conventionally developed. Docker containers make efficient use of infrastructure and let development scale while remaining user-friendly.


What is Docker?

Docker is an open-source platform for creating, deploying, running, updating, and managing containers. Containers are standardized, executable components that include the application's source code and the OS libraries and dependencies needed to run the application.

Due to its flexibility, portability, and ability to scale, Docker has become an essential part of modern software development methods like DevOps and microservices. Docker gives each application its own isolated space to run in, which makes applications easy to move, consistent, and safer across different environments. Developers package an app with all of its dependencies, libraries, and configuration files into a single unit called a container, which can then run on any computer that has Docker installed.

What does Docker do?

Cloud computing has made an organization's infrastructure programmable. It brought automation to the software development lifecycle, from resource allocation to configuring, deploying, operating, and monitoring applications. This led to the DevOps culture, in which developers package the whole application as a single Docker image.

Docker is needed because managing and deploying apps across different environments, like development, testing, and production, is hard. Traditional approaches, like installing dependencies and libraries directly on the host machine, can cause conflicts and inconsistencies between environments.

Here are some points describing what Docker does:

- Docker offers tools for creating and managing containers, such as Dockerfiles for defining container images and the Docker CLI for container management.

- Docker makes developing, testing, and deploying applications easier across multiple platforms and environments, reducing the time and effort required to manage infrastructure and increasing software delivery speed and reliability.

- Docker is a containerization platform that allows developers to create, ship, and run containerized applications.

- Docker offers a platform for distributing and sharing container images via public and private registries.

- Docker allows developers to easily package their applications and their dependencies, libraries, and configuration files into a single unit known as a container, which can be run on any machine that has Docker installed.

- Containers are lightweight, standalone executable packages that include all of the dependencies required to run an application, ensuring consistent behavior across multiple environments.

- Docker's flexibility, portability, and scalability have made it essential to modern software development practices such as microservices and DevOps.

Docker Architecture And Its Components

Docker is a framework for building, deploying, and running applications in isolated containers. Its architecture is designed to simplify the packaging and distribution of applications.

Docker's architecture is made up of six main components:

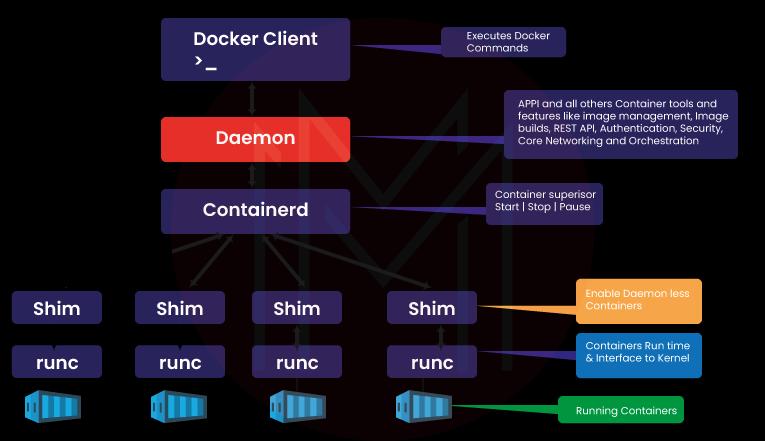

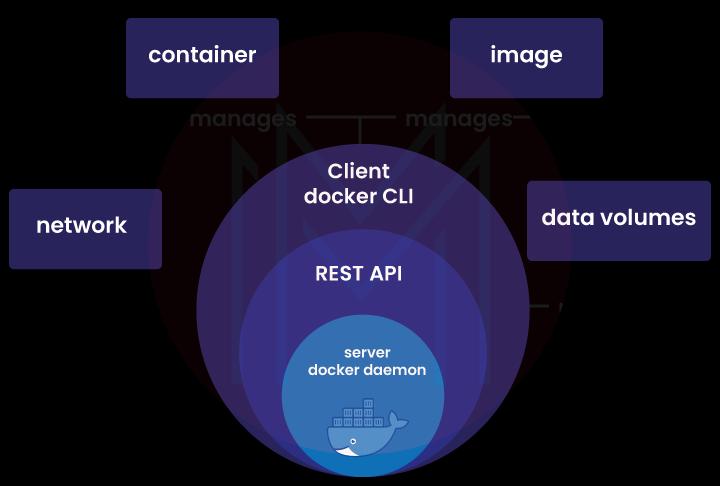

1. The Docker Engine

- Docker Daemon: A server process that runs on the Docker host and manages Docker objects such as containers, images, volumes, and networks. The daemon listens for requests from the Docker client and carries out the requested operations.

- Docker API: A REST API that enables developers to interact with the daemon over HTTP.

- Docker CLI: A command-line client for communicating with the Docker daemon. It considerably simplifies container management and is one of the primary reasons developers like Docker.
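To make the relationship between the daemon and its REST API concrete, here is a minimal Python sketch that sends a plain HTTP request to the daemon over its UNIX socket, using only the standard library. It assumes a Linux host where the daemon listens on `/var/run/docker.sock` and that the current user may read that socket; the class and function names are illustrative, not part of Docker.

```python
import http.client
import json
import socket

# Assumption: the daemon listens on the default Linux socket path.
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that connects to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" only fills the Host header; it is never resolved.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.settimeout(self.timeout)
        self.sock.connect(self.socket_path)


def engine_get(path, socket_path=DOCKER_SOCKET):
    """Send GET <path> to the daemon and return the decoded JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        return json.loads(conn.getresponse().read())
    finally:
        conn.close()


if __name__ == "__main__":
    try:
        # /version is an Engine API endpoint that reports daemon details.
        print("Engine version:", engine_get("/version").get("Version"))
    except (OSError, http.client.HTTPException, ValueError) as exc:
        print("Could not query the Docker daemon:", exc)
```

The Docker CLI does essentially the same thing on your behalf: each command becomes one or more API requests to the daemon.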

2. Docker Client

The Docker client communicates with the Docker daemon (the server) through commands and the REST API. Whenever a user runs a docker command in a terminal, the client translates it into a REST API request and sends it to the Docker daemon, which carries it out.

The Docker client's command-line interface (CLI) is used to run commands such as:

- docker pull

- docker build

- docker run
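As a rough illustration of the three commands listed above, the following Python sketch builds each invocation as an argument list and prints it. The image names `nginx:latest` and `myapp:1.0` and the build context `.` are assumptions chosen for the example; nothing is executed unless you pass a list to `subprocess.run` yourself.

```python
import shlex


def docker_cmd(*args):
    """Return the argv list for one docker CLI invocation."""
    return ["docker", *args]


# Image names and the build context are illustrative assumptions.
PULL = docker_cmd("pull", "nginx:latest")            # download an image
BUILD = docker_cmd("build", "-t", "myapp:1.0", ".")  # build from ./Dockerfile
RUN = docker_cmd("run", "--rm", "myapp:1.0")         # start a container


def as_shell(argv):
    """Render an argv list the way it would be typed in a terminal."""
    return shlex.join(argv)


if __name__ == "__main__":
    for argv in (PULL, BUILD, RUN):
        # To really execute a command: subprocess.run(argv, check=True)
        print("$", as_shell(argv))
```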

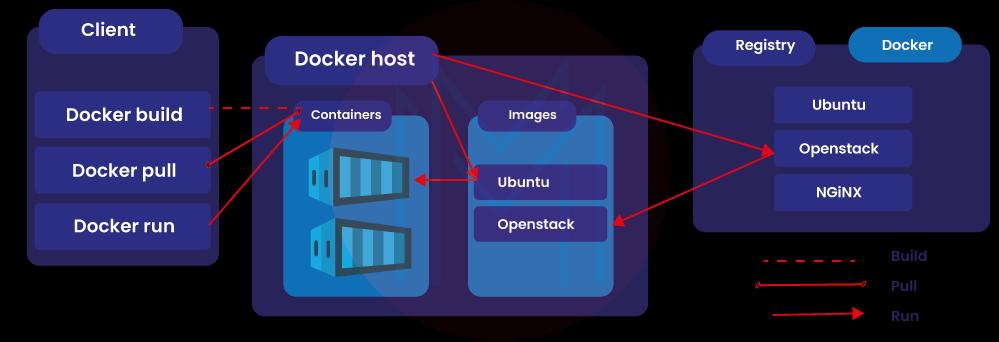

3. Docker Host

The Docker host provides a complete environment in which applications are launched and run. As mentioned earlier, the daemon manages everything that happens to the containers on the host and takes instructions from the command-line interface or the REST API. It can also communicate with other daemons to manage Docker services.

When a client requests an image that is not available locally, the Docker daemon pulls it from the configured registry. When a client requests a build, the daemon follows the instructions in a build file (the Dockerfile) to create an image, from which containers are then started. The build file can also specify additional components to install into the image and the command a container runs when it starts.

4. Docker Objects

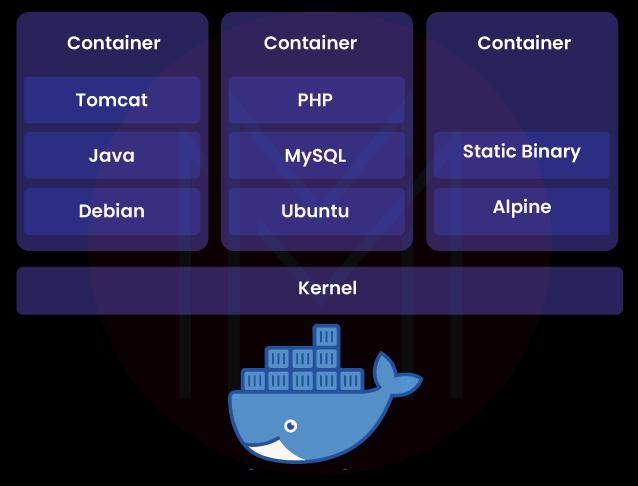

Docker objects are things like containers, images, volumes, and networks used when working with Docker.

- Docker Images: Docker images are the read-only binary templates used to create Docker containers. Once built, an image cannot be modified; changes produce a new image. A private container registry is used to share images among a company's employees, while a public container registry shares them with the general public. Docker images also carry metadata that describes what the container can do.

- Docker Containers: Containers are the building blocks of Docker. A container is a runnable instance of a Docker image that holds the whole package an application needs to run. Containers use very few resources, and they create a sandbox in which programs run the same way in every setting.

- Docker Volumes: Volumes are where Docker stores persistent data that is used by Docker containers. Docker manages them entirely via the Docker API or CLI. Volumes are supported by both Linux and Windows containers. For persisting data, volumes are generally preferred over the writable layer of a container. A volume does not increase the size of the container that uses it, and its data persists independently of the container's lifetime.

- Docker Networks: Docker networking is the channel through which isolated containers communicate with one another. Docker primarily makes use of the following five network drivers:

- Bridge

- Host

- None

- Overlay

- Macvlan
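The drivers listed above show up in two places on the command line: when creating a user-defined network and when attaching a container to a network. The Python sketch below builds both kinds of command as argument lists. The network name `app-net` and the helper names are assumptions for illustration; overlay and macvlan networks usually need further options (swarm mode, a parent interface, a subnet) that are omitted here.

```python
# The five drivers named in the list above.
NETWORK_DRIVERS = ("bridge", "host", "none", "overlay", "macvlan")

# "host" and "none" exist as built-in networks and are selected at run time;
# only these drivers are used here for creating user-defined networks.
CREATABLE_DRIVERS = ("bridge", "overlay", "macvlan")


def network_create_cmd(name, driver="bridge"):
    """argv for creating a user-defined network with the given driver."""
    if driver not in CREATABLE_DRIVERS:
        raise ValueError(f"cannot create a network with driver {driver!r}")
    return ["docker", "network", "create", "--driver", driver, name]


def run_on_network_cmd(image, network):
    """argv for starting a detached container attached to a network."""
    return ["docker", "run", "-d", "--network", network, image]


if __name__ == "__main__":
    print(" ".join(network_create_cmd("app-net")))
    print(" ".join(run_on_network_cmd("nginx:latest", "app-net")))
    # Built-in networks need no create step:
    print(" ".join(run_on_network_cmd("nginx:latest", "host")))
```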

5. Docker Registry

A Docker registry is a storage location for Docker images. Docker Hub is Docker's default public registry, although users can also set up their own private registries to store their images. When you issue a command like docker pull or docker run, the required image is downloaded from the configured registry if it is not already available locally. A docker push uploads an image to the specified registry.

Docker registries can be split into two categories:

- Public Registry: A registry that anyone can pull images from. Docker Hub is the default public registry.

- Private Registry: A registry with restricted access, used to share images within a company.
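Which registry an image comes from is encoded in the image name itself. The following Python sketch shows a simplified version of how a short name expands to a full reference: names without a registry host default to Docker Hub (`docker.io`), single-word names get the `library/` prefix used for official images, and a missing tag defaults to `latest`. The function name is hypothetical and the parsing is deliberately less strict than Docker's own; `registry.example.com` is a placeholder host.

```python
def normalize_image_ref(ref):
    """Split an image reference into (registry, repository, tag_or_digest).

    Simplified sketch: it does not validate characters the way Docker does.
    """
    name, _, digest = ref.partition("@")

    # A ':' after the last '/' separates the tag; an earlier ':' is a port.
    tag = ""
    last_colon = name.rfind(":")
    if last_colon > name.rfind("/"):
        name, tag = name[:last_colon], name[last_colon + 1:]

    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        # The first path component looks like a host name: a custom registry.
        registry, repository = first, rest
    else:
        # No registry given: fall back to Docker Hub.
        registry, repository = "docker.io", name
        if "/" not in repository:
            repository = "library/" + repository

    return registry, repository, digest or tag or "latest"


if __name__ == "__main__":
    for ref in ("nginx", "bitnami/redis:7",
                "registry.example.com:5000/team/app:1.0"):
        print(ref, "->", normalize_image_ref(ref))
```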

6. Docker Storage

Docker storage determines where a container's data is kept. Docker gives you these choices for storage:

- Data Volume: A data volume provides persistent storage. Volumes can be named, listed, and attached to containers.

- Directory Mount: A directory mount (also called a bind mount) makes a directory on the host available inside a container.

- Storage Plugins: Storage plugins let you connect to external storage platforms.
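The first two storage options map onto the `--mount` flag of `docker run`. The Python sketch below builds that flag for a named volume and for a host directory. The volume name `app-data`, the host path `/srv/app/config`, the image `myapp:1.0`, and the helper names are all assumptions made for the example.

```python
def mount_flag(kind, source, target, read_only=False):
    """Build a --mount flag for a named volume ("volume") or host dir ("bind")."""
    if kind not in ("volume", "bind"):
        raise ValueError(f"unsupported mount type: {kind!r}")
    parts = [f"type={kind}", f"source={source}", f"target={target}"]
    if read_only:
        parts.append("readonly")
    return ["--mount", ",".join(parts)]


def run_with_mounts(image, *mounts):
    """argv for a detached container with the given mount flags."""
    argv = ["docker", "run", "-d"]
    for mount in mounts:
        argv.extend(mount)
    argv.append(image)
    return argv


# Creating and listing named volumes.
CREATE_VOLUME = ["docker", "volume", "create", "app-data"]
LIST_VOLUMES = ["docker", "volume", "ls"]

if __name__ == "__main__":
    data = mount_flag("volume", "app-data", "/var/lib/app")
    config = mount_flag("bind", "/srv/app/config", "/etc/app", read_only=True)
    print(" ".join(CREATE_VOLUME))
    print(" ".join(run_with_mounts("myapp:1.0", data, config)))
```

The volume outlives any container that uses it, whereas the bind mount simply exposes whatever is in the host directory at that moment.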

Docker containers are created, run, and distributed through communication between the Docker client and the Docker daemon. The client and the daemon can run on the same machine, or a Docker client can connect to a Docker daemon hosted elsewhere. In both cases, they talk to one another through a REST API, over UNIX sockets or a network interface.

The Docker architecture can be summarized in these simple points:
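One common way to tell the client which daemon to use is the `DOCKER_HOST` environment variable. The Python sketch below interprets the two forms that match the transports described here: a `unix://` socket path and a `tcp://` address. The function name is hypothetical, the fallback port 2375 is the conventional unencrypted port and is treated as an assumption, and other mechanisms such as `ssh://` hosts or Docker contexts are not handled.

```python
import os
from urllib.parse import urlsplit

# Assumption: default local socket on Linux.
DEFAULT_HOST = "unix:///var/run/docker.sock"


def daemon_endpoint(environ=None):
    """Return how the client would reach the daemon, based on DOCKER_HOST."""
    env = os.environ if environ is None else environ
    url = urlsplit(env.get("DOCKER_HOST") or DEFAULT_HOST)

    if url.scheme == "unix":
        # Local daemon: the address is a filesystem path to the socket.
        return {"transport": "unix", "address": url.path}
    if url.scheme == "tcp":
        # Remote (or local) daemon reached over the network.
        return {"transport": "tcp", "address": (url.hostname, url.port or 2375)}
    raise ValueError(f"unsupported DOCKER_HOST scheme: {url.scheme!r}")


if __name__ == "__main__":
    print(daemon_endpoint({}))
    print(daemon_endpoint({"DOCKER_HOST": "tcp://10.0.0.5:2376"}))
```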

- The Docker client interacts with the Docker daemon.

- The Docker daemon builds, runs, and distributes the application on Docker containers.

- A Docker image is a read-only template with instructions for creating a Docker container.

- A Docker registry stores Docker images.
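The relationship between a read-only image and the containers created from it can be pictured with a small conceptual model. In the Python sketch below, image layers are read-only mappings and each container stacks its own empty writable layer on top. This is only an analogy built on `collections.ChainMap`; it is not how Docker implements its storage, and the file paths and contents are invented for the example.

```python
from collections import ChainMap
from types import MappingProxyType

# Read-only "image layers": file path -> content (illustrative data).
base_layer = MappingProxyType({"/etc/os-release": "debian",
                               "/app/config": "default"})
app_layer = MappingProxyType({"/app/main.py": "print('hi')",
                              "/app/config": "production"})


def start_container(*image_layers):
    """Return a container view: a fresh writable layer over the image layers.

    Layers are given bottom-up; later layers shadow earlier ones.
    """
    writable = {}
    # ChainMap looks up keys left to right and writes only to the first map.
    return ChainMap(writable, *reversed(image_layers))


if __name__ == "__main__":
    first = start_container(base_layer, app_layer)
    second = start_container(base_layer, app_layer)

    first["/tmp/scratch"] = "only in the first container"
    print("/tmp/scratch" in second)   # the second container is unaffected
    print(first["/app/config"])       # the upper image layer wins
    print(dict(app_layer))            # the image itself is unchanged
```

Many containers can therefore share one image, and whatever a container writes stays in that container unless it is placed in a volume.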

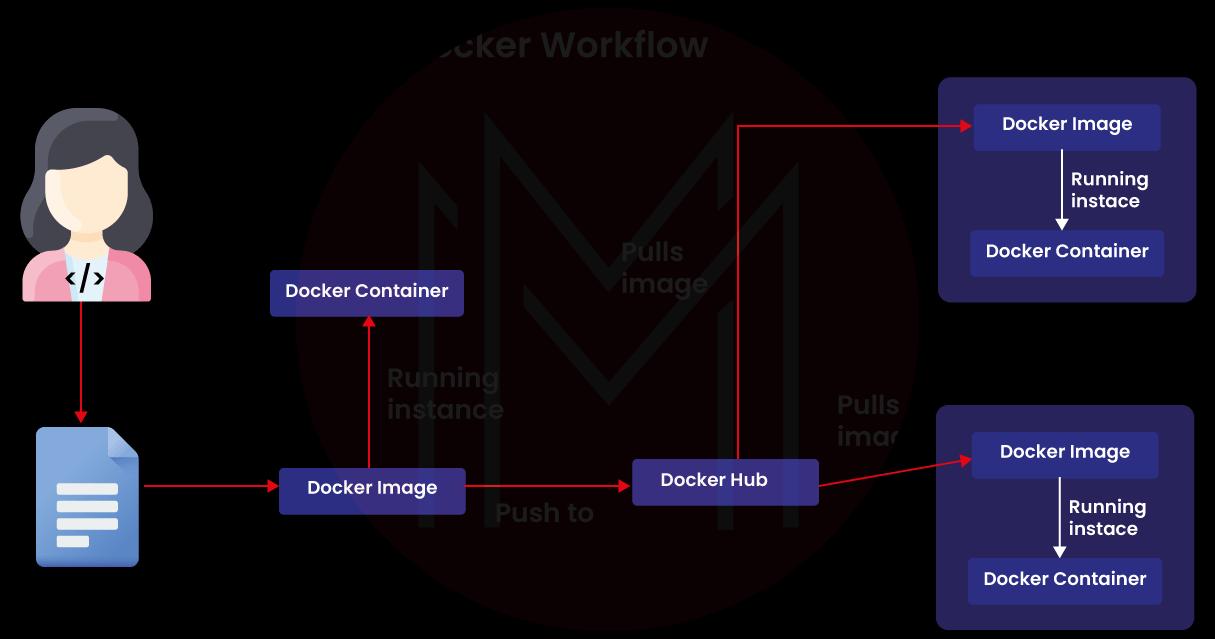

Docker's Workflow

In Docker's workflow, applications are built and put into portable containers that can be used in different environments. Developers can use Docker to set up a consistent development environment, test applications in isolation, and easily send them to production. The Docker workflow also includes tools like Docker Swarm and Docker Compose for managing containers.

Docker's workflow can be divided into the following steps

- Define the application: Developers describe the application and its dependencies in a Dockerfile, a text file with instructions for building a Docker image.

- Build the Docker image: Docker images are small, self-contained, executable packages containing the application and its dependencies; they are built from the Dockerfile.

- Store the Docker image: The Docker image is published to a registry, such as Docker Hub or a private registry, where others can easily access it.

- Run the Docker container: Docker containers, which are created from Docker images, can be started, stopped, and controlled independently of one another. Each container is self-contained, meaning it uses its own set of files, networks, and other resources.

- Test the application: Developers can test the application inside the Docker container to confirm that it functions correctly and meets their requirements.

- Deploy the application: Once the application has passed testing and is ready for release, it can be deployed to a cloud service, an on-premises data center, or a hybrid cloud.

- Monitor and manage the application: The Docker ecosystem includes management and orchestration tools, such as Docker Swarm and Kubernetes, that make it simpler for developers to scale and manage their applications in production.
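The first few steps of this workflow can be sketched in Python as follows. The script writes a minimal Dockerfile and a one-line application into a temporary build context, then lists the build, push, and run commands in order. The Dockerfile contents, the image name `registry.example.com/team/hello:1.0`, and the helper names are assumptions for illustration, and the commands are printed rather than executed.

```python
import tempfile
from pathlib import Path

# A minimal Dockerfile for a one-file Python app (illustrative).
DOCKERFILE = """\
FROM python:3.12-slim
WORKDIR /app
COPY app.py .
CMD ["python", "app.py"]
"""


def write_build_context(directory):
    """Step 1: define the application (Dockerfile plus source file)."""
    context = Path(directory)
    (context / "Dockerfile").write_text(DOCKERFILE)
    (context / "app.py").write_text('print("hello from a container")\n')
    return context


def workflow_commands(context_dir, image):
    """Steps 2 to 4: build the image, store it in a registry, run it."""
    return [
        ["docker", "build", "-t", image, str(context_dir)],
        ["docker", "push", image],
        ["docker", "run", "--rm", image],
    ]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        context = write_build_context(tmp)
        # Hypothetical image name on a placeholder private registry.
        for argv in workflow_commands(context,
                                      "registry.example.com/team/hello:1.0"):
            print("$", " ".join(argv))
```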

Overall, Docker's workflow provides great flexibility and universality, allowing developers to rapidly and easily build, test, and deploy applications.

What are the Special Features of the Docker Platform?

Some special features of the Docker platform are:

- Because containers are isolated from one another, you can run many of them on the same host at the same time.

- Containers are lightweight: they do not need a hypervisor and run directly on the host machine's kernel.

- Docker provides tools and a platform to manage the software development lifecycle using Docker containers.

- A centralized server can be used to distribute and test the software.

- The application can be put into production on your own servers, at a regional data center with a cloud service provider, or on a much larger scale.

- Containers built from different images can run side by side on a single host, limited only by the hardware resources of that host.

- Docker containers can also run inside host machines that are themselves virtual machines.


What is the importance of Docker Container Software for Developers?

Docker is a containerization platform that gives programmers a safe space to code, package, and release software. Docker containers are a lightweight and portable replacement for conventional virtual machines that ensures application compatibility regardless of the host system.

Docker Container Software is important because it can run applications in a consistent and separate environment. This makes it easier for developers to build, test, and deploy their apps.

The Docker Container Software is important for the following reasons

- Portability: It is easy to migrate apps between development, testing, and production environments since Docker containers can operate on any machine with Docker installed.

- Improved Collaboration: Docker registries give teams a common place to publish and share container images, which makes it easier for developers to collaborate and hand over finished work.

- Consistent Environment: Docker lets developers package their apps, dependencies, and configuration files. This makes sure that the apps run the same way in all environments.

- Compatibility: Docker containers are portable, meaning they can run on many OSes, simplifying cross-platform program development and deployment.

- Faster Development Cycles: Docker allows programmers to construct and test their programs in separate contexts, lowering the potential for conflicts and allowing for quick iteration cycles.

- Simplified Infrastructure Management: By providing a lightweight and versatile framework for deploying and scaling applications, Docker simplifies infrastructure management.

- Cost Savings: Docker's lightweight and efficient platform can save infrastructure expenses by allowing for more efficient use of resources and less need for dedicated hardware.

- DevOps Integration: Docker Container Software has become essential to modern DevOps practices because it facilitates better communication and collaboration between software engineers and IT operations staff.

Docker Architecture FAQs

1. What is Docker used for? Give an example.

Docker is primarily used for application containerization, enabling developers to design, package, and deploy their programs more efficiently and uniformly while simplifying infrastructure administration.

For example, a developer might use Docker to develop and deploy a containerized web application on a cloud platform.

2. When should we use Docker?

Docker should be used when applications need to run in a consistent, isolated environment. It reduces dependency conflicts between applications and the infrastructure they run on.

3. How do I start Docker Coding?

Here are the steps you can follow to get started with Docker coding

- Install Docker

- Learn Docker concepts

- Write Dockerfile

- Build Docker image

- Run Docker container

- Push Docker image

- Dockerize your application

4. Is Kubernetes a container or VM?

Neither. Kubernetes is a container orchestration platform that manages the deployment, scaling, and administration of containers.

5. Why is Docker used in DevOps?

Docker is used in DevOps because containers make creating a consistent environment across different infrastructures easy.

6. What is the difference between Docker and Container?

| Docker | Container |
|---|---|
| A containerization platform | An isolated environment |
| Provides tools for building, packaging, and deploying applications as containers | Provides a consistent runtime for an application and its dependencies |
| Uses images to build containers | Created from images |

7. How is Docker different from virtual machines?

Virtual machines (VMs) each run an entire guest operating system and need a lot of resources. Docker containers, on the other hand, share the host operating system's kernel and require fewer resources. This makes Docker containers lighter and easier to move around than VMs.

8. Is Docker Secure?

Yes, when it is configured properly. Docker provides more than one layer of security to keep containers isolated and safe.

9. What are the three types of Docker?

There is only one type of Docker: the Docker platform for containerization. However, the platform has been offered in three editions: Enterprise Edition (EE), Community Edition (CE), and Cloud.

10. Does Docker require coding?

Using Docker does not require writing code. However, creating Docker images and configuring Docker containers may require scripting and programming skills.

Conclusion

We hope this article gave you a better understanding of Docker's architecture and the important components that make it up. Within the DevOps ecosystem, Docker has emerged as a crucial tool for streamlining the SDLC and speeding up application delivery.


Vinod M is a Big Data expert writer at Mindmajix who contributes in-depth articles on various Big Data technologies. He also has experience writing about Docker, Hadoop, Microservices, Commvault, and a few BI tools. You can get in touch with him via LinkedIn and Twitter.