
Docker is a powerful tool that has gained immense popularity in recent years, and many IT companies are adopting it for their production environments. Without a clear understanding of what Docker is and what it does, it is hard to know how to use it in your own environment. In this article, we explain, as simply as possible, what Docker is and where it fits in a production environment.

Docker is an open-source containerization platform. It enables you to build lightweight, portable software containers that simplify application development, testing, and deployment. Before moving ahead with Docker, let's understand what containers are and why we use them.



What are Containers?

One goal of modern software development is to keep applications running on the same host or cluster isolated from one another. This is difficult to achieve because of the packages, libraries, and other software components they need to run. Containers are one solution to this problem: they keep application execution environments isolated from one another while sharing the underlying OS kernel. They provide an efficient, highly granular way to combine software components into the kinds of application and service stacks a modern enterprise needs, and to keep those components updated and maintained.

Containers offer the key benefits of virtual machines (VMs), including application isolation, disposability, and cost-effective scalability. Because their layer of abstraction sits at the OS level rather than at the hardware level, they also offer important additional advantages:

- Lighter weight

- Greater resource efficiency

- Better application development

- Improved developer productivity

We hope you now have a clear idea of what a container is.

What is Docker?

Docker is a tool designed to create, deploy, and run containers and container-based applications. It runs on Linux, Windows, and macOS. Docker lets developers package applications into containers that combine the application source code with all the libraries and dependencies needed to run that code in any environment. It makes running containers easier through simple commands and work-saving automation.

Why use Docker?

The benefits of using Docker are:

- Improved and seamless portability: Docker containers can run in any data center, desktop, or cloud environment without modification.

- Automated container creation: Docker can automatically build a container based on application source code.

- Container reuse: Existing containers can be used as base images (templates) for building new ones.

- Shared container libraries: Developers can access an open-source registry containing thousands of user-contributed containers.

- Container versioning: Docker can track the versions of a container image and roll back to previous versions.

- Fast and consistent delivery of applications: Docker streamlines the development lifecycle by letting developers work in standardized environments, using local containers that provide their applications and services. Containers are well suited to continuous integration and continuous delivery (CI/CD) workflows.

- Responsive deployment and scaling: Docker supports highly portable workloads, and its lightweight nature makes it easy to manage workloads dynamically, scaling applications and services up or down in near real time.

- Runs more workloads on the same hardware: Docker provides a viable, cost-effective alternative to hypervisor-based virtual machines, and it is well suited to high-density environments as well as small and medium deployments.

The Docker Platform:

The Docker platform lets you package and run workloads in a highly portable way, so distributed applications can cross the boundaries of individual servers. It also provides tooling to manage the lifecycle of your containers. With the Docker platform, you can:

- Develop your application and its supporting components using containers.

- Use the container as the unit for testing and distributing your application.

- Deploy your application into production, as a container or an orchestrated service.

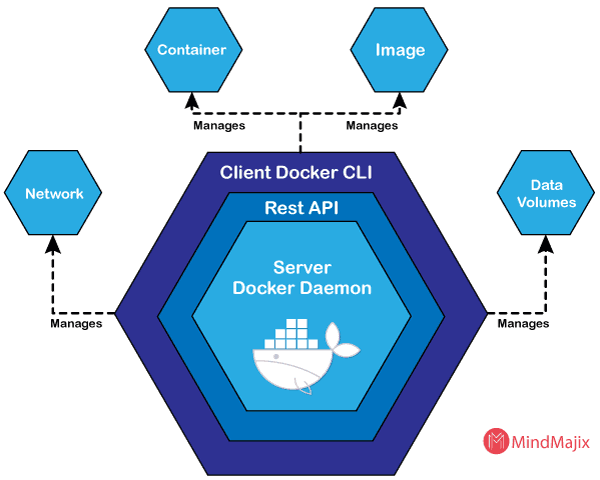

What is Docker Engine?

Docker Engine is the core of Docker. It uses a client-server architecture to create and run containers. It has been offered in two editions: Docker Engine - Community and Docker Engine - Enterprise (the Enterprise edition is now sold by Mirantis as Mirantis Container Runtime).

The major components of the Docker Engine are:

- A command-line interface (CLI) client (docker).

- A server, the Docker daemon (dockerd), which is a long-running program.

- A REST API that defines the interfaces programs can use to talk to the daemon and instruct it what to do.

The CLI uses the Docker REST API to control or interact with the Docker daemon, either through direct CLI commands or through scripting.
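To make the client-daemon relationship concrete, here is a minimal Python sketch of what travels over the wire when a client talks to the daemon's REST API. It assumes the Linux default socket path /var/run/docker.sock and uses a real Engine API endpoint, GET /containers/json (the call behind docker ps); the helper names build_request and send are hypothetical, and any version prefix you pass (for example v1.43) must be one your daemon supports.

```python
import json
import socket
from typing import Optional

# Assumption: the default Docker socket on Linux; Docker Desktop and rootless setups differ.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(method: str, path: str, body: Optional[dict] = None,
                  api_version: Optional[str] = None) -> bytes:
    """Serialize an HTTP/1.1 request for the Docker Engine REST API (hypothetical helper)."""
    prefix = f"/{api_version}" if api_version else ""  # e.g. "/v1.43"; omit to use the daemon's default
    payload = json.dumps(body).encode() if body is not None else b""
    headers = [
        f"{method} {prefix}{path} HTTP/1.1",
        "Host: localhost",  # HTTP/1.1 requires a Host header; it routes nothing on a Unix socket
        "Connection: close",  # ask the daemon to close the connection after replying
        f"Content-Length: {len(payload)}",
    ]
    if body is not None:
        headers.append("Content-Type: application/json")
    return ("\r\n".join(headers) + "\r\n\r\n").encode() + payload


def send(request: bytes, socket_path: str = DOCKER_SOCKET) -> bytes:
    """Send a raw request to the daemon over its Unix socket and return the raw HTTP response."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(request)
        chunks = []
        while chunk := sock.recv(4096):  # read until the daemon closes the connection
            chunks.append(chunk)
    return b"".join(chunks)
```

On a Linux host where your user may access the socket, `print(send(build_request("GET", "/containers/json")).decode())` prints the raw HTTP response, headers included, whose JSON body lists the running containers.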

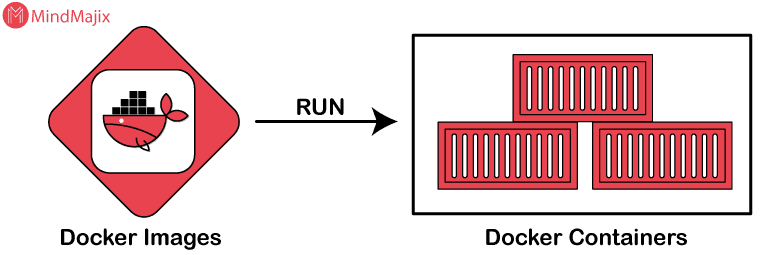

What is a Docker image?

A Docker image is a read-only template with instructions for creating a Docker container. Images are created with the build command, and containers are created from an image with the run command. Docker lets you create and share software through images: you can either build a new image to suit your requirements or use a ready-made image from Docker Hub.

What is a Docker container?

Containers are runnable instances of images, that is, the ready-to-run applications created from Docker images. You can create or delete a container through the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state. By default, containers are isolated from each other and from their host machine.
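One way to picture the image/container relationship is the simplified Python model below: an image is an ordered stack of read-only layers, and each container adds its own writable layer on top, so changes made inside one container never alter the image or other containers started from it. This is a conceptual sketch only; the Image and Container classes are hypothetical, and real Docker implements the idea with storage drivers such as overlay2, which also handle file deletions that this model ignores.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Hypothetical representation: a layer maps file paths to file contents.
Layer = Dict[str, str]


@dataclass(frozen=True)
class Image:
    """Read-only template: layers are ordered from the base upward."""
    layers: Tuple[Layer, ...]

    def read(self, path: str) -> str:
        # Upper layers shadow lower ones, so search from the top down.
        for layer in reversed(self.layers):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)


@dataclass
class Container:
    """A runnable instance: the shared image plus a private writable layer."""
    image: Image
    writable: Layer = field(default_factory=dict)

    def read(self, path: str) -> str:
        if path in self.writable:
            return self.writable[path]
        return self.image.read(path)

    def write(self, path: str, content: str) -> None:
        # Copy-on-write: changes go to this container's own layer, never into the image.
        self.writable[path] = content
```

Two containers built from the same Image share its layers, yet a write in one is invisible to the other, which mirrors the isolation described above.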

What is a Docker registry?

A Docker registry is where Docker images are stored. Docker Hub is a public registry that anyone can use, and Docker is configured to look for images on Docker Hub by default. You can also run your own private registry. If you use Docker Datacenter (DDC), it includes Docker Trusted Registry (DTR).

When you use the docker pull or docker run commands, the required images are pulled from your configured registry. When you use the docker push command, your image is pushed to your configured registry. A registry can be public or private (local), and it lets multiple users collaborate in building an application.
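When a command such as docker pull names an image, Docker fills in any missing parts of the reference: Docker Hub (docker.io) is the default registry, official images live under the library/ namespace, and the tag defaults to latest, so docker pull nginx fetches docker.io/library/nginx:latest. The Python sketch below reproduces those defaults; the ImageRef type and parse_image_ref function are hypothetical, and the sketch skips the validation and edge cases handled by Docker's real reference parser.

```python
from typing import NamedTuple, Optional

DEFAULT_REGISTRY = "docker.io"       # Docker Hub
LEGACY_REGISTRY = "index.docker.io"  # older alias for Docker Hub


class ImageRef(NamedTuple):
    registry: str
    repository: str
    tag: Optional[str]
    digest: Optional[str]

    def __str__(self) -> str:
        text = f"{self.registry}/{self.repository}"
        if self.tag:
            text += f":{self.tag}"
        if self.digest:
            text += f"@{self.digest}"
        return text


def parse_image_ref(ref: str) -> ImageRef:
    """Fill in Docker's default registry, namespace, and tag (simplified; no validation)."""
    name, _, digest = ref.partition("@")
    # A tag is a ':' that appears after the last '/', so "localhost:5000/app" has no tag.
    tag = None
    colon = name.rfind(":")
    if colon > name.rfind("/"):
        name, tag = name[:colon], name[colon + 1:]
    # The first path component is a registry host only if it looks like one.
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, repository = first, rest
    else:
        registry, repository = DEFAULT_REGISTRY, name
    if registry == LEGACY_REGISTRY:
        registry = DEFAULT_REGISTRY
    # Official images on Docker Hub live under the "library/" namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = "library/" + repository
    # Without an explicit tag or digest, Docker uses "latest".
    if tag is None and not digest:
        tag = "latest"
    return ImageRef(registry, repository, tag, digest or None)
```

For example, `str(parse_image_ref("localhost:5000/myapp"))` gives `localhost:5000/myapp:latest`, which is how an image in a private registry running on your own machine is addressed.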

How Does Docker Work?

Let's understand how Docker works by taking a closer look at its architecture.

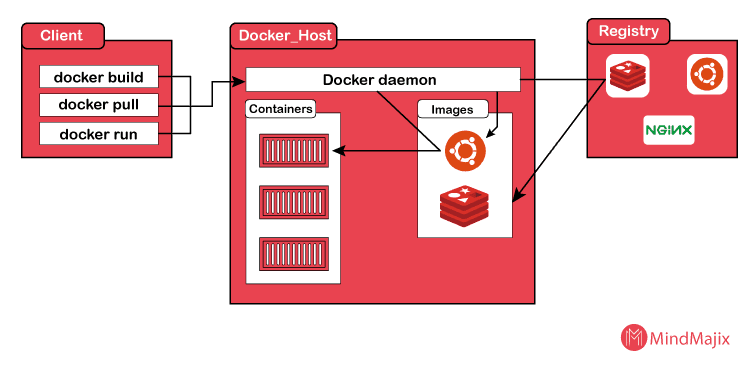

Docker uses a client-server architecture made up of the Docker client, the Docker host, and the Docker registry. The Docker client is used to issue Docker commands, the Docker host runs the Docker daemon, and the Docker registry stores Docker images.

The Docker client communicates with the Docker daemon through a REST API, and the daemon does the work of building, running, and distributing Docker containers. The client and daemon can run on the same system, or a client can connect to a remote daemon.

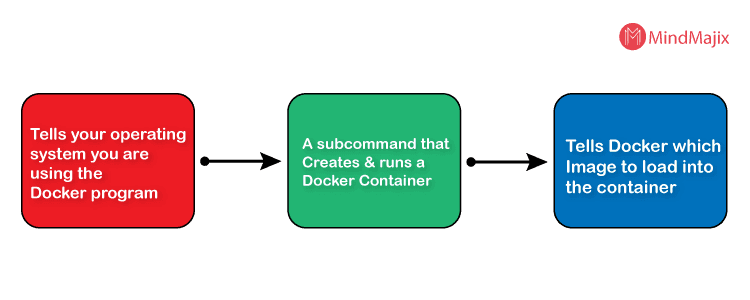

- We use the client (CLI) to issue a build command to the Docker daemon. Based on our inputs, the daemon builds an image and stores it locally; the image can then be pushed to a registry, which can be a private repository or Docker Hub.

- If you don't want to build an image yourself, you can pull an image that another user has built from Docker Hub.

- Finally, to create a running instance of an image, issue a run command from the CLI, which creates a Docker container.
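Each step above is a request from the client to the daemon. The Python sketch below maps these CLI commands, plus docker push, to the Docker Engine API endpoints they roughly correspond to: POST /build, POST /images/create, POST /containers/create followed by POST /containers/{id}/start, and POST /images/{name}/push. It is a simplified, assumed mapping: the real docker run may also pull a missing image, attach to the container's output, and send many more options, and the api_calls helper is hypothetical.

```python
from urllib.parse import urlencode


def api_calls(command: str, image: str, tag: str = "latest") -> list:
    """Return the (method, path) Engine API calls a CLI command roughly maps to (simplified)."""
    if command == "build":
        # The request body is a tar archive of the build context, including the Dockerfile.
        return [("POST", "/build?" + urlencode({"t": f"{image}:{tag}"}))]
    if command == "pull":
        return [("POST", "/images/create?" + urlencode({"fromImage": image, "tag": tag}))]
    if command == "run":
        # Create sends a JSON body such as {"Image": "nginx:latest"}; the daemon replies with
        # the new container's ID, which replaces the {id} placeholder in the start call.
        return [("POST", "/containers/create"), ("POST", "/containers/{id}/start")]
    if command == "push":
        # Real pushes also send registry credentials in an X-Registry-Auth header.
        return [("POST", f"/images/{image}/push?" + urlencode({"tag": tag}))]
    raise ValueError(f"command not covered by this sketch: {command}")
```

For example, `api_calls("pull", "nginx")` returns `[("POST", "/images/create?fromImage=nginx&tag=latest")]`, the request behind docker pull nginx.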

Let's now look in more detail at the key terms involved in creating containerized applications with Docker: the Docker daemon, the client, and Docker objects.

Docker daemon:

The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes. A daemon can also communicate with other daemons to manage Docker services.

Docker client:

The Docker client (docker) is the primary way that many Docker users interact with Docker. When you use a command such as docker run, the client sends it to dockerd through the Docker API, and dockerd carries it out. A Docker client can communicate with more than one daemon.
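A client picks which daemon to talk to from its configuration. One common mechanism is the DOCKER_HOST environment variable, which the docker CLI honors; when it is unset, the CLI on Linux uses unix:///var/run/docker.sock. The Python sketch below shows how such an address might be resolved; resolve_daemon is a hypothetical helper that handles only the unix, tcp, and ssh schemes and ignores the CLI's other options such as the -H flag and docker context.

```python
import os
from typing import Mapping, Optional, Tuple
from urllib.parse import urlparse

# Linux default; Docker Desktop on macOS/Windows and rootless mode use other addresses.
DEFAULT_HOST = "unix:///var/run/docker.sock"


def resolve_daemon(env: Optional[Mapping[str, str]] = None) -> Tuple[str, str]:
    """Return (transport, address) for the daemon named by DOCKER_HOST (hypothetical helper)."""
    env = os.environ if env is None else env
    host = env.get("DOCKER_HOST") or DEFAULT_HOST
    parsed = urlparse(host)
    if parsed.scheme == "unix":
        return "unix", parsed.path  # path to the daemon's socket file
    if parsed.scheme in ("tcp", "ssh"):
        return parsed.scheme, parsed.netloc  # host:port, or user@host for SSH
    raise ValueError(f"scheme not handled by this sketch: {host}")
```

Switching daemons is then a matter of changing one variable: in a shell, `DOCKER_HOST=ssh://admin@build-box docker ps` would point the CLI at a remote daemon over SSH (the host name here is made up).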

Docker objects:

Docker images, containers, networks, volumes, plugins, and other items are all Docker objects.

What is Docker deployment and orchestration?

If you are running only a few containers, it's easy to manage the application on Docker Engine itself. But when a deployment includes many containers and services, it becomes difficult to manage without purpose-built tools such as the following.

Docker Compose

Docker Compose simplifies the process of developing and testing multi-container applications. You describe the services that make up the application in a YAML file, and Compose can then deploy and run all of those containers with a single command. In other words, it is a command-line tool that takes a specially formatted descriptor file, assembles an application out of multiple containers, and runs them in concert on a single host. You can also use Docker Compose to define persistent storage volumes, specify base nodes, and configure service dependencies.

More advanced versions of these behaviors, known as container orchestration, are offered by other products such as Kubernetes and Docker Swarm.
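Service dependencies are one place where Compose saves manual work: with depends_on, it starts a service's dependencies before the service itself. The Python sketch below computes a valid start order for a hypothetical four-service application using the standard library's graphlib (Python 3.9+); the service names and images are made up. Note that by default Compose waits only for a dependency's container to start, not for the service inside it to be ready, unless you add a condition such as service_healthy.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical application, equivalent to this compose.yaml:
#   services:
#     web:   {image: "example/web:1.0", depends_on: [api]}
#     api:   {image: "example/api:1.0", depends_on: [db, cache]}
#     db:    {image: "postgres:16"}
#     cache: {image: "redis:7"}
SERVICES = {
    "web": {"image": "example/web:1.0", "depends_on": ["api"]},
    "api": {"image": "example/api:1.0", "depends_on": ["db", "cache"]},
    "db": {"image": "postgres:16"},
    "cache": {"image": "redis:7"},
}


def start_order(services: dict) -> list:
    """Return service names so that every dependency comes before its dependents."""
    # depends_on may be a list or (in the long syntax) a mapping; set() keeps the names either way.
    graph = {name: set(spec.get("depends_on", [])) for name, spec in services.items()}
    # static_order() raises CycleError if services depend on each other in a loop.
    return list(TopologicalSorter(graph).static_order())
```

Any order the function returns starts db and cache before api, and api before web; services with no dependency between them (here db and cache) may start in either order.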

Docker swarm

Docker includes its own orchestration tool, Docker Swarm, which lets you manage multiple containers deployed across several host machines. A major benefit of using it is the high level of availability it provides for applications.
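To see how spreading containers across hosts supports availability, the Python sketch below models a much-simplified scheduler: each replica of a service goes to the node currently running the fewest tasks, and when a node fails, its tasks are rescheduled on the surviving nodes. The node names and the place and fail_node helpers are hypothetical; the real Swarm scheduler also weighs resource reservations, placement constraints, and placement preferences.

```python
from typing import Dict, List


def place(replicas: int, nodes: List[str]) -> Dict[str, int]:
    """Spread a service's replicas: each goes to the node with the fewest tasks so far."""
    tasks = {node: 0 for node in nodes}
    for _ in range(replicas):
        target = min(nodes, key=lambda node: tasks[node])  # ties go to the earlier node
        tasks[target] += 1
    return tasks


def fail_node(tasks: Dict[str, int], dead: str) -> Dict[str, int]:
    """Reschedule a failed node's tasks onto the surviving nodes, keeping the spread even."""
    survivors = {node: count for node, count in tasks.items() if node != dead}
    for _ in range(tasks[dead]):
        target = min(survivors, key=survivors.get)
        survivors[target] += 1
    return survivors
```

Placing five replicas on three nodes gives a 2/2/1 split; if the first node fails, its two replicas are restarted on the other two, so the service keeps running all five.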

Kubernetes

To manage container lifecycles in complex environments, you need a container orchestration tool. Kubernetes automates tasks integral to managing container-based architectures, including container deployment, updates, service discovery, load balancing, storage provisioning, and more. Many developers choose Kubernetes as their container orchestration tool.
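Much of that automation rests on one idea: you declare a desired state (for example, three replicas of a service), and controllers keep comparing it with the actual state and act to close the gap. The Python sketch below shows that reconciliation loop in its simplest form; the pod_names, reconcile, and apply helpers and the pod names are hypothetical, and real Kubernetes controllers work through the API server and use more careful rules for choosing which pods to remove.

```python
import itertools
from typing import Dict, Iterator, List


def pod_names(prefix: str) -> Iterator[str]:
    """Generate hypothetical pod names: prefix-0, prefix-1, ..."""
    return (f"{prefix}-{i}" for i in itertools.count())


def reconcile(desired: int, running: List[str]) -> Dict:
    """Compare desired and actual state and return the actions needed to converge."""
    return {
        "create": max(desired - len(running), 0),  # missing replicas
        "delete": running[desired:],  # surplus replicas (empty if none)
    }


def apply(running: List[str], actions: Dict, names: Iterator[str]) -> List[str]:
    """Carry out the actions and return the new actual state."""
    kept = [pod for pod in running if pod not in actions["delete"]]
    return kept + [next(names) for _ in range(actions["create"])]
```

Calling `apply(pods, reconcile(3, pods), names)` repeatedly converges on three pods: after a pod crashes, the next pass creates one replacement, and lowering the desired count to 1 deletes the surplus.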


Conclusion:

In short, Docker makes it easier to develop containerized applications, lets you run more applications on the same hardware than other technologies do, and makes applications simpler to manage and deploy. We hope the concepts we covered on Docker and its usage were helpful to you.

If you have any interesting questions about Docker, post a comment below; we would love to help.


Vinod M is a Big Data expert writer at Mindmajix and contributes in-depth articles on various Big Data technologies. He also has experience writing about Docker, Hadoop, Microservices, Commvault, and a few BI tools. You can get in touch with him via LinkedIn and Twitter.