Azure Data Factory (ADF) Integration Runtime


An integration runtime (IR) is the compute infrastructure that Azure Data Factory uses to provide its data integration capabilities: data flows, data movement, activity dispatch, and SSIS package execution.

Data Factory offers three types of integration runtime: Azure, self-hosted, and Azure-SSIS. Microsoft manages the Azure and Azure-SSIS integration runtimes, while users install and manage the self-hosted integration runtime themselves.

This blog covers the basics of Azure Data Factory, the integration runtime and its types, the step-by-step procedure to create an Azure Data Factory instance and set up an integration runtime, and more. Let's go over them!

Azure Data Factory Integration Runtime - Table of Contents

- What is Azure Data Factory?

- What is Azure Data Factory Integration Runtime?

- Types of ADF Integration Runtime

- Key Points of ADF Integration Runtime

- Steps To Create ADF Integration Runtime

- FAQs

What is Azure Data Factory (ADF)?

Let's understand Azure Data Factory first. Azure Data Factory is a platform for performing various operations on large amounts of data. An example makes this clearer: suppose an e-commerce company generates an enormous amount of data every day, such as the number of orders placed, bookmarks, and delivery status. The company wants to extract insights from this data and automate the process so that it receives the insights and reports daily.

As a cloud-based Extract-Transform-Load (ETL) tool, ADF lets you build workflows that move, transform, and monitor data at scale. These workflows are called 'pipelines'. Users can also turn the processed data into visuals using the various tools available. With ADF's help, we can organize raw and complex data and extract meaningful insights.

It provides Integration Runtimes, which are responsible for executing data integration activities. Azure Data Factory supports data migrations and allows you to create and run data pipelines on a specified schedule.
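To make the extract-transform-load idea concrete, here is a minimal sketch in plain Python for the e-commerce example above. It does not use any ADF API: the sample records, the function names, and the in-memory "sink" are all hypothetical and only illustrate the three stages a pipeline run goes through.

```python
from collections import Counter

# Hypothetical raw order events, standing in for one day of source data.
RAW_ORDERS = [
    {"order_id": 1, "status": "delivered", "amount": "25.50"},
    {"order_id": 2, "status": "shipped", "amount": "10.00"},
    {"order_id": 3, "status": "delivered", "amount": "4.50"},
]


def extract():
    """Extract: return the raw records (a real pipeline would read a data store)."""
    return list(RAW_ORDERS)


def transform(records):
    """Transform: convert amounts to numbers and summarise the day."""
    amounts = [float(record["amount"]) for record in records]
    return {
        "order_count": len(records),
        "total_amount": sum(amounts),
        "orders_by_status": dict(Counter(r["status"] for r in records)),
    }


def load(report, sink):
    """Load: append the report to the sink (here, just a Python list)."""
    sink.append(report)
    return sink


def run_pipeline(sink):
    """Run the three stages in order, as one pipeline run would."""
    return load(transform(extract()), sink)


daily_reports = run_pipeline([])
print(daily_reports[0])
```

In ADF, each stage would be an activity inside a pipeline, and a trigger would start the run every day instead of a manual call.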


What is Azure Data Factory Integration Runtime?

Now, moving on to the ADF Integration Runtime: it is the component of ADF that connects data sources and destinations. It is also used to manage data movement into and out of the Azure ecosystem. It acts as an intermediary that provides the infrastructure, the connectivity between networks, and the compute capacity needed to connect ADF with other data stores and services.

Types of ADF Integration Runtime

1. Azure Integration Runtime (Auto Resolve)

The Azure Integration Runtime is fully managed and scales automatically, so moving data between Azure and non-Azure data stores needs no infrastructure work on your side. Traffic from the integration runtime to the data store travels over the public network. Azure uses a range of static public IP addresses for this runtime, and you can restrict the data store's firewall so that only these addresses are allowed to reach it. This type of integration runtime requires no additional configuration when you create pipelines, and it scales up or down dynamically according to the integration tasks.
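The IP allow-listing idea can be sketched with Python's standard `ipaddress` module. This is only an illustration of the check a data store firewall performs: the CIDR ranges below are reserved documentation ranges chosen as placeholders, not real Azure Integration Runtime addresses, and `is_allowed` is a hypothetical helper.

```python
import ipaddress

# Hypothetical allow-list. These are reserved documentation ranges,
# NOT real Azure Integration Runtime addresses.
ALLOWED_RANGES = ["203.0.113.0/24", "198.51.100.16/28"]


def is_allowed(source_ip, allowed_ranges=ALLOWED_RANGES):
    """Return True if source_ip falls inside any allow-listed CIDR range."""
    address = ipaddress.ip_address(source_ip)
    return any(address in ipaddress.ip_network(cidr) for cidr in allowed_ranges)


for candidate in ["203.0.113.42", "198.51.100.40"]:
    print(candidate, is_allowed(candidate))
```

In practice you would take the ranges for your region from Microsoft's published list and configure them in the data store's own firewall settings.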

2. Self-Hosted Integration Runtime

Choose the self-hosted integration runtime when your data lives in a separate environment, such as an on-premises or private network, and must stay isolated from other environments. Because the runtime runs inside that environment, data from different environments does not interfere with each other. It lets you transfer data securely from the local environment to the Azure cloud. You can install it on a virtual machine or on an on-premises system.

3. Azure SSIS Integration Runtime

SSIS stands for SQL Server Integration Services. This runtime runs SSIS packages in Azure Data Factory, so you can shift existing SSIS workloads to the cloud quickly. It gives the infrastructure flexibility and scalability for data integration and ETL workflows. It also offers features such as cloud-enabled workloads, integration with Azure services, logging and monitoring, and security compliance.

Moreover, this runtime simplifies infrastructure management so that users can focus on creating and running SSIS packages. Users can also control costs by starting and stopping the runtime as needed.
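The cost effect of starting and stopping the runtime is simple arithmetic. The sketch below assumes a flat hourly rate of 1.0 currency unit, which is a placeholder and not an actual Azure price; `monthly_runtime_cost` is a hypothetical helper.

```python
def monthly_runtime_cost(hours_per_day, days_per_month, hourly_rate):
    """Cost of keeping the runtime started for the given hours each day."""
    return hours_per_day * days_per_month * hourly_rate


# Placeholder rate of 1.0 per hour, NOT a real Azure price.
always_on = monthly_runtime_cost(24, 30, 1.0)
scheduled = monthly_runtime_cost(4, 30, 1.0)

print("always on:", always_on)
print("4 hours a day:", scheduled)
print("difference:", always_on - scheduled)
```

Under these assumptions, a runtime that is started only for a four-hour nightly window is billed for one sixth of the hours of one that is left running.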

Key Points of Azure Data Factory Integration Runtime

As you know, the Azure Data Factory Integration Runtime manages data movement and transformation activities. Let's go through a few of its key points.

- Data Movement: It simplifies data movement from many sources to their destinations. It supports connections to both cloud-based and on-premises data sources, such as Azure Blob Storage, Azure SQL Database, Oracle, and SQL Server.

- Connectivity: Connections to the various data sources are made over protocols such as REST, HTTPS, and OData.

- Data Transformation: Azure Data Factory provides data mapping and transformation capabilities. They let you modify the structure, format, and content of data before you load it into the target data store.

- Monitoring: The Azure Data Factory IR provides monitoring and logging capabilities. They help you track data movement activities and troubleshoot potential issues.

- Security: Azure Data Factory uses encryption and authentication mechanisms to secure data transfer.

Steps To Create Azure Data Factory Integration Runtime

Following is the step-by-step procedure to create Azure Data Factory Integration Runtime:

Step 1: Create an Azure Data Factory Instance:

1.1. First, sign in to the Azure portal at https://portal.azure.com.

1.2. Next, click "Create a resource" in the portal's upper-left corner.

1.3. Now, search for "Data Factory" and select it from the results.

1.4. Lastly, click the "Create" button to start the creation process, and fill in the required details to create an Azure Data Factory instance.

Step 2: Create Linked Services:

Linked Services define the connection credentials and the other information required to reach each data store. In this walkthrough, create the Linked Services before setting up the Integration Runtime (IR).

2.1. First, navigate to your Azure Data Factory instance in the Azure portal.

2.2. Next, click the "Author" button in the left-hand side menu.

2.3. Then, click the "Manage" button at the top.

2.4. Click "Linked services" in the left-hand side menu.

2.5. Click the "+ New" button to create a new Linked Service.

2.6. Now, choose the type of data store you want to connect to, for example Azure Blob Storage or Azure SQL Database. Then, follow the prompts to provide the connection details.

2.7. Lastly, repeat the process to create Linked Services for all the data sources and destinations.
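Behind the portal forms, a Linked Service is stored as a JSON definition. The sketch below builds one as a Python dictionary. The overall shape (a `type`, `typeProperties`, and an optional `connectVia` reference to an integration runtime) follows the ADF JSON format as I understand it, so verify it against the official documentation before relying on it. The names `OrdersBlobStorage` and `MySelfHostedIR`, the placeholder connection string, and the helper function are all hypothetical.

```python
import json


def blob_linked_service(name, connection_string, integration_runtime=None):
    """Build a Linked Service definition for Azure Blob Storage as a dict."""
    definition = {
        "name": name,
        "properties": {
            "type": "AzureBlobStorage",
            "typeProperties": {"connectionString": connection_string},
        },
    }
    # Optionally point the Linked Service at a specific integration runtime.
    if integration_runtime is not None:
        definition["properties"]["connectVia"] = {
            "referenceName": integration_runtime,
            "type": "IntegrationRuntimeReference",
        }
    return definition


linked_service = blob_linked_service(
    name="OrdersBlobStorage",                   # hypothetical name
    connection_string="<connection-string>",    # placeholder, never hard-code secrets
    integration_runtime="MySelfHostedIR",       # hypothetical IR name
)
print(json.dumps(linked_service, indent=2))
```

Keeping the runtime reference optional mirrors the portal, where a Linked Service uses the default Azure IR unless you pick another one.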

Step 3: Set Up Integration Runtime:

Once you have created the Linked Services, you can set up the Azure Data Factory Integration Runtime with the following steps:

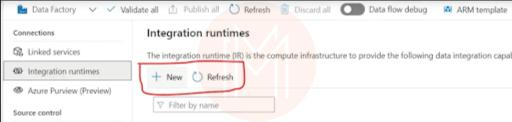

3.1. First, navigate to the Manage tab on the Azure Data Factory home page.

3.2. Then, select "Integration runtimes".

3.3. Select the "+ New" option.

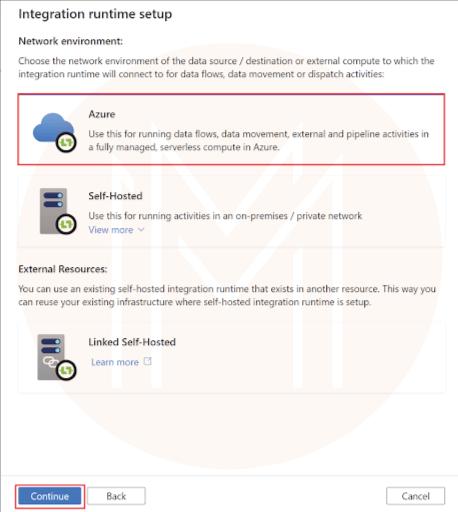

3.4. You will see a few options. Select "Azure, Self-Hosted" and then choose Continue.

3.5. After this, select "Azure" and click Continue.

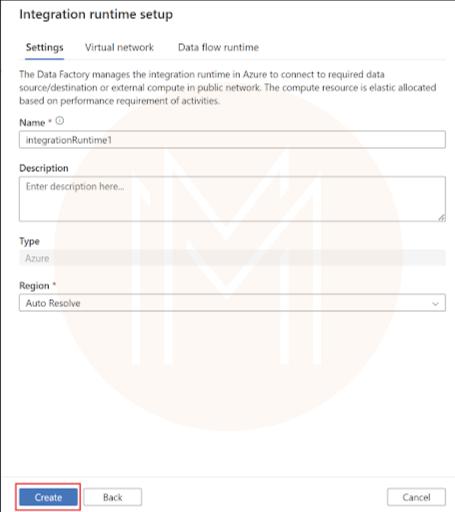

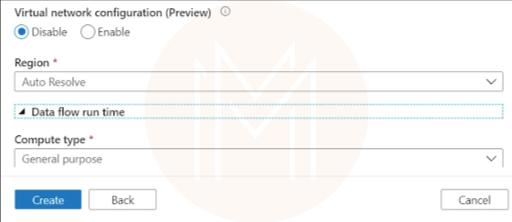

3.6. Provide a name for your Azure IR, and click Create.

3.7. You will see a popup asking you to select a region. With "Auto Resolve", the IR region is chosen automatically based on the sink region.

3.8. Check that the newly created IR appears on the Integration Runtimes page.
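The integration runtime created in the steps above also ends up as a small JSON definition. Here is a sketch of it as a Python dictionary, assuming the Azure IR is represented with type `Managed` and a `computeProperties.location` of `AutoResolve`, which matches the ADF JSON format as I understand it. The name `MyAzureIR` and the helper function are hypothetical.

```python
import json


def azure_ir_definition(name, location="AutoResolve"):
    """Build an Azure integration runtime definition as a dict.

    "AutoResolve" lets the service pick the region; pass a region name
    instead to pin the runtime to one location.
    """
    return {
        "name": name,
        "properties": {
            "type": "Managed",
            "typeProperties": {
                "computeProperties": {"location": location},
            },
        },
    }


azure_ir = azure_ir_definition("MyAzureIR")  # hypothetical name
print(json.dumps(azure_ir, indent=2))
```

Pinning the location can be useful when data must stay in one region; leaving it on Auto Resolve is the simpler default.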


Azure Data Factory Integration Runtime FAQs

1. What data factory version can I use to create data flows?

You can use Data Factory version 2 to create data flows.

2. Is there any limit for integration runtime instances in a data factory?

No. There is no limit on the number of integration runtime instances in a data factory.

3. What do you mean by Azure data factory?

With Azure Data Factory, you can build data-driven workflows in the cloud. You can also manage and automate data movement operations.

4. Name a few major components used in Azure data factory.

- Pipeline

- Activity

- Dataset

- Mapping data flow

- Linked service

- Trigger

- Control flow
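These components reference each other by name. As a rough sketch, the pipeline below contains one Copy activity whose inputs and outputs point at datasets. The structure is modelled on the ADF pipeline JSON format as I understand it, trimmed to the parts that show the references, and the names (`CopyOrdersPipeline`, `OrdersBlobDataset`, `OrdersSqlDataset`) and the helper function are hypothetical.

```python
# Hypothetical, trimmed pipeline definition with a single Copy activity.
pipeline = {
    "name": "CopyOrdersPipeline",
    "properties": {
        "activities": [
            {
                "name": "CopyOrders",
                "type": "Copy",
                "inputs": [
                    {"referenceName": "OrdersBlobDataset", "type": "DatasetReference"}
                ],
                "outputs": [
                    {"referenceName": "OrdersSqlDataset", "type": "DatasetReference"}
                ],
            }
        ]
    },
}


def referenced_datasets(pipeline_definition):
    """Collect the names of all datasets the pipeline's activities refer to."""
    names = set()
    for activity in pipeline_definition["properties"]["activities"]:
        for reference in activity.get("inputs", []) + activity.get("outputs", []):
            names.add(reference["referenceName"])
    return sorted(names)


print(referenced_datasets(pipeline))
```

Each dataset would in turn name the Linked Service it reads from or writes to, and a trigger would name the pipeline it starts.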

5. Are coding skills required to work with Azure data factory?

No. Coding skills are not required for Azure Data Factory. You can create workflows in Azure Data Factory without writing code.

6. Is Azure data factory an ETL tool?

Yes. Azure Data Factory is an ETL tool.

7. Is learning Azure data factory worthwhile?

Of course! There is a soaring demand for Azure Data Factory engineers across the world. Therefore, if you gain certification in Azure Data Factory, your chances of getting hired by top companies are high.

8. What triggers does Azure Data Factory support?

Azure Data Factory supports the following triggers:

- Schedule trigger

- Tumbling window trigger

- Event-based trigger
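A tumbling window is a series of fixed-size, non-overlapping, contiguous time intervals. The sketch below only computes such windows in plain Python to show the idea. It is not the ADF trigger itself, and the function name and sample dates are hypothetical.

```python
from datetime import datetime, timedelta


def tumbling_windows(start, end, size):
    """Split [start, end) into fixed-size, back-to-back windows.

    A trailing partial window that does not fit before `end` is dropped.
    """
    windows = []
    window_start = start
    while window_start + size <= end:
        windows.append((window_start, window_start + size))
        window_start += size
    return windows


windows = tumbling_windows(
    start=datetime(2024, 1, 1, 0, 0),
    end=datetime(2024, 1, 1, 6, 0),
    size=timedelta(hours=2),
)
for window_start, window_end in windows:
    print(window_start.isoformat(), "->", window_end.isoformat())
```

A schedule trigger, by contrast, simply fires at the configured wall-clock times, and an event-based trigger fires when something happens, such as a file arriving in storage.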

Conclusion

In this article, we learned about the Azure Data Factory Integration Runtime, its types, its key points, and more. We also saw how to set up an integration runtime. Now you are ready to set one up in Azure Data Factory on your own.

If you want to know more about the Azure Data Factory integration runtime, you can enroll in the MindMajix Azure Data Factory Course and get certified. It will help you improve your Azure Data Factory skills and elevate your career.


Viswanath is a passionate content writer at MindMajix. He has expertise in trending domains such as Data Science, Artificial Intelligence, Machine Learning, and Blockchain. His articles help learners gain insights into these domains. You can reach him on LinkedIn.