ETL refers to the process of moving data from one repository to another. Extract, Transform, and Load are the three database functions that work together to pull data from several sources and store it in a data warehouse. This blog explains what ETL is and how it works, from start to finish.

ETL - Table Of Contents

- What Is ETL?

- Why Is ETL Important?

- How does ETL Work?

- The Future of ETL

- Getting Started With ETL

- Data Blending Starter Kit

What Is ETL?

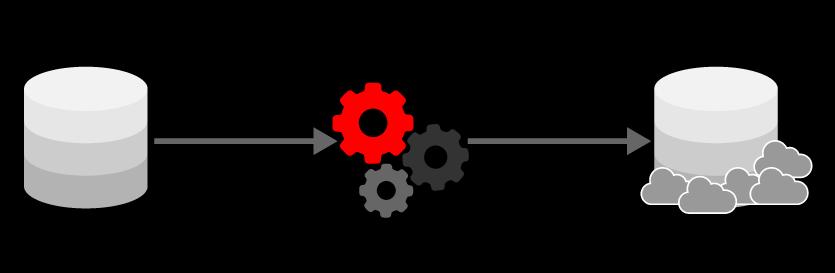

ETL is a data warehousing acronym that stands for Extract-Transform-Load. It refers to moving data from a source system to a data warehouse. A cleaning phase is now often included as a distinct step, which makes the sequence Extract-Clean-Transform-Load.

ETL was established as a procedure for integrating and loading data for computation and analysis as databases grew in popularity in the 1970s, and it eventually became the primary method for processing data in data warehousing initiatives.

Data analytics and machine learning workstreams are built on top of ETL. ETL tools have been around for more than two decades and are particularly useful for developing and automating sophisticated ETL procedures.

Companies frequently use ETL to:

- Extract data from legacy systems.

- Cleanse the data to improve the quality and consistency of the information.

- Load the data into a target database.
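The three uses listed above can be sketched as a tiny end-to-end pipeline. This is only an illustrative sketch: the `legacy_rows` sample data, the `customers` table, and the `extract`, `cleanse`, and `load` helper names are all assumptions made for the example, and an in-memory SQLite database stands in for the target database.

```python
import sqlite3

def extract(source_rows):
    """Extract: read raw records from the (here, in-memory) legacy source."""
    return list(source_rows)

def cleanse(rows):
    """Cleanse: trim whitespace, normalise case, drop rows without a key."""
    cleaned = []
    for row in rows:
        if row.get("id") is None:
            continue  # a record without a key cannot be loaded reliably
        cleaned.append({
            "id": int(row["id"]),
            "name": row.get("name", "").strip().title(),
            "country": row.get("country", "").strip().upper(),
        })
    return cleaned

def load(rows, conn):
    """Load: write the cleansed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers "
        "(id INTEGER PRIMARY KEY, name TEXT, country TEXT)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO customers (id, name, country) "
            "VALUES (:id, :name, :country)",
            rows,
        )

# Hypothetical legacy records with inconsistent formatting.
legacy_rows = [
    {"id": "1", "name": "  alice smith ", "country": "us"},
    {"id": None, "name": "orphan", "country": "de"},
    {"id": "2", "name": "BOB JONES", "country": " gb "},
]

target = sqlite3.connect(":memory:")
load(cleanse(extract(legacy_rows)), target)
loaded = target.execute(
    "SELECT id, name, country FROM customers ORDER BY id"
).fetchall()
print(loaded)
```

Keeping each stage as its own function mirrors the way the stages are described separately, and makes it easy to swap the in-memory source or target for a real system.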

ETL data integration:

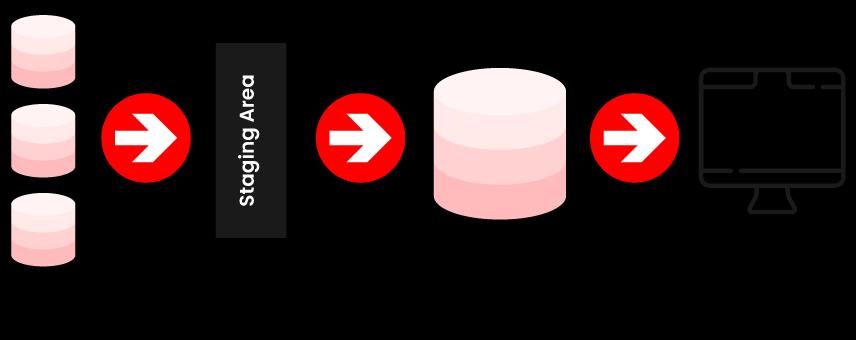

ETL is a type of data integration that refers to the three phases used to combine data from various sources. It is frequently used to build a data warehouse. During this process, data is taken from a source system, converted (transformed) into an analyzable format, and placed into a data warehouse or other system. ELT (Extract, Load, Transform) is a different but related approach that loads the data first and pushes the transformation work down to the target database for better performance. In today's commercial world, integrating data is increasingly common.

Linked systems can detect the updates made in neighboring databases. This may not be directly related to the application. Still, it can be highly beneficial because it can help provide new features and functionality to applications and new insights by bridging the gap between products.


Why Is ETL Important?

To establish an effective business intelligence architecture, data integration is essential. It's one of the vital components for combining data from various sources and organizing it in a shared place. Even a little piece of information can be a game-changer for any firm in today's world of neck-and-neck competition. As a result, a company must employ the appropriate procedures to integrate its pertinent data from various sources.

For many years, businesses have relied on the ETL process to obtain a consolidated data view to make better business decisions. This method of combining data from many systems and sources is still used today as part of a company's data integration toolkit.

- ETL is a technique for moving and transforming data from various sources and loading it into multiple targets, such as Hadoop.

- ETL gives extensive historical context when utilized with an enterprise data warehouse (data at rest).

- ETL makes it easier for business users to examine and report on data relevant to their objectives by offering a consolidated perspective.

- Because ETL codifies and reuses data-moving procedures without requiring technical abilities to build code or scripts, it can help data professionals work more efficiently.

- Over time, ETL has developed to meet new integration needs, such as streaming data.

- Organizations need both ETL and ELT to bring data together, maintain accuracy, and provide the auditing that data warehousing, reporting, and analytics typically require.

How does ETL Work?

ETL (Extraction, Transformation, and Loading) is a simple, automated method for combining disparate data, whether it comes in different formats or from separate systems or data sources, and making it analysis-ready. Data governance, an essential aspect of the process, specifies the policies and strategies that govern data processing. This involves infrastructure and technology as well as the people in charge of managing the process. For businesses, data governance is essential because it provides more reliable data, lower costs, a single source of truth, and regulatory, legal, and industry compliance.

Extract:

The Extract phase is responsible for extracting data from the source system and making it available for processing. The extract step's primary goal is to get as much data as possible from the source system with as few resources as possible. The extract step should be designed so that it has no detrimental impact on the source system's performance or response time and does not cause locking.

There are several ways to perform the extract:

- Update notification: If the source system can provide a statement that a record has been changed and describe the change, this is the easiest way to get the data.

- Incremental extract: Some systems cannot send a notification that an update has occurred, but they can identify which records have been modified and provide an extract of only those records. Subsequent ETL steps must then detect and propagate those changes. Note that with a periodic (for example, daily) incremental extract, deleted records may not be handled correctly, because a deleted row no longer appears in the source.

- Full extract: Some systems cannot identify which data has changed at all, so a full extract is the only way to get the data out. To identify changes, a copy of the previous extract must be kept in the same format and compared with the new one. A full extract also handles deletions.
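An incremental extract is often implemented with a "watermark": the ETL job remembers the latest change timestamp it has seen and asks only for newer rows. The sketch below assumes a hypothetical `orders` table with an `updated_at` column stored as ISO-8601 text (so string comparison matches time order); neither the table nor the column comes from a specific product.

```python
import sqlite3

def incremental_extract(conn, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only if something new was found.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source system.
source = sqlite3.connect(":memory:")
source.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)"
)
source.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T09:00:00"),
        (2, 25.5, "2024-01-02T11:30:00"),
        (3, 7.25, "2024-01-03T08:15:00"),
    ],
)

# First run after the evening of 1 January: only orders 2 and 3 are returned.
changed, watermark = incremental_extract(source, "2024-01-01T23:59:59")

# A deletion leaves no row behind, so the next incremental run sees nothing.
source.execute("DELETE FROM orders WHERE id = 1")
after_delete, _ = incremental_extract(source, watermark)
```

The second call illustrates the caveat about deletions: the deleted order simply vanishes from the source, so a timestamp-based extract has nothing to report.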

The extract frequency is critical when using incremental or full extracts. Data volumes can run to tens of gigabytes, particularly for full extracts.
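When only full extracts are available, changes can be derived by comparing the new extract with the stored copy of the previous one. The following is a minimal sketch, assuming each extract has already been read into a dictionary keyed by primary key; the `diff_full_extracts` name and the sample data are illustrative only.

```python
def diff_full_extracts(previous, current):
    """Compare two full extracts, each a dict of primary key -> record."""
    inserted = {k: v for k, v in current.items() if k not in previous}
    deleted = {k: v for k, v in previous.items() if k not in current}
    updated = {
        k: current[k]
        for k in current.keys() & previous.keys()
        if current[k] != previous[k]
    }
    return inserted, updated, deleted

# Hypothetical extracts from two consecutive runs.
yesterday = {
    1: {"name": "Alice", "city": "Paris"},
    2: {"name": "Bob", "city": "Berlin"},
    3: {"name": "Carol", "city": "Madrid"},
}
today = {
    1: {"name": "Alice", "city": "Paris"},    # unchanged
    2: {"name": "Bob", "city": "Munich"},     # updated
    4: {"name": "Dave", "city": "Lisbon"},    # inserted
}

inserted, updated, deleted = diff_full_extracts(yesterday, today)
```

Because both extracts are held in memory, this approach suits small examples; with extracts in the tens of gigabytes the same comparison would normally be done in the database or in sorted batches.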


Transform:

In the transform stage, a set of rules is applied to convert the data from the source format to the target format. This includes converting all measured data to the same dimension (that is, a conformed dimension) using the same units so that the data can later be joined. The transformation process also covers joining data from several sources, generating aggregates, generating surrogate keys, sorting, deriving new calculated values, and applying advanced validation rules.
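Several of the transformations named above (unit conversion, joining, surrogate keys, aggregation, sorting, and validation) can be shown together in a short sketch. The `shipments` and `products` data, the field names, and the `transform` function are assumptions made purely for illustration.

```python
from collections import defaultdict
from itertools import count

LB_TO_KG = 0.45359237  # definition of the international pound in kilograms

def to_kg(value, unit):
    """Convert a weight to kilograms so all rows share the same unit."""
    if unit == "kg":
        return value
    if unit == "lb":
        return value * LB_TO_KG
    raise ValueError(f"unknown unit: {unit}")

def transform(shipments, products):
    surrogate = count(1)          # generator for surrogate keys 1, 2, 3, ...
    product_keys = {}
    totals = defaultdict(float)
    for s in shipments:
        code = s["product_code"]
        # Validation rules: known product and positive weight.
        if code not in products:
            raise ValueError(f"unknown product code: {code}")
        if s["weight"] <= 0:
            raise ValueError(f"non-positive weight for {code}")
        if code not in product_keys:
            product_keys[code] = next(surrogate)
        totals[code] += to_kg(s["weight"], s["unit"])   # aggregate per product
    rows = [
        {
            "product_key": product_keys[code],
            "product_name": products[code],             # join with product data
            "total_kg": round(totals[code], 3),
        }
        for code in totals
    ]
    return sorted(rows, key=lambda r: r["product_key"])

# Hypothetical source data from two systems.
shipments = [
    {"product_code": "A1", "weight": 10.0, "unit": "kg"},
    {"product_code": "B2", "weight": 22.0, "unit": "lb"},
    {"product_code": "A1", "weight": 5.0, "unit": "kg"},
]
products = {"A1": "Widget", "B2": "Gadget"}

result = transform(shipments, products)
```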

Load:

The load step must ensure that the load is performed correctly and with as few resources as possible. The target of the load process is often a database. To make the load process more efficient, it helps to disable any constraints and indexes before the load and re-enable them only after it finishes. To ensure consistency, the ETL tool must maintain referential integrity.
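The load pattern described above can be sketched with SQLite, which ships with Python. The `dim_customer` and `fact_sales` tables and the `bulk_load` helper are hypothetical. SQLite does not enforce foreign keys unless asked to, which here plays the role of "constraints disabled during the load"; `PRAGMA foreign_key_check` then reports any rows that break referential integrity. Other databases use different commands for disabling and re-enabling constraints and indexes.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_key INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE fact_sales (
        sale_id INTEGER PRIMARY KEY,
        customer_key INTEGER REFERENCES dim_customer(customer_key),
        amount REAL
    );
    CREATE INDEX idx_sales_customer ON fact_sales(customer_key);
    INSERT INTO dim_customer VALUES (1, 'Alice'), (2, 'Bob');
""")

def bulk_load(conn, rows):
    """Drop the index, load in one transaction, rebuild, then check integrity."""
    conn.execute("DROP INDEX IF EXISTS idx_sales_customer")
    with conn:  # a single transaction for the whole batch
        conn.executemany("INSERT INTO fact_sales VALUES (?, ?, ?)", rows)
    conn.execute("CREATE INDEX idx_sales_customer ON fact_sales(customer_key)")
    # Each violation row is (table, rowid, referenced table, constraint index).
    return conn.execute("PRAGMA foreign_key_check").fetchall()

# The third row points at a customer that does not exist.
violations = bulk_load(conn, [(1, 1, 10.0), (2, 2, 20.0), (3, 99, 5.0)])
index_names = [
    r[0] for r in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'index'"
    )
]
```

Rebuilding the index once after the batch avoids updating it for every inserted row, and running the integrity check afterwards catches orphaned rows that slipped in while enforcement was off.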

The Future of ETL

Traditional ETL methods, which rely on SQL, manual coding, and IT specialists, create a rigid, siloed environment that slows down data processing. Modern ETL tooling has opened the way for Analytic Process Automation (APA), a more efficient way to transform raw data from many sources into valuable insights that drive decisions.

Traditional ETL was a vital part of the data warehousing process. The primary purpose of ETL was to take data from a variety of sources, convert it according to business rules, and load it into the target database. An ETL process can take a few hours to a day to complete. The ETL operations are mostly batch and relational, and they are generated and executed using a mature ETL tool.

The world of data, on the other hand, is constantly changing. The Internet of Things is one of the drivers of this growth in data volume and velocity: consider IoT datasets such as sensor data, video feeds, mobile geolocation data, product usage data, social media data, and log files.


Getting Started With ETL

Well-tuned ETL software can help you make faster, more informed decisions. Alteryx Analytics Automation makes the ETL process simple, auditable, and efficient, and its low-code, no-code, drag-and-drop interface makes it usable by anyone.

Businesses can use the Alteryx Platform's versatility to:

- Extract data from numerous sources such as Snowflake, Tableau, Azure, and AWS using the Input Data Tool or pre-built connectors. Users can also create their own API connections using the open API.

- Transform messy, disparate data with drag-and-drop automation tools such as Filter, Data Cleansing, and Summarize.

- Get access to cutting-edge predictive, geographic, and statistical analytics.

- Load data to its intended destination using the Output Data or Write Data In-DB tools, a process that can be readily repeated.

Data Blending Starter Kit

Data blending is the practice of mixing data from several sources to create an actionable analytic dataset that can be used to make business decisions or drive specific business activities. This method enables businesses to derive value from various sources and conduct more in-depth analyses.

Data blending differs from data integration and data warehousing. Its primary goal is not to generate a single version of the truth that is kept in data warehouses or other systems of record. Instead, a business or data analyst blends data to build an analytic dataset that helps answer specific business questions.
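As a rough illustration of blending, the sketch below combines two hypothetical sources (a CRM customer list and a set of web transactions) on a shared `customer_id` field to produce one analytic dataset. The source names, fields, and the `blend` function are assumptions for the example; records that find no partner are set aside for review rather than silently dropped.

```python
def blend(customers, transactions, key="customer_id"):
    """Join transactions to customers on a shared key."""
    by_key = {c[key]: c for c in customers}
    blended, unmatched = [], []
    for t in transactions:
        customer = by_key.get(t[key])
        if customer is None:
            unmatched.append(t)                 # no matching customer record
        else:
            blended.append({**customer, **t})   # merge fields from both sources
    return blended, unmatched

# Hypothetical data from two separate systems.
crm_customers = [
    {"customer_id": 1, "name": "Alice", "segment": "Retail"},
    {"customer_id": 2, "name": "Bob", "segment": "Wholesale"},
]
web_transactions = [
    {"customer_id": 1, "amount": 30.0},
    {"customer_id": 2, "amount": 120.0},
    {"customer_id": 7, "amount": 15.0},
]

blended, unmatched = blend(crm_customers, web_transactions)
```

Neither source is modified: the blended rows are new dictionaries, so the original datasets remain available for other analyses.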

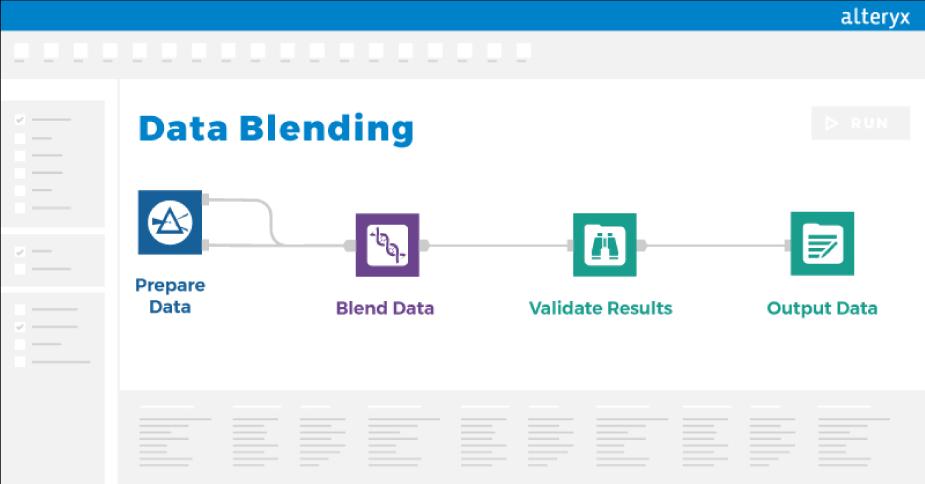

- The Data Blending Process:

While there are numerous methods for combining data, ranging from inner and outer joins to fuzzy matching and unions, data blending can be broken down into four easy steps.

- Preparing Data:

The first step is to determine what information would help answer the questions at hand. Identify relevant datasets from various sources, which may use various structures and file types. For the data sources to be combined, they must all share a common dimension. Modern analytics technology can automate and repeat the process of transforming these various types into a common structure, which allows for a meaningful blend without modifying the original data sources.

- Blending Data:

To ensure that the blend is seamless, combine data from multiple sources and tailor each join to the common dimension. Think of the ideal blended view, and include only the data required to answer the questions plus any fields that may add meaning to the answers when the analysis is done. Stakeholders should be able to understand and interpret the resulting dataset. Return to this step to add or remove data from a workflow or to expand the analysis.

- Validating Results:

It's no secret that merging data from many sources can lead to a range of compatibility and accuracy problems. Examine the data to verify the results, look for unmatched records, and ensure that the dataset is accurate and consistent. To begin, clean and arrange the data for the intended outcome. Then go over the new dataset to make sure the data types and sizes are what you want for analysis. Finally, examine the blend with a critical eye. This is a good time to check the results for any unmatched records and, if necessary, return to the data preparation steps performed before the blend.

- Outputting Data:

After the heavy lifting of data preparation and blending is complete, it's time to push the data into the appropriate business intelligence system so that the blended dataset can help achieve the goal. The outputs can be written back into a database, integrated into a business process, analyzed further using statistical, spatial, or predictive methods, or fed into data visualization software such as QlikView or Tableau.

- Starter kit:

This Starter Kit will help you master data blending and automate repetitive workflow procedures that combine data from various sources.

Starter Kit Features:

- Visualize Customer Transactions:

It helps in combining transactions and customers, which results in visual reporting insights that aid in identifying patterns and opportunities.

- Identify Non-Exact Matches with Fuzzy Matching:

It describes how to integrate similar but not exactly matching data into automated workflows for real-time insights by enabling quick fuzzy-matched blending.

- Calculating Ad Area Distribution:

It helps to enhance sales and improve ROI by combining spatial data to compute ad area distribution.
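To give a feel for what fuzzy matching does, here is a minimal sketch using Python's standard `difflib` module. It is not how the Starter Kit implements fuzzy matching; the company names, the `normalise` and `fuzzy_match` helpers, and the 0.6 similarity cutoff are all assumptions chosen for illustration.

```python
import difflib
import re

def normalise(name):
    """Lower-case and strip punctuation so trivial differences do not matter."""
    return re.sub(r"[^a-z0-9 ]", "", name.lower()).strip()

def fuzzy_match(name, candidates, cutoff=0.6):
    """Return the most similar candidate, or None if nothing is close enough."""
    lookup = {normalise(c): c for c in candidates}
    hits = difflib.get_close_matches(
        normalise(name), list(lookup), n=1, cutoff=cutoff
    )
    return lookup[hits[0]] if hits else None

# Hypothetical master list and incoming names from another source.
master_names = ["ACME Corporation", "Globex Incorporated", "Initech LLC"]

match_acme = fuzzy_match("Acme Corp.", master_names)
match_globex = fuzzy_match("Globex Inc", master_names)
match_none = fuzzy_match("Umbrella Co", master_names)
```

Raising the cutoff makes matching stricter (fewer false matches, more unmatched records), so the value is usually tuned against a sample of known pairs.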

Conclusion

Today's businesses need data to be quickly and easily accessible. As a result, there is a growing demand for transforming data into self-service systems. ETL is essential in such a system: it ensures that analysts and data scientists can access data from various platforms, which makes a big difference and allows businesses to gain new insights.


Usha Sri Mendi is a Senior Content Writer with more than three years of experience writing for Mindmajix on various IT platforms such as Tableau, Linux, and Cloud Computing. She spends her time researching various technologies and startups. Reach out to her via LinkedIn and Twitter.